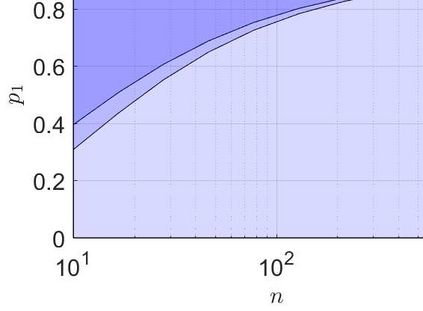

We consider the problem of learning from training data obtained in different contexts, where the underlying context distribution is unknown and is estimated empirically. We develop a robust method that takes into account the uncertainty of the context distribution. Unlike the conventional and overly conservative minimax approach, we focus on excess risks and construct distribution sets with statistical coverage to achieve an appropriate trade-off between performance and robustness. The proposed method is computationally scalable and shown to interpolate between empirical risk minimization and minimax regret objectives. Using both real and synthetic data, we demonstrate its ability to provide robustness in worst-case scenarios without harming performance in the nominal scenario.
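
The interpolation claim can be illustrated with a toy, population-level sketch. Everything below is an assumption made for illustration and is not the method from the paper. Each context k has a hypothetical excess risk (θ − μ_k)². The context distribution is estimated from counts. The distribution set is a total-variation ball of radius ρ around the empirical estimate. Optionally, ρ is set from an L1 concentration bound so that the set contains the true context distribution with probability at least 1 − δ. With ρ = 0 the objective is the empirically weighted excess risk, which differs from the ERM objective only by a constant. With ρ = 1 the ball is the whole simplex, because total variation never exceeds 1, and the objective becomes the worst-case excess risk, i.e., minimax regret.

```python
import math


def excess_risks(theta, means):
    # Hypothetical per-context model (assumption): squared loss around a context
    # mean mu_k, so the excess risk over the best context-specific predictor
    # is (theta - mu_k)^2.
    return [(theta - mu) ** 2 for mu in means]


def worst_case_mixture(risks, p_hat, rho):
    # sup_p sum_k p_k * r_k over {p in simplex : TV(p, p_hat) <= rho}.
    # The supremum moves up to rho probability mass from the lowest-risk
    # contexts onto the single highest-risk context.
    k_max = max(range(len(risks)), key=lambda k: risks[k])
    p = list(p_hat)
    budget = min(rho, 1.0 - p[k_max])
    moved = 0.0
    for k in sorted(range(len(risks)), key=lambda k: risks[k]):
        if moved >= budget:
            break
        if k == k_max:
            continue
        take = min(p[k], budget - moved)
        p[k] -= take
        moved += take
    p[k_max] += moved
    return sum(pk * rk for pk, rk in zip(p, risks))


def tv_radius(n, num_contexts, delta):
    # One possible coverage choice (assumption): the L1 deviation bound
    # P(||p_hat - p||_1 >= eps) <= (2^K - 2) exp(-n eps^2 / 2) (Weissman et al., 2003),
    # solved for eps at level delta. TV distance is half the L1 distance.
    if num_contexts < 2:
        return 0.0
    eps = math.sqrt(2.0 / n * math.log((2 ** num_contexts - 2) / delta))
    return min(1.0, eps / 2.0)


def robust_fit(means, counts, rho, iters=200):
    # Minimize the worst-case expected excess risk by ternary search. The
    # objective is convex in theta (a supremum of convex functions), and its
    # minimizer lies in [min(means), max(means)].
    n = sum(counts)
    p_hat = [c / n for c in counts]

    def objective(theta):
        return worst_case_mixture(excess_risks(theta, means), p_hat, rho)

    lo, hi = min(means), max(means)
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if objective(m1) < objective(m2):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2.0


means = [0.0, 1.0, 4.0]   # hypothetical per-context optima
counts = [60, 30, 10]     # hypothetical number of samples observed per context

theta_erm = robust_fit(means, counts, rho=0.0)   # empirical weights only
theta_mmr = robust_fit(means, counts, rho=1.0)   # whole simplex: minimax regret
rho = tv_radius(sum(counts), len(means), delta=0.05)
theta_rob = robust_fit(means, counts, rho=rho)   # coverage-calibrated set
print(theta_erm, theta_rob, theta_mmr)
```

With these hypothetical numbers, `theta_erm` is close to the empirically weighted mean 0.7, `theta_mmr` is close to 2.0 (the midpoint of the extreme context optima), and the coverage-calibrated estimate falls in between. The total-variation ball is used here only because its worst case has a simple greedy form; other distribution sets would work in the same role.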
