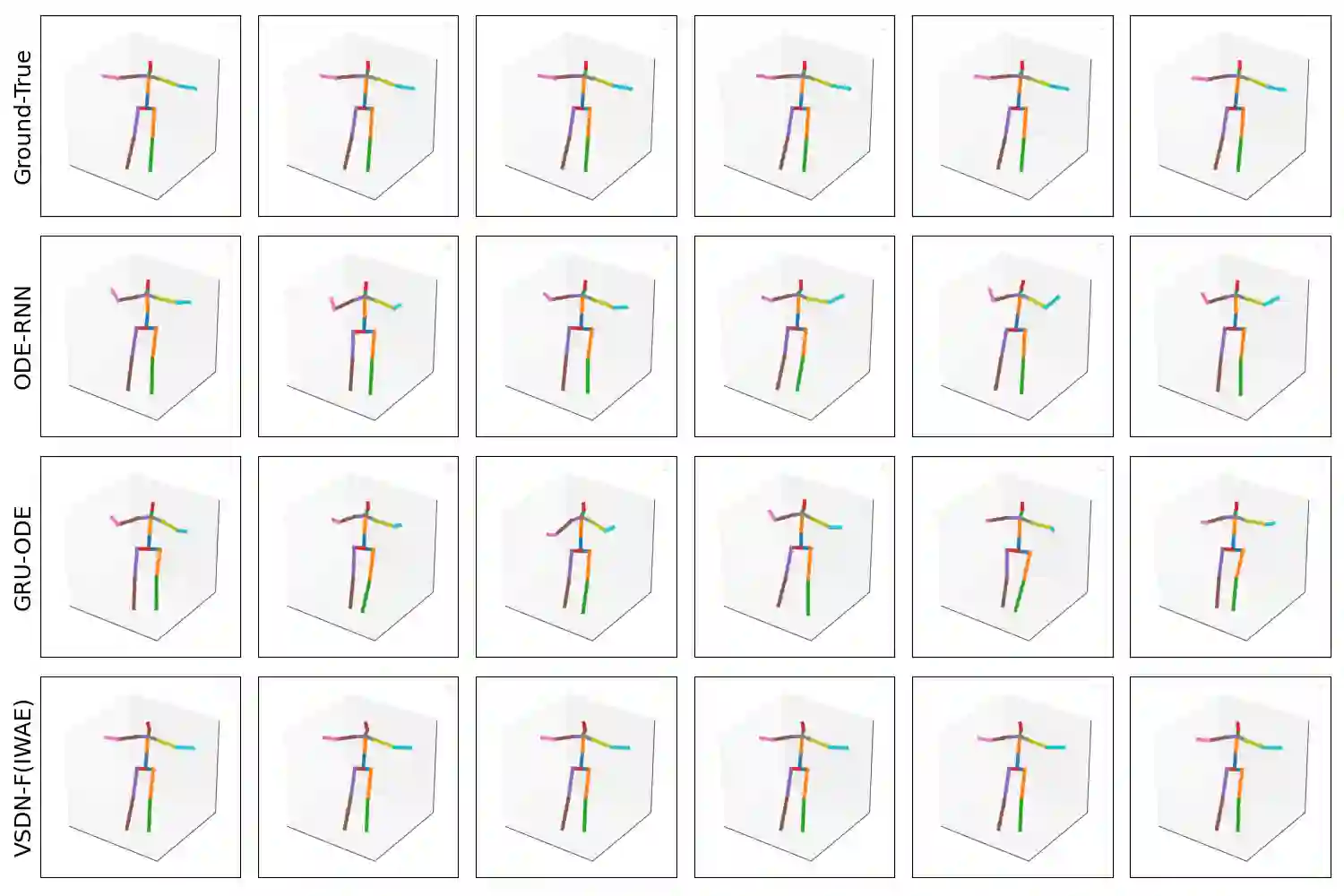

Learning continuous-time stochastic dynamics is a fundamental problem in modeling sporadic time series, whose observations are irregular and sparse in both time and dimension. When a system's latent states and observed data are high-dimensional, it is generally impossible to derive an exact continuous-time stochastic process that describes its behavior. To address this problem, we apply the variational Bayesian method and propose a flexible continuous-time stochastic recurrent neural network, the Variational Stochastic Differential Network (VSDN), which embeds the complicated dynamics of sporadic time series in neural stochastic differential equations (SDEs). VSDNs capture the stochastic dependency among latent states and observations with deep neural networks. We also incorporate two differential Evidence Lower Bounds (ELBOs) to train the models efficiently. Through comprehensive experiments, we show that VSDNs outperform state-of-the-art continuous-time deep learning models and achieve remarkable performance on prediction and interpolation tasks for sporadic time series.
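To make the setting concrete, the following minimal sketch simulates a scalar latent state governed by an SDE of the generic form dz = f(z, t) dt + g(z, t) dW and reads it out at irregularly spaced observation times. It is not the VSDN architecture or its training procedure: the Euler-Maruyama integrator, the function names, and the hand-written `drift` and `diffusion` (simple stand-ins for what would be learned neural networks) are all assumptions chosen for illustration.

```python
import math
import random


def euler_maruyama(drift, diffusion, z0, times, rng, max_step=0.01):
    """Simulate dz = drift(z, t) dt + diffusion(z, t) dW.

    Returns the state at every entry of `times`, which may be
    irregularly spaced (as in a sporadic time series).
    """
    z, t = z0, times[0]
    states = [z0]
    for t_next in times[1:]:
        # Split each (possibly long) gap into sub-steps no larger than max_step.
        n_steps = max(1, math.ceil((t_next - t) / max_step))
        dt = (t_next - t) / n_steps
        for _ in range(n_steps):
            # Brownian increment over dt has variance dt.
            dw = rng.gauss(0.0, math.sqrt(dt))
            z = z + drift(z, t) * dt + diffusion(z, t) * dw
            t += dt
        t = t_next  # snap to the observation time to avoid float drift
        states.append(z)
    return states


# Hypothetical stand-ins for learned drift and diffusion networks.
def drift(z, t):
    return -0.5 * z  # mean-reverting drift (assumption)


def diffusion(z, t):
    return 0.3  # constant noise scale (assumption)


# Irregular observation times, as in a sporadic time series.
observation_times = [0.0, 0.13, 0.9, 1.0, 2.75]
rng = random.Random(0)
latent_path = euler_maruyama(drift, diffusion, 1.0, observation_times, rng)
```

In a neural SDE, `drift` and `diffusion` would be parameterized by networks and fitted by maximizing an evidence lower bound; here they are fixed so that the sketch stays self-contained.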
