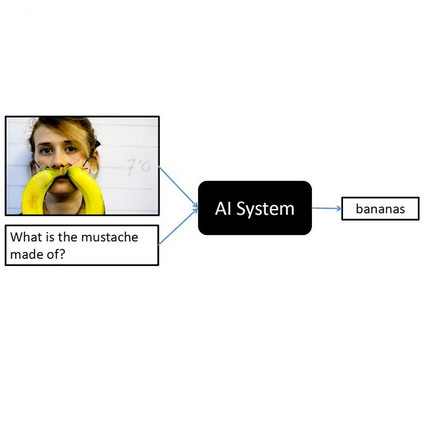

There has been a growing interest in solving Visual Question Answering (VQA) tasks that require the model to reason beyond the content present in the image. In this work, we focus on questions that require commonsense reasoning. In contrast to previous methods which inject knowledge from static knowledge bases, we investigate the incorporation of contextualized knowledge using Commonsense Transformer (COMET), an existing knowledge model trained on human-curated knowledge bases. We propose a method to generate, select, and encode external commonsense knowledge alongside visual and textual cues in a new pre-trained Vision-Language-Commonsense transformer model, VLC-BERT. Through our evaluation on the knowledge-intensive OK-VQA and A-OKVQA datasets, we show that VLC-BERT is capable of outperforming existing models that utilize static knowledge bases. Furthermore, through a detailed analysis, we explain which questions benefit, and which don't, from contextualized commonsense knowledge from COMET.

翻译:对解决视觉问题解答(VQA)任务的兴趣日益浓厚,这要求模型超越图像中的内容来理解。在这项工作中,我们侧重于需要常识推理的问题。与以前从静态知识库注入知识的方法相比,我们调查使用常识变换器(COMET)纳入背景化知识的情况。 常识变换器(COMET)是受过人类知识基础培训的现有知识模型。我们提出一种方法,在经过预先训练的新的视觉-语言-Commonsense变异器(VLC-BERT)模型中,生成、选择和编码外部常识知识,同时生成、选择和编码视觉和文字提示。我们通过对知识密集型的 OK-VQA 和 A-OKVQA 数据集的评估,我们表明VLC-BERT能够超越使用静态知识库的现有模型。此外,通过详细分析,我们解释哪些问题从知识变现的常识学知识库中获益,哪些问题并不从CONT的真识化常识中获益。