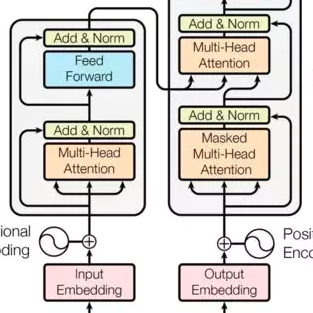

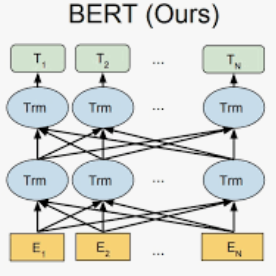

Transformer-based models such as BERT, GPT-3 and ChatGPT have achieved state-of-the-art performance across a range of natural language processing tasks. However, their massive computation, huge memory footprint, and resulting high latency are an unavoidable challenge for cloud deployments with strict real-time requirements. To tackle this issue, we propose BBCT, a block-wise bit-compression method for transformers that requires no retraining. Our method achieves more fine-grained compression of the whole transformer, including embedding, matrix multiplication, GELU, softmax, layer normalization, and all intermediate results. As a case study, we apply BBCT to an efficient BERT model. Our benchmark results on the General Language Understanding Evaluation (GLUE) show that BBCT incurs less than a 1% accuracy drop on most tasks.
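To make the idea of block-wise compression more concrete, here is a minimal sketch of generic block-wise symmetric quantization in plain Python. It is not the BBCT algorithm itself: the abstract does not specify the block size, the bit width, or how scales are chosen, so `block_size`, `bits`, the max-abs scale rule, and the function names `blockwise_quantize` and `blockwise_dequantize` are all assumptions made for illustration.

```python
def blockwise_quantize(values, block_size=4, bits=8):
    """Quantize a list of floats block by block, one scale per block.

    Illustrative only: the block size, bit width, and max-abs scaling
    are assumptions, not details taken from the BBCT abstract.
    """
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        max_abs = max(abs(v) for v in block)
        # Each block gets its own scale; avoid dividing by zero.
        scale = max_abs / qmax if max_abs > 0 else 1.0
        # Round to the nearest integer code and clamp to the signed range.
        codes = [max(-qmax, min(qmax, round(v / scale))) for v in block]
        blocks.append((scale, codes))
    return blocks


def blockwise_dequantize(blocks):
    """Rebuild approximate float values from (scale, codes) pairs."""
    return [scale * code for scale, codes in blocks for code in codes]


# Usage: small and large values sit in different blocks.
values = [0.01, -0.02, 0.015, 0.005, 10.0, -8.0, 6.5, 9.0]
per_block = blockwise_dequantize(blockwise_quantize(values, block_size=4))
per_tensor = blockwise_dequantize(blockwise_quantize(values, block_size=len(values)))
```

Because each block has its own scale, the small values in the first block keep their precision instead of being rounded to zero by a scale chosen for the largest value in the whole tensor. That is the general motivation for compressing at block granularity rather than per tensor.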
