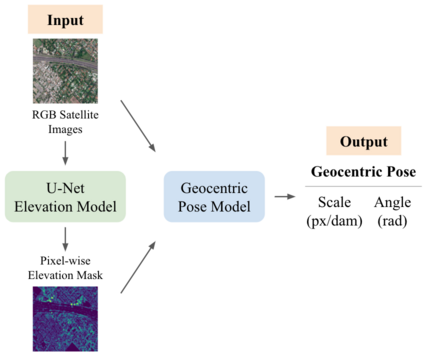

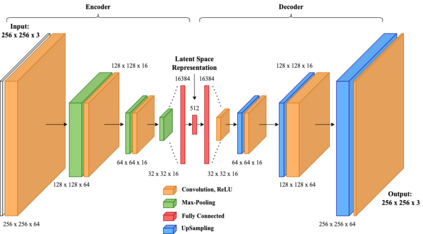

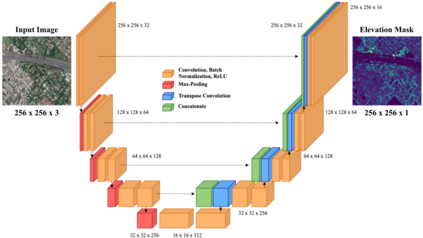

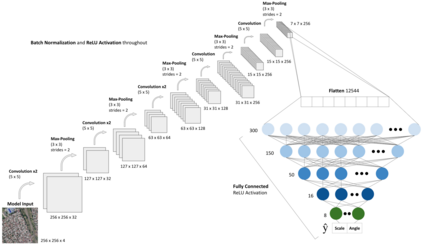

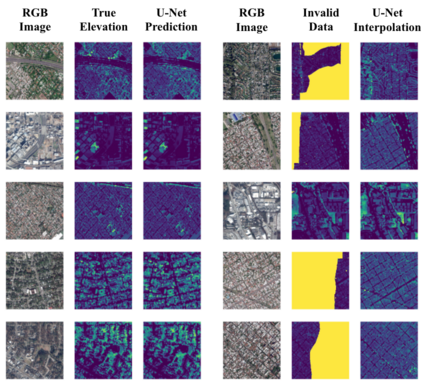

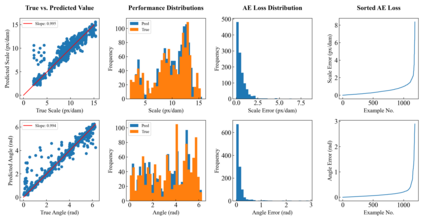

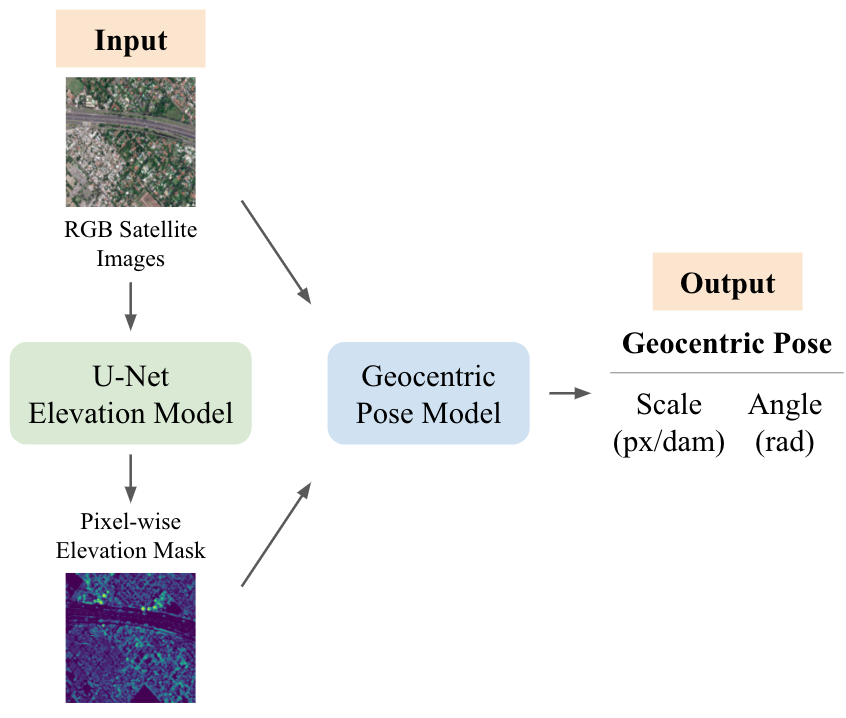

Roughly 6,800 natural disasters occur worldwide each year, and this alarming number continues to grow due to the effects of climate change. Effective methods for improving natural disaster response include change detection, map alignment, and vision-aided navigation, which enable the time-efficient delivery of life-saving aid. Current software functions optimally only on nadir images, which are taken from directly overhead, ninety degrees above ground level. Because this software does not generalize to oblique images, it becomes necessary to compute an image's geocentric pose, which is its spatial orientation with respect to gravity. This deep learning investigation presents three convolutional models to predict geocentric pose using 5,923 nadir and oblique red, green, and blue (RGB) satellite images of cities worldwide. The first model is an autoencoder that condenses the 256 × 256 × 3 images to 32 × 32 × 16 latent space representations, demonstrating the ability to learn useful features from the data. The second model is a U-Net fully convolutional network with skip connections that predicts each image's corresponding pixel-level elevation mask. This model achieves a median absolute deviation of 0.335 meters and an R² of 0.865 on test data. The elevation masks are then concatenated with the RGB images to form four-channel inputs for the third model, which predicts each image's rotation angle and scale, the components of its geocentric pose. This deep convolutional neural network achieves an R² of 0.943 on test data, significantly outperforming previous models. The high-accuracy software built in this study contributes to mapping and navigation procedures to accelerate disaster relief and save human lives.
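The data flow between the second and third models, and the two reported metrics, can be illustrated with a minimal NumPy sketch. This is not the study's code: the function names are hypothetical, the images are random placeholders, and "median absolute deviation" is assumed here to mean the median of the absolute prediction errors.

```python
import numpy as np


def stack_rgb_and_elevation(rgb, elevation):
    """Append an (H, W) elevation mask to an (H, W, 3) RGB image as a fourth channel."""
    if rgb.shape[:2] != elevation.shape:
        raise ValueError("RGB image and elevation mask must share height and width")
    return np.concatenate([rgb, elevation[..., np.newaxis]], axis=-1)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def median_absolute_error(y_true, y_pred):
    """Median of |y_true - y_pred| (assumed reading of the reported metric)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.median(np.abs(y_true - y_pred)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    rgb = rng.random((256, 256, 3))      # placeholder RGB image
    elevation = rng.random((256, 256))   # placeholder elevation mask

    four_channel = stack_rgb_and_elevation(rgb, elevation)
    print(four_channel.shape)            # (256, 256, 4)

    # Size of the autoencoder's latent representation relative to its input.
    input_size = 256 * 256 * 3
    latent_size = 32 * 32 * 16
    print(input_size / latent_size)      # 12.0
```

With the stated dimensions, the autoencoder's latent representation holds one twelfth as many values as the input image.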
