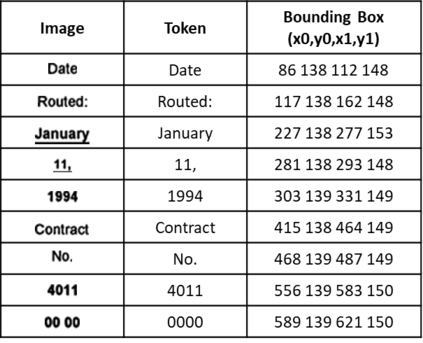

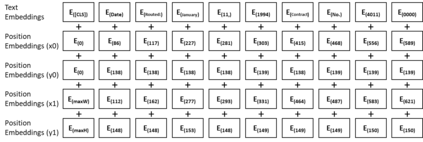

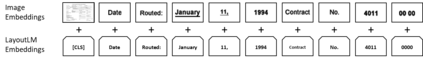

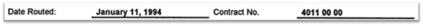

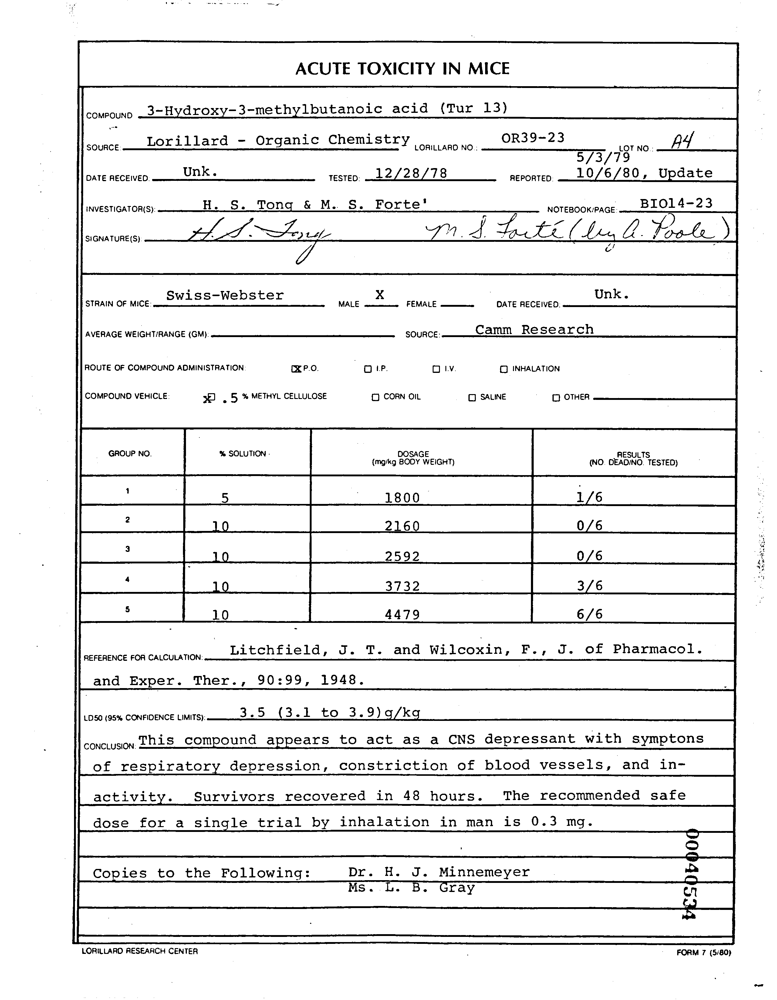

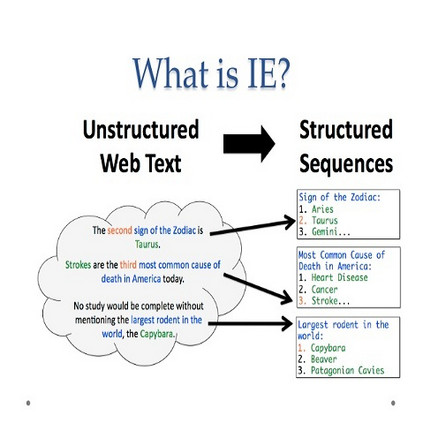

Pre-training techniques have been verified successfully in a variety of NLP tasks in recent years. Despite the wide spread of pre-training models for NLP applications, they almost focused on text-level manipulation, while neglecting the layout and style information that is vital for document image understanding. In this paper, we propose \textbf{LayoutLM} to jointly model the interaction between text and layout information across scanned document images, which is beneficial for a great number of real-world document image understanding tasks such as information extraction from scanned documents. We also leverage the image features to incorporate the style information of words in LayoutLM. To the best of our knowledge, this is the first time that text and layout are jointly learned in a single framework for document-level pre-training, leading to significant performance improvement in downstream tasks for document image understanding.

翻译:近年来,培训前技术在各种国家语言方案任务中都得到了成功验证。尽管国家语言方案应用程序的培训前模式分布广泛,但它们几乎侧重于文字操作,而忽略了对文件图像理解至关重要的布局和风格信息。在本文中,我们提议通过扫描文件图像,共同模拟文本和布局信息之间的互动,这有利于大量真实世界文件图像理解任务,如从扫描文件中提取信息。我们还利用图像功能将文字文字的风格信息纳入DLM。 据我们所知,这是首次在文件水平预培训单一框架内共同学习文字和布局,从而大大改进了文件图像理解下游任务的业绩。