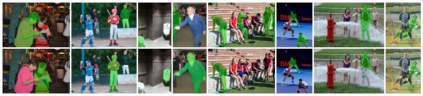

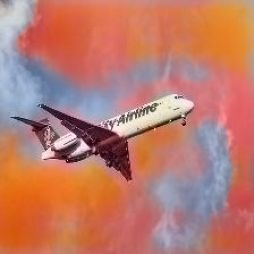

How best to integrate linguistic and perceptual processing in multi-modal tasks involving language and vision is an important open problem. In this work, we argue that the common practice of using language in a top-down manner, to direct visual attention over high-level visual features, may not be optimal. We hypothesize that also using language to condition the bottom-up processing from pixels to high-level features can improve overall performance. To support our claim, we propose a U-Net-based model and perform experiments on two language-vision dense-prediction tasks: referring expression segmentation and language-guided image colorization. We compare results where either one or both of the top-down and bottom-up visual branches are conditioned on language. Our experiments reveal that using language to control the filters for bottom-up visual processing, in addition to top-down attention, leads to better results on both tasks and achieves competitive performance. Our linguistic analysis suggests that bottom-up conditioning improves object segmentation, especially when the input text refers to low-level visual concepts. Code is available at https://github.com/ilkerkesen/bvpr.
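
The core idea of letting language control the filters of the bottom-up visual path can be illustrated with a small NumPy sketch. It assumes, as a simplification that is not necessarily the authors' design, that a sentence embedding is mapped by a linear layer to a bank of 1x1 convolution kernels, which are then applied to a visual feature map. The names `generate_filters`, `conv1x1`, and `conditioned_block`, and all shapes, are hypothetical; the actual model lives in the linked repository.

```python
import numpy as np


def generate_filters(text_emb, weight, bias, in_ch):
    """Map a sentence embedding (D,) to 1x1 conv kernels of shape (out_ch, in_ch).

    Hypothetical linear filter generator: weight is (D, out_ch * in_ch).
    """
    flat = text_emb @ weight + bias
    if flat.size % in_ch != 0:
        raise ValueError("generator output size must be a multiple of in_ch")
    return flat.reshape(-1, in_ch)


def conv1x1(features, kernels):
    """1x1 convolution: features (in_ch, H, W), kernels (out_ch, in_ch) -> (out_ch, H, W)."""
    return np.einsum("oc,chw->ohw", kernels, features)


def conditioned_block(features, text_emb, weight, bias):
    """One bottom-up stage whose filters are generated from the text, followed by ReLU."""
    kernels = generate_filters(text_emb, weight, bias, features.shape[0])
    return np.maximum(conv1x1(features, kernels), 0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    D, C_IN, C_OUT, H, W = 16, 8, 4, 5, 5
    weight = 0.1 * rng.standard_normal((D, C_OUT * C_IN))
    bias = np.zeros(C_OUT * C_IN)
    features = rng.standard_normal((C_IN, H, W))  # stand-in for a visual feature map

    # Two random stand-ins for embeddings of different referring expressions.
    emb_a = rng.standard_normal(D)
    emb_b = rng.standard_normal(D)

    out_a = conditioned_block(features, emb_a, weight, bias)
    out_b = conditioned_block(features, emb_b, weight, bias)
    print(out_a.shape)                # (4, 5, 5)
    print(np.allclose(out_a, out_b))  # False: same image, different text, different response
```

Because the kernels are a function of the text, the same feature map produces different responses for different expressions. If only the top-down branch were conditioned on language, these bottom-up filters would instead be fixed weights shared across all inputs.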

翻译:如何最好地将语言和感知处理纳入涉及语言和视觉的多模式任务中,这是一个重要的未决问题。在这项工作中,我们争辩说,以自上而下的方式使用语言,对高层次视觉特征进行视觉关注的常见做法可能不是最佳做法。我们假设,语言的使用也会为从像素到高层次特征的自下而上处理提供条件,从而有利于总体性能。为了支持我们的要求,我们提议了一个基于 U-Net 的模型,并对两种语言视觉密集任务进行实验:提及表达分解和语言引导图像的颜色化。我们比较了上下和自下而上的视觉分支中的一种或两种以语言为条件的结果。我们的实验显示,除了上而下而上而上,使用语言控制自下而上视觉处理的过滤器可以改善两个任务的结果并实现竞争性的性能。我们的语言分析表明,自下而上而起的调节改进对象的分化,特别是当输入文本提到低层次的视觉概念时。代码可在 https://github.com/ilkerke/vprur.