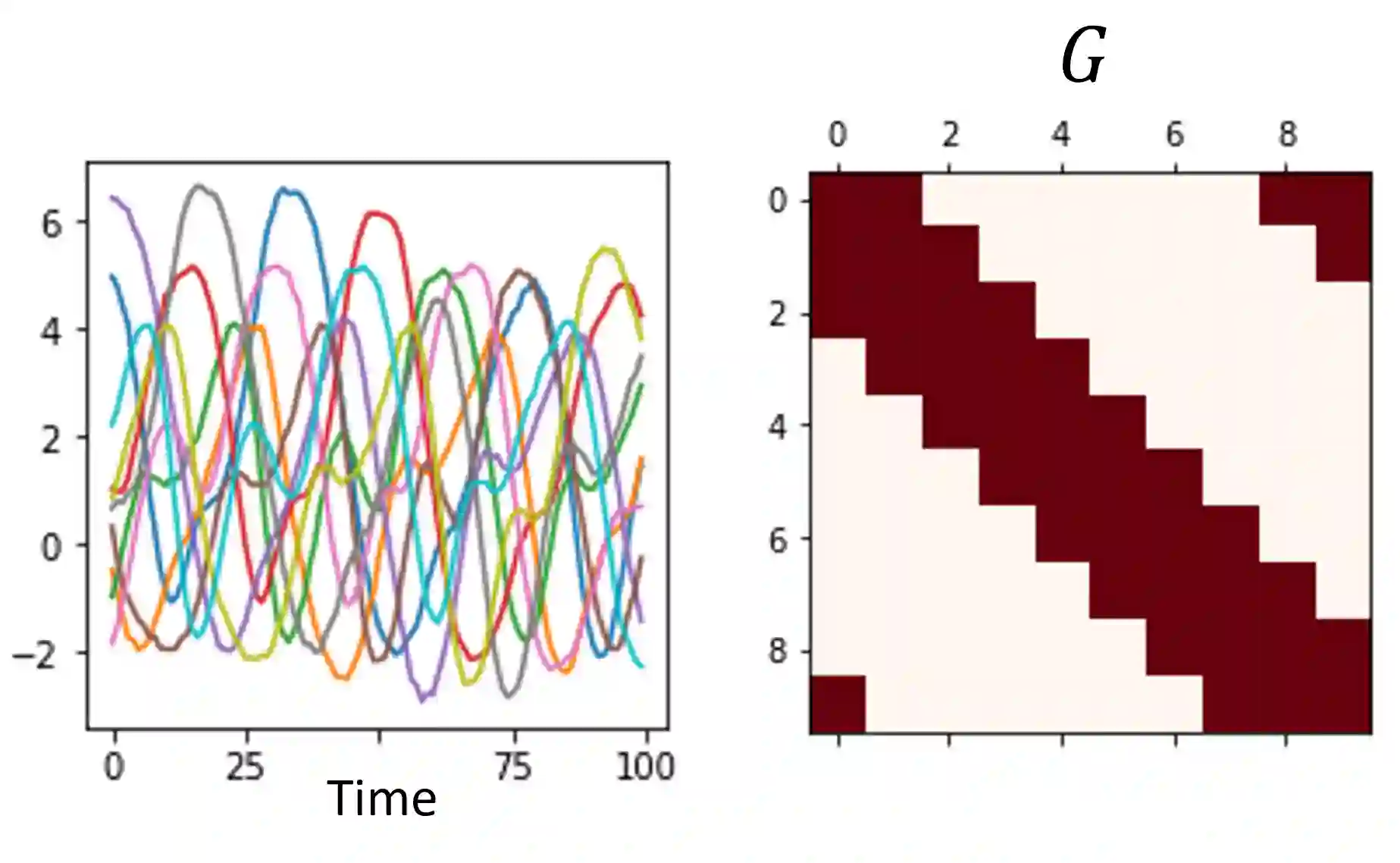

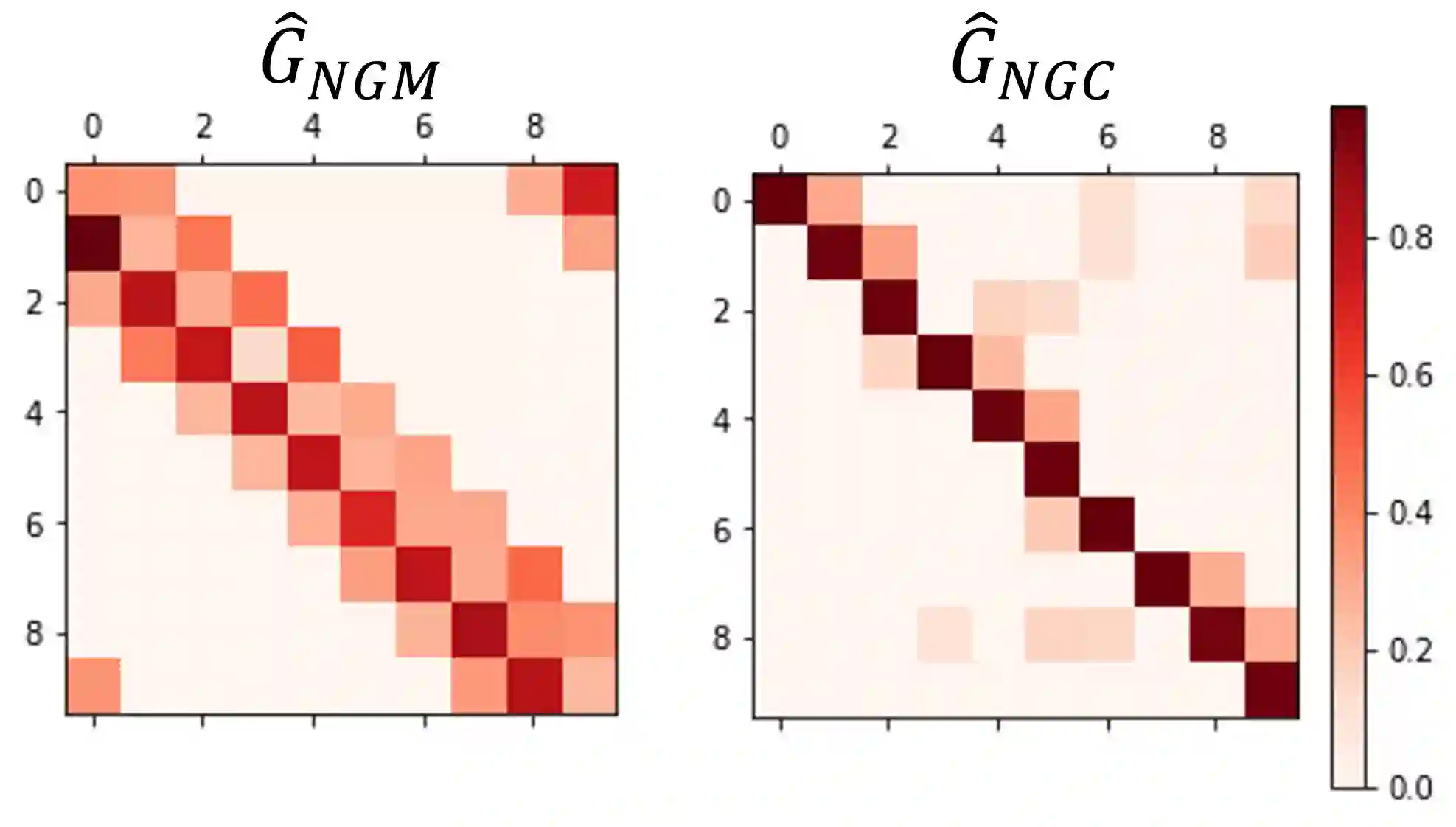

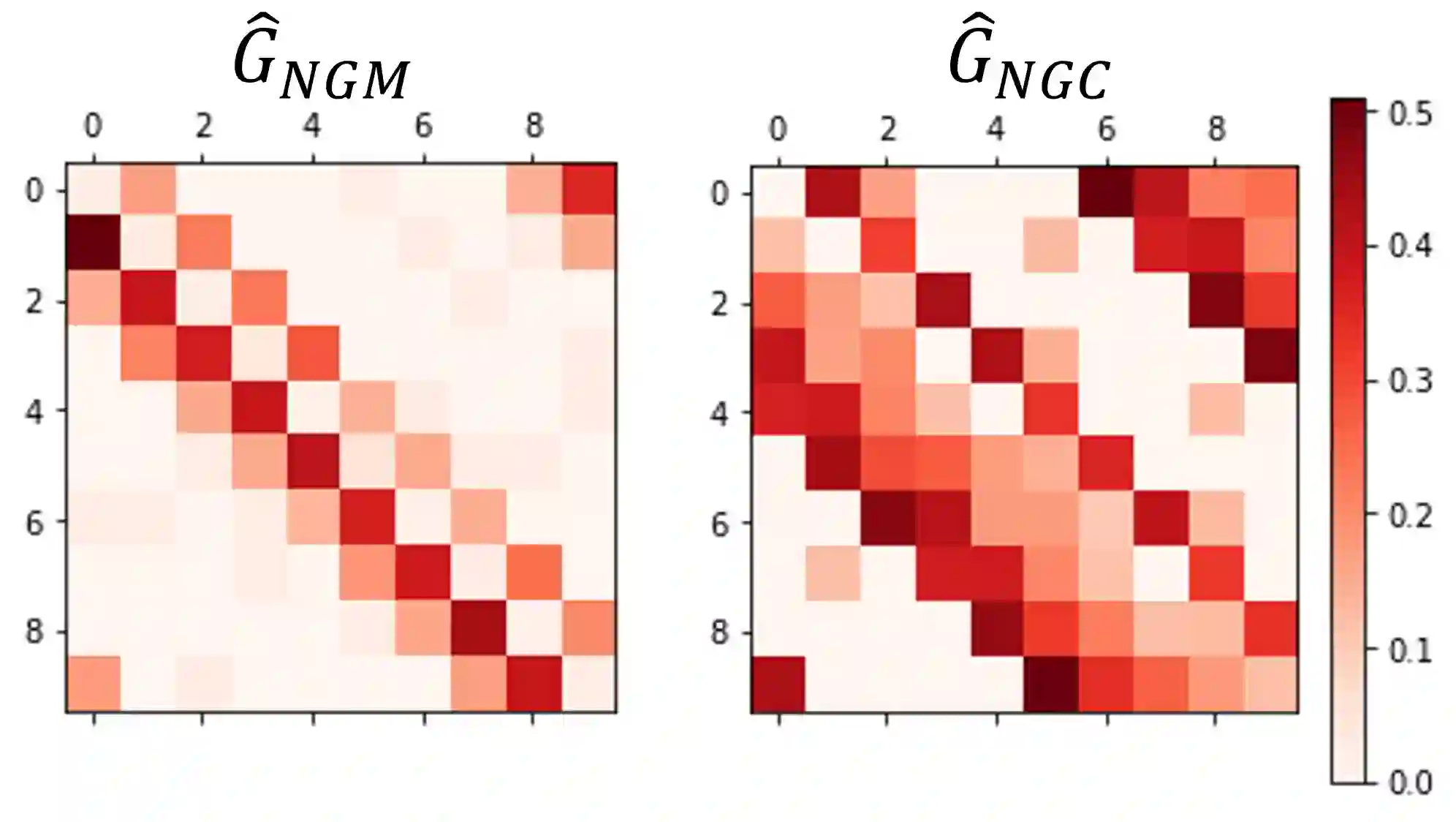

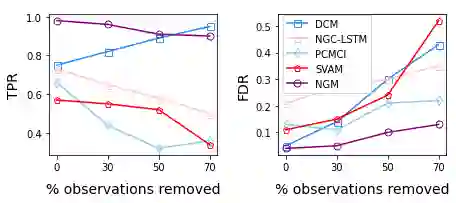

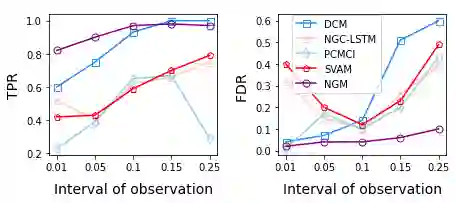

The discovery of structure from time series data is a key problem in fields of study working with complex systems. Most identifiability results and learning algorithms assume the underlying dynamics to be discrete in time. Comparatively few, in contrast, explicitly define dependencies in infinitesimal intervals of time, independently of the scale of observation and of the regularity of sampling. In this paper, we consider score-based structure learning for the study of dynamical systems. We prove that for vector fields parameterized in a large class of neural networks, least squares optimization with adaptive regularization schemes consistently recovers directed graphs of local independencies in systems of stochastic differential equations. Using this insight, we propose a score-based learning algorithm based on penalized Neural Ordinary Differential Equations (modelling the mean process) that we show to be applicable to the general setting of irregularly-sampled multivariate time series and to outperform the state of the art across a range of dynamical systems.
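As a rough illustration of the idea of reading a directed graph off a sparsity-penalized least-squares fit of a vector field, here is a minimal sketch. It is not the paper's algorithm. It swaps in a linear vector field `dx/dt = A x` for the neural network, a plain L1 penalty solved by proximal gradient descent (ISTA) for the adaptive regularization scheme, and finite differences on a regular grid for the Neural ODE solver. The names `simulate`, `fit_sparse_field`, `A_true`, the penalty weight `lam`, and the 0.5 edge threshold are all hypothetical choices made for this example.

```python
import numpy as np

def simulate(A, x0, dt, n_steps):
    """Euler-integrate the noise-free linear system dx/dt = A x (illustrative stand-in)."""
    x = np.asarray(x0, dtype=float)
    path = [x]
    for _ in range(n_steps):
        x = x + dt * (A @ x)
        path.append(x)
    return np.stack(path)

def fit_sparse_field(Z, Y, lam, n_iter=2000):
    """Minimize (1/2n) * ||Y - Z W^T||_F^2 + lam * ||W||_1 by proximal gradient (ISTA)."""
    n, d = Z.shape
    # Step size 1/L, where L is the largest eigenvalue of Z^T Z / n.
    step = n / np.linalg.norm(Z, 2) ** 2
    W = np.zeros((d, d))
    for _ in range(n_iter):
        grad = (W @ Z.T - Y.T) @ Z / n          # gradient of the least-squares term
        W = W - step * grad
        # Soft-thresholding: the proximal operator of the L1 penalty.
        W = np.sign(W) * np.maximum(np.abs(W) - step * lam, 0.0)
    return W

# Hypothetical ground truth: a chain X1 -> X2 -> X3 with self-decay on each variable.
A_true = np.array([[-1.0,  0.0,  0.0],
                   [ 1.0, -1.0,  0.0],
                   [ 0.0,  1.0, -1.0]])
dt = 0.05

# One trajectory per unit-vector initial condition, sampled on a regular grid.
paths = [simulate(A_true, x0, dt, 50) for x0 in np.eye(3)]
Z = np.concatenate([p[:-1] for p in paths])                  # observed states
Y = np.concatenate([(p[1:] - p[:-1]) / dt for p in paths])   # finite-difference derivatives

W_hat = fit_sparse_field(Z, Y, lam=1e-3)

# graph[j, k] is True when variable k is estimated to enter the dynamics of variable j.
graph = np.abs(W_hat) > 0.5
print(graph.astype(int))
```

In this toy setting an entry `graph[j, k]` being false plays the role of a local independence: the estimated dynamics of variable `j` do not depend on variable `k`. In the setting the abstract describes, the analogous penalty would presumably act on the parameters of a neural vector field, and the fit would go through an ODE solver rather than finite differences, which is what allows irregular sampling times.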
