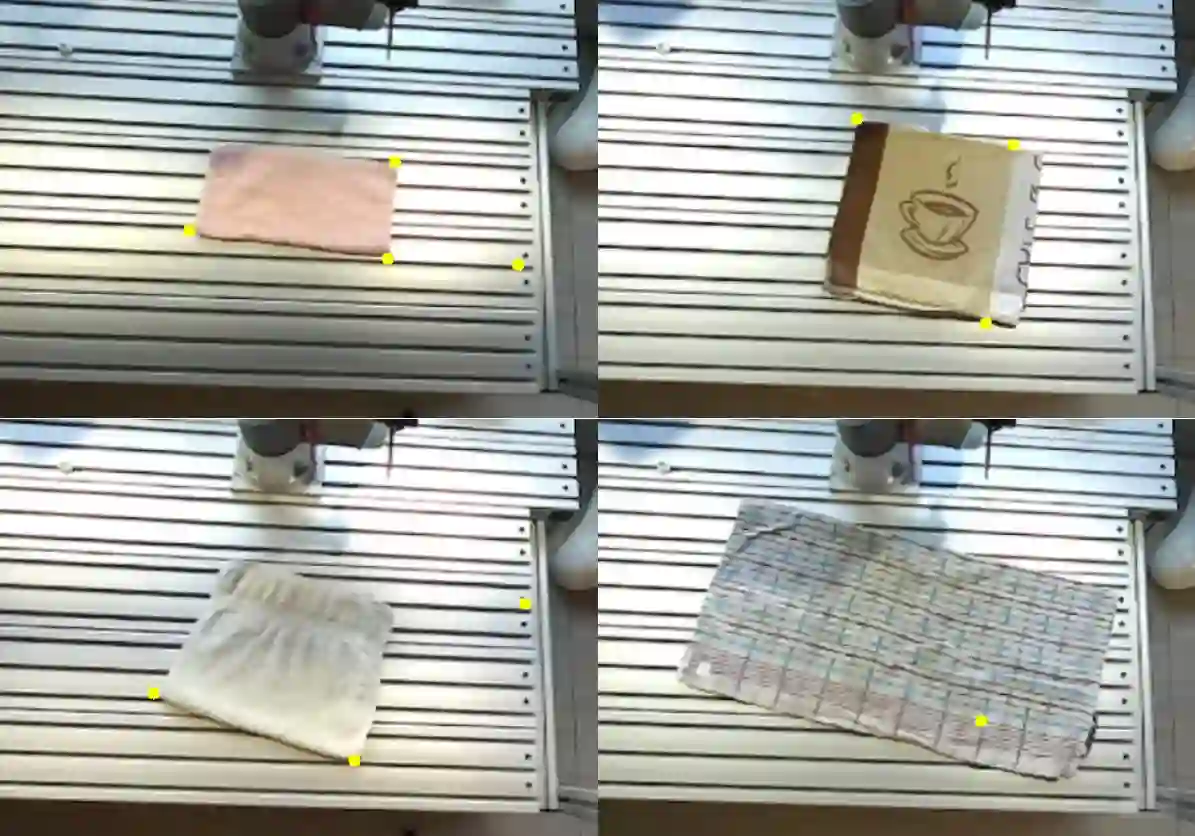

Robotic cloth manipulation is challenging because cloth is highly deformable, which makes determining its full state infeasible. However, for cloth folding, it suffices to know the positions of a few semantic keypoints. Convolutional neural networks (CNNs) can be used to detect these keypoints, but they require large amounts of annotated data, which is expensive to collect. To overcome this, we propose to learn these keypoint detectors purely from synthetic data, enabling low-cost data collection. In this paper, we procedurally generate images of towels and use them to train a CNN. We evaluate the performance of this detector for folding towels on a unimanual robot setup and find that the grasp and fold success rates are 77% and 53%, respectively. We conclude that learning keypoint detectors from synthetic data for cloth folding and related tasks is a promising research direction. We also discuss some failures and relate them to future work. A video of the system, the codebase, and more details on the CNN architecture and the training setup can be found at https://github.com/tlpss/workshop-icra-2022-cloth-keypoints.git.
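
The key argument for synthetic data is that, when the towel geometry is generated procedurally, the keypoint annotations come for free. The abstract does not state how labels are encoded or what the CNN outputs, so the sketch below rests on stated assumptions: the towel is reduced to a flat, rotated rectangle whose four corners serve as the keypoints, and the detector is assumed to regress a Gaussian heatmap (a common choice for keypoint detection, not necessarily the one used in this work). The helpers `random_towel_corners`, `gaussian_heatmap` and `extract_keypoints` are hypothetical, and no image rendering or network training is included.

```python
import math
import random


def random_towel_corners(size, rng):
    """Hypothetical procedural generator (assumption): place a flat, rotated
    rectangular 'towel' in a size x size image and return its 4 corners (x, y).
    The ranges keep every corner inside a square image."""
    cx = rng.uniform(0.3, 0.7) * size
    cy = rng.uniform(0.3, 0.7) * size
    half_w = rng.uniform(0.1, 0.2) * size
    half_h = rng.uniform(0.1, 0.2) * size
    angle = rng.uniform(0.0, 2.0 * math.pi)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    corners = []
    for dx, dy in [(-half_w, -half_h), (half_w, -half_h),
                   (half_w, half_h), (-half_w, half_h)]:
        # Rotate the local corner offset, then translate to the towel centre.
        corners.append((cx + cos_a * dx - sin_a * dy,
                        cy + sin_a * dx + cos_a * dy))
    return corners


def gaussian_heatmap(size, keypoints, sigma=2.0):
    """Assumed training target: each pixel holds the maximum, over all
    keypoints, of an unnormalised Gaussian centred on that keypoint,
    so every value lies in [0, 1]."""
    heatmap = [[0.0] * size for _ in range(size)]
    for kx, ky in keypoints:
        for y in range(size):
            for x in range(size):
                d2 = (x - kx) ** 2 + (y - ky) ** 2
                heatmap[y][x] = max(heatmap[y][x],
                                    math.exp(-d2 / (2.0 * sigma ** 2)))
    return heatmap


def extract_keypoints(heatmap, threshold=0.5, min_distance=3.0, max_keypoints=4):
    """Greedy peak extraction: visit pixels from highest to lowest value and
    keep a pixel if it clears the threshold and lies at least min_distance
    away from every peak kept so far."""
    candidates = sorted(
        ((value, x, y)
         for y, row in enumerate(heatmap)
         for x, value in enumerate(row)
         if value >= threshold),
        reverse=True,
    )
    peaks = []
    for _, x, y in candidates:
        if all(math.hypot(x - px, y - py) >= min_distance for px, py in peaks):
            peaks.append((x, y))
            if len(peaks) == max_keypoints:
                break
    return peaks


# Usage: generate one synthetic sample and decode its target heatmap again.
rng = random.Random(0)
corners = random_towel_corners(64, rng)
target = gaussian_heatmap(64, corners)
detected = extract_keypoints(target)
print(detected)
```

In a full pipeline one would presumably render a towel image from the same parameters and train the CNN to map that image to `target`; the greedy peak extraction then turns the predicted heatmap into pixel coordinates for grasp planning. Because the labels are derived from the generator's own parameters, no manual annotation step is needed.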

翻译:机械化的布料操纵因其变形性能而具有挑战性,因为它使毛巾的图象无法完全确定。然而,对于布叠,我们只需了解几个语义关键点的位置即可。 革命性神经网络(CNN)可以用来检测这些关键点,但需要大量附加说明的数据,这些数据收集成本很高。 为了克服这一点,我们提议只从合成数据中学习这些关键点探测器,以便收集低成本的数据。在本文中,我们从程序上生成毛巾图像,并用它们来培训CNN。我们评估了这一探测器在单体机器人设置上折叠毛巾的性能,发现握和折叠成功率分别为77%和53%。我们的结论是,从合成数据中学习织布和相关任务的关键点检测器是一个很有希望的研究方向,讨论一些故障,并将它们与未来工作联系起来。一个系统的视频以及代码库、CNN架构的更多细节和训练设置可以在https://github.com/tgips/schemas-chramkeys.