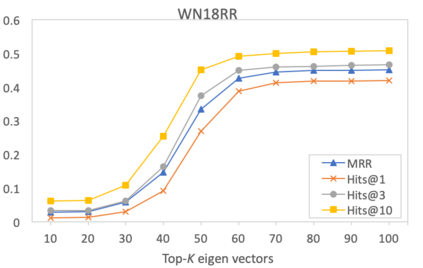

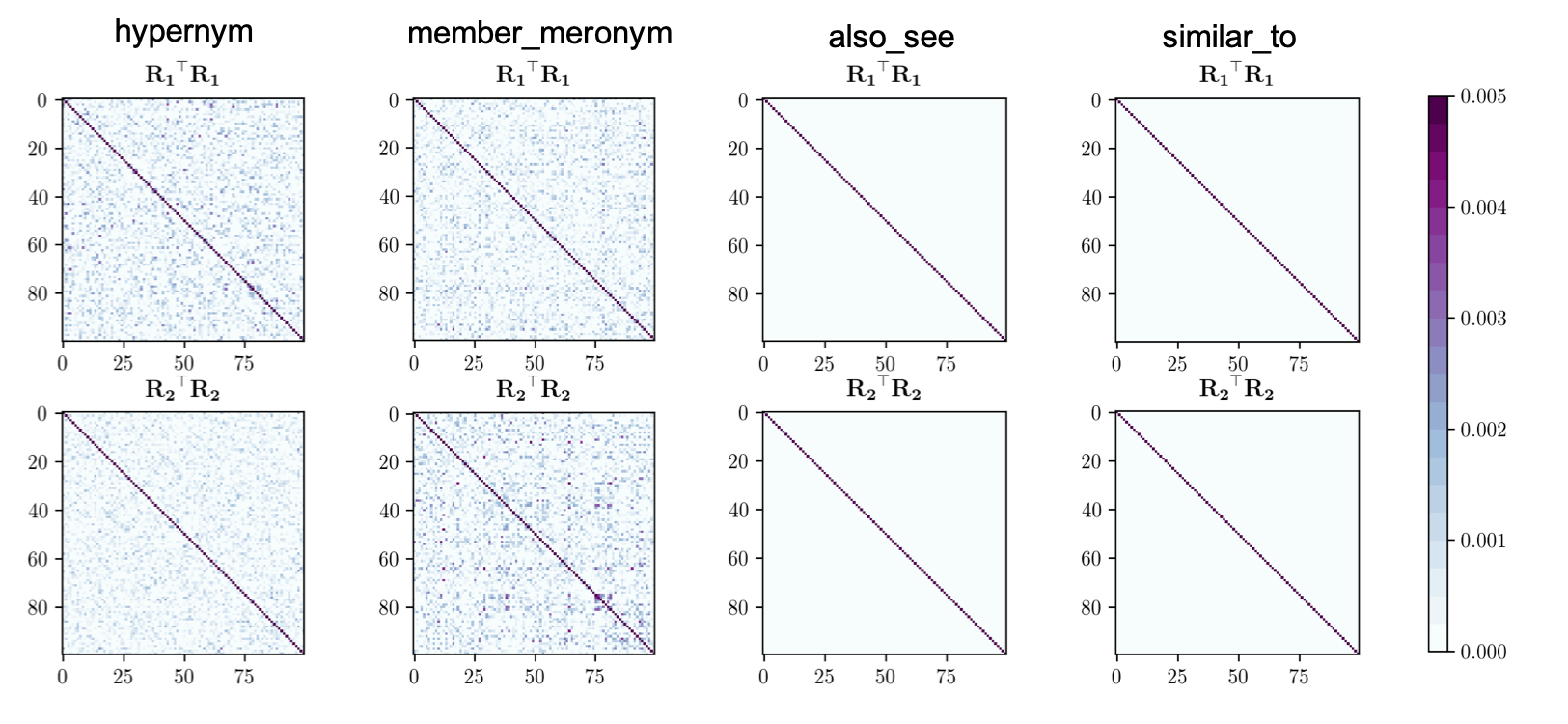

Embedding the entities and relations of a knowledge graph in a low-dimensional space has shown impressive performance in predicting missing links between entities. Although progress has been made, existing methods are heuristically motivated, and the theoretical understanding of such embeddings is comparatively underdeveloped. This paper extends the random walk model of word embeddings (Arora et al., 2016a) to Knowledge Graph Embeddings (KGEs) to derive a scoring function that evaluates the strength of a relation R between two entities h (head) and t (tail). Moreover, we show that marginal loss minimisation, a popular objective used in much prior work on KGE, follows naturally from log-likelihood ratio maximisation under the probabilities estimated from the KGEs according to our theoretical relationship. Motivated by this theoretical analysis, we propose a learning objective for learning KGEs from a given knowledge graph. Using the derived objective, we learn accurate KGEs from the FB15K237 and WN18RR benchmark datasets, providing empirical evidence in support of the theory.
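The link between margin losses and log-likelihood ratios can be illustrated with a minimal sketch. It assumes a simple log-linear model p(x) = exp(f(x)) / Z over candidate triples, which is a simplification and not the random-walk derivation used in the paper. The score function below is a hypothetical DistMult-style placeholder, not the scoring function the paper derives, and the names `score`, `log_prob` and `margin_loss` are illustrative. Because a positive triple and a corrupted negative share the same partition function Z, their log-likelihood ratio reduces to the score difference, so a hinge on that difference acts directly on the log-likelihood ratio.

```python
import numpy as np

# Hypothetical placeholder score: a DistMult-style bilinear form h^T diag(r) t.
# This is NOT the scoring function derived in the paper; any real-valued
# score f(h, R, t) would serve for this illustration.
def score(h: np.ndarray, r: np.ndarray, t: np.ndarray) -> float:
    return float(np.sum(h * r * t))

def log_prob(scores: np.ndarray, i: int) -> float:
    """Log-probability of candidate i under the assumed model p(x) = exp(f(x)) / Z."""
    m = scores.max()
    log_z = m + np.log(np.sum(np.exp(scores - m)))  # numerically stable log-sum-exp
    return float(scores[i] - log_z)

def margin_loss(f_pos: float, f_neg: float, gamma: float = 1.0) -> float:
    """Hinge (margin) loss: zero only if f_pos exceeds f_neg by at least gamma."""
    return max(0.0, gamma - (f_pos - f_neg))

rng = np.random.default_rng(0)
dim = 8
entities = rng.normal(size=(5, dim))  # toy entity embeddings (random, illustrative only)
relation = rng.normal(size=dim)       # toy relation embedding

# Score every (head=entity 0, R, tail) candidate; tail 1 plays the "positive"
# triple and tail 3 a corrupted "negative" triple.
scores = np.array([score(entities[0], relation, e) for e in entities])
pos, neg = 1, 3

# Both triples share Z, so the log-likelihood ratio equals f(pos) - f(neg).
llr = log_prob(scores, pos) - log_prob(scores, neg)
assert np.isclose(llr, scores[pos] - scores[neg])

# Minimising the margin loss therefore pushes the log-likelihood ratio above gamma.
print(llr, margin_loss(scores[pos], scores[neg]))
```

Under these assumptions the margin gamma can be read as a target lower bound on the log-likelihood ratio between a true triple and its corruption.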
