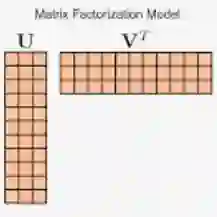

In addition to recent developments in computing speed and memory, methodological advances have contributed to significant gains in the performance of stochastic simulation. In this paper, we focus on variance reduction for matrix computations via matrix factorization. We provide insights into existing variance reduction methods for estimating the entries of large matrices. Popular methods do not exploit the reduction in variance that is possible when the matrix is factorized. We show how computing the square root factorization of the matrix can, in some important cases, achieve arbitrarily better stochastic performance. In addition, we propose a factorized estimator for the trace of a product of matrices and numerically demonstrate that it can be up to 1,000 times more efficient on certain problems of estimating the log-likelihood of a Gaussian process. Finally, we provide a new estimator of the log-determinant of a positive semi-definite matrix, in which the log-determinant is treated as the normalizing constant of a probability density.
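To make the idea of a factorized trace estimator concrete, here is a minimal sketch. It assumes that "factorized" means applying a Hutchinson-style estimator (Rademacher probes z, with E[zᵀMz] = tr(M)) to Lᵀ A L instead of to A B, where B = L Lᵀ is a Cholesky factorization. Both quantities share the same trace, but their off-diagonal entries, and therefore their variances, differ. This is an illustration of the general principle, not necessarily the estimator constructed in the paper. The matrices `A` and `B` and all helper functions are hypothetical and written in pure Python for self-containment.

```python
import math
import random

def matvec(M, v):
    """Dense matrix-vector product for lists of lists."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def matmul(A, B):
    """Dense matrix product A @ B."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def transpose(M):
    return [list(col) for col in zip(*M)]

def cholesky(B):
    """Lower-triangular L with B = L L^T (B must be symmetric positive definite)."""
    n = len(B)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(B[i][i] - s)
            else:
                L[i][j] = (B[i][j] - s) / L[j][j]
    return L

def hutchinson_samples(M, num_samples, rng):
    """Per-probe values z^T M z with Rademacher z; each has expectation tr(M)."""
    n = len(M)
    out = []
    for _ in range(num_samples):
        z = [rng.choice((-1.0, 1.0)) for _ in range(n)]
        out.append(sum(zi * mzi for zi, mzi in zip(z, matvec(M, z))))
    return out

def mean_and_var(xs):
    """Sample mean and unbiased sample variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

# Hypothetical test matrices; tr(A B) = 21.
A = [[2.0, 1.0], [1.0, 3.0]]
B = [[4.0, 2.0], [2.0, 3.0]]  # symmetric positive definite

# Plain estimator: probe the (non-symmetric) product A B directly.
naive = hutchinson_samples(matmul(A, B), 2000, random.Random(0))

# Factorized estimator: tr(A B) = tr(L^T A L) when B = L L^T.
L = cholesky(B)
factored = hutchinson_samples(matmul(transpose(L), matmul(A, L)), 2000, random.Random(0))

naive_mean, naive_var = mean_and_var(naive)
fact_mean, fact_var = mean_and_var(factored)
print("exact trace: 21.0")
print(f"naive:      mean={naive_mean:.3f}  var={naive_var:.1f}")
print(f"factorized: mean={fact_mean:.3f}  var={fact_var:.1f}")
```

For these 2×2 matrices, each probe of A B returns 21 ± 17, while each probe of Lᵀ A L returns 21 ± 10√2, so the factorized variance is smaller by the factor 200/289. The gap in this toy case is modest; the abstract's claim of arbitrarily large gains concerns specific problem classes that this sketch does not attempt to reproduce.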
