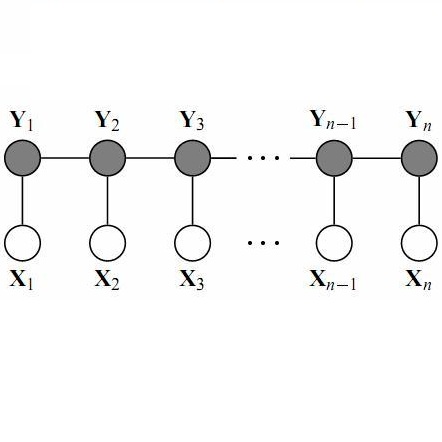

Persuasion aims to shape a person's opinions and actions through a series of persuasive messages that embody the persuader's strategies. Because of its potential application in persuasive dialogue systems, persuasive strategy recognition has recently attracted considerable attention. Previous methods for user intent recognition in dialogue systems use recurrent neural networks (RNNs) or convolutional neural networks (CNNs) to model the context of the conversational history, neglecting the tactic history and intra-speaker relations. In this paper, we demonstrate the limitations of a Transformer-based approach coupled with a Conditional Random Field (CRF) for persuasive strategy recognition. The model leverages inter- and intra-speaker contextual semantic features, as well as label dependencies, to improve recognition. Despite extensive hyperparameter optimization, this architecture fails to outperform the baseline methods. We observe two negative results. First, the CRF cannot capture dependencies between persuasive strategy labels, possibly because strategies in persuasive dialogues do not follow strict grammar or rules, as labels do in Named Entity Recognition (NER) or part-of-speech (POS) tagging. Second, a Transformer encoder trained from scratch is less capable than a Long Short-Term Memory (LSTM) network of capturing sequential information in persuasive dialogues. We attribute this to the vanilla Transformer encoder not efficiently modeling the relative positions of sequence elements.
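
To make "label dependencies" concrete, here is a minimal sketch of standard linear-chain CRF Viterbi decoding, not the authors' implementation. It assumes an encoder (a Transformer in the paper) has already produced one emission score per strategy label for each persuader utterance; the label names, emission scores, and transition matrices below are hypothetical toy values, not taken from the paper or its dataset.

```python
from typing import List, Sequence, Tuple


def viterbi_decode(
    emissions: Sequence[Sequence[float]],
    transitions: Sequence[Sequence[float]],
    start: Sequence[float],
) -> Tuple[List[int], float]:
    """Return the highest-scoring label sequence and its total score.

    emissions[t][j]: encoder score of label j at utterance t (at least one step).
    transitions[i][j]: CRF score for moving from label i to label j.
    start[j]: CRF score for starting the dialogue with label j.
    """
    n_labels = len(start)
    # best[j]: best score of any label path that ends in label j at the current step
    best = [start[j] + emissions[0][j] for j in range(n_labels)]
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_best: List[float] = []
        pointers: List[int] = []
        for j in range(n_labels):
            # previous label that maximizes the path score into label j
            prev = max(range(n_labels), key=lambda i: best[i] + transitions[i][j])
            new_best.append(best[prev] + transitions[prev][j] + emissions[t][j])
            pointers.append(prev)
        best = new_best
        backpointers.append(pointers)
    # start from the best final label and follow the backpointers to the first step
    last = max(range(n_labels), key=lambda j: best[j])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]


# Hypothetical toy example: 3 strategy labels, 3 persuader utterances.
LABELS = ["greeting", "logical-appeal", "donation-info"]
emissions = [
    [2.0, 0.5, 0.1],
    [0.2, 1.0, 1.1],
    [0.1, 0.3, 2.0],
]
start = [0.0, 0.0, 0.0]
flat = [[0.0] * 3 for _ in range(3)]  # constant transitions: no label dependencies
learned = [  # hypothetical transitions favouring greeting -> logical-appeal -> donation-info
    [0.0, 0.5, -2.0],
    [0.0, 0.0, 0.5],
    [0.0, 0.0, 0.0],
]

for name, trans in [("flat", flat), ("learned", learned)]:
    path, score = viterbi_decode(emissions, trans, start)
    print(name, [LABELS[j] for j in path], round(score, 2))
# flat ['greeting', 'donation-info', 'donation-info'] 5.1
# learned ['greeting', 'logical-appeal', 'donation-info'] 6.0
```

With a constant transition matrix, Viterbi decoding reduces to a per-utterance argmax, so a CRF layer can only help when the learned transitions are strongly non-uniform. The first negative result above suggests that persuasive strategy sequences may not provide that kind of regular structure.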
