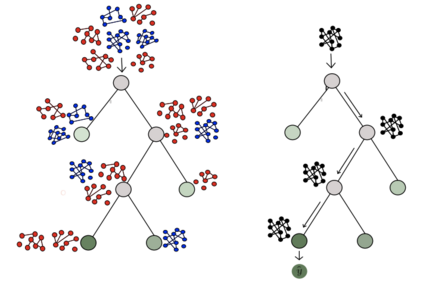

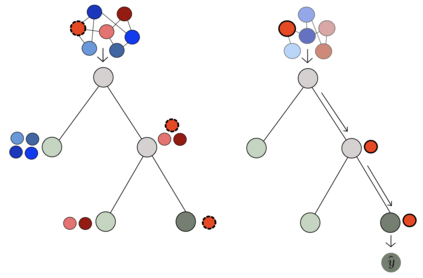

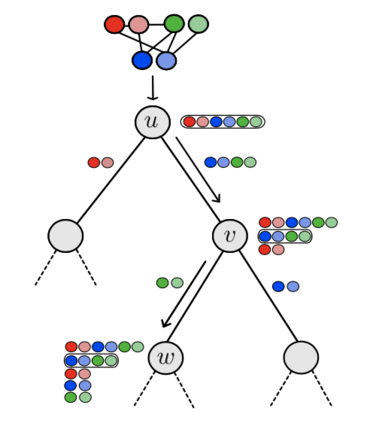

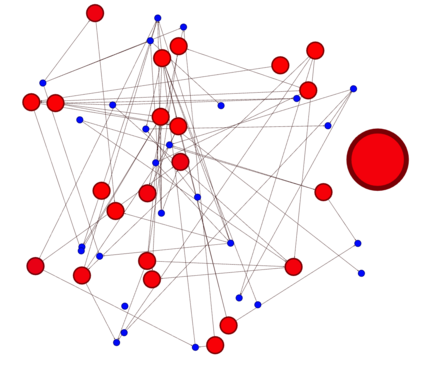

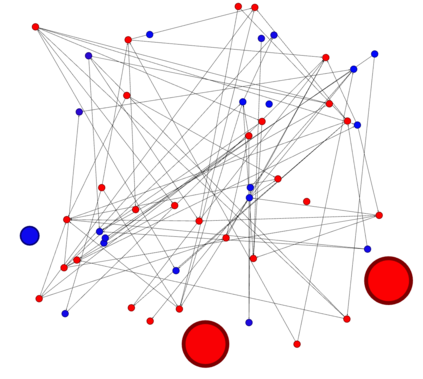

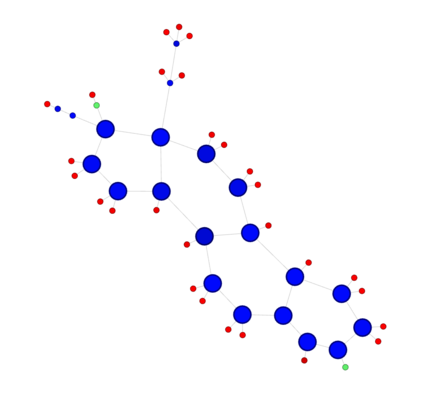

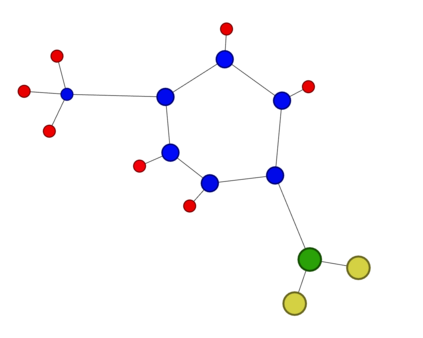

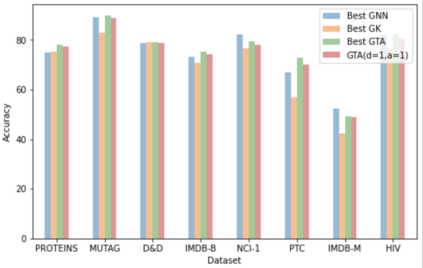

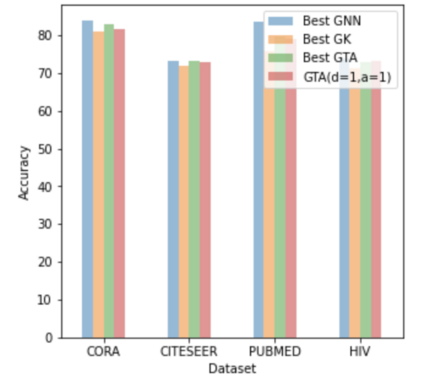

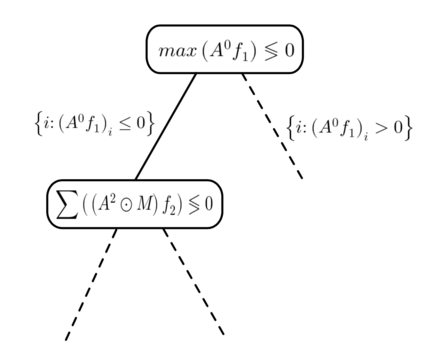

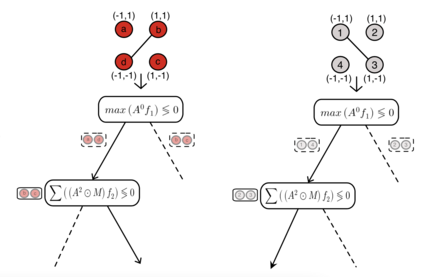

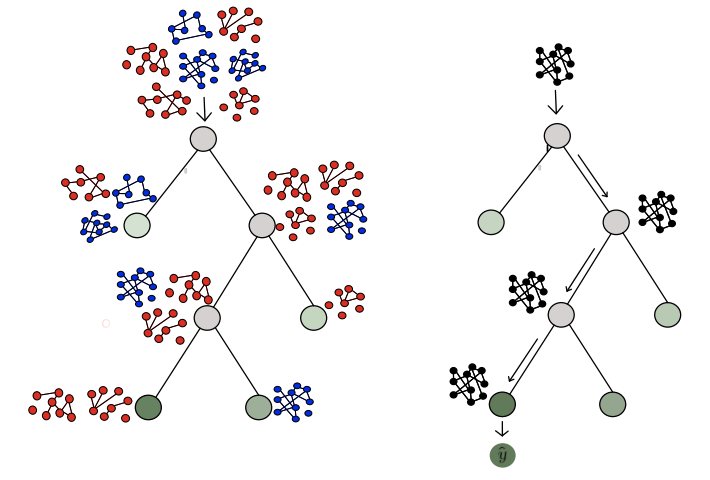

When dealing with tabular data, models based on regression and decision trees are a popular choice due to the high accuracy they provide on such tasks and their ease of application compared with other model classes. Yet, when it comes to graph-structured data, current tree learning algorithms do not provide tools to manage the structure of the data other than relying on feature engineering. In this work we address this gap and introduce Graph Trees with Attention (GTA), a new family of tree-based learning algorithms designed to operate on graphs. GTA leverages both the graph structure and the features at the vertices, and employs an attention mechanism that allows decisions to concentrate on sub-structures of the graph. We analyze GTA models and show that they are strictly more expressive than plain decision trees. We also demonstrate the benefits of GTA empirically on multiple graph and node prediction benchmarks. In these experiments, GTA always outperformed other tree-based models and often outperformed other types of graph-learning algorithms such as Graph Neural Networks (GNNs) and Graph Kernels. Finally, we provide an explainability mechanism for GTA and demonstrate that it can provide intuitive explanations.
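The abstract describes GTA only at a high level. The following is a minimal, hypothetical sketch of what a graph-aware decision node with an attention set *could* look like, assuming that (i) a vertex feature is propagated over walks of a chosen length using the adjacency structure, (ii) the propagated values are aggregated (sum, mean, max or min) over an "attention" subset of vertices and compared with a threshold, and (iii) the attention set handed to a child is narrowed to the vertices on the same side of the threshold. The names `propagate`, `graph_split` and `predict_depth2`, the narrowing rule and the hand-set thresholds are illustrative assumptions, not the GTA algorithm from the paper.

```python
from typing import Callable, Dict, List, Sequence, Set, Tuple

# Aggregations a decision node may choose from (an assumption for this sketch).
AGGREGATORS: Dict[str, Callable[[List[float]], float]] = {
    "sum": sum,
    "mean": lambda vals: sum(vals) / len(vals),
    "max": max,
    "min": min,
}


def propagate(adj: Sequence[Sequence[int]], x: Sequence[float], walk_len: int) -> List[float]:
    """Compute A^k x from an adjacency list: each step sums the values of a vertex's neighbours."""
    values = list(x)
    for _ in range(walk_len):
        values = [sum(values[u] for u in adj[v]) for v in range(len(adj))]
    return values


def graph_split(
    adj: Sequence[Sequence[int]],
    features: Sequence[Sequence[float]],
    attention: Set[int],
    feature: int,
    walk_len: int,
    agg: str,
    threshold: float,
) -> Tuple[bool, Set[int]]:
    """One hypothetical graph-aware decision node.

    Propagates one vertex feature over walks of length `walk_len`, aggregates it over the
    attended vertices and compares the result with `threshold`. The child's attention set
    keeps only the attended vertices on the same side of the threshold, so deeper decisions
    concentrate on a sub-structure of the graph (falling back to the parent's set if empty).
    """
    values = propagate(adj, [f[feature] for f in features], walk_len)
    score = AGGREGATORS[agg]([values[v] for v in sorted(attention)])
    goes_right = score > threshold
    child_attention = {v for v in attention if (values[v] > threshold) == goes_right}
    return goes_right, child_attention or set(attention)


def predict_depth2(adj: Sequence[Sequence[int]], features: Sequence[Sequence[float]]) -> str:
    """A hand-set depth-2 tree built from graph_split (thresholds are illustrative, not learned)."""
    everyone = set(range(len(adj)))
    # Root: does some vertex have a large 1-hop neighbourhood sum of feature 0?
    right, focus = graph_split(adj, features, everyone, feature=0, walk_len=1, agg="max", threshold=1.5)
    if not right:
        return "class A"
    # Second level looks only at the vertices that triggered the root decision.
    right2, _ = graph_split(adj, features, focus, feature=1, walk_len=0, agg="mean", threshold=0.5)
    return "class C" if right2 else "class B"
```

For a star graph, only the centre has a large 1-hop sum, so the root narrows attention to the centre alone and the second decision tests only the centre's second feature: `predict_depth2` returns `"class C"` when that feature is 1 and `"class B"` when it is 0, while a single edge with the same features falls into `"class A"`.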
