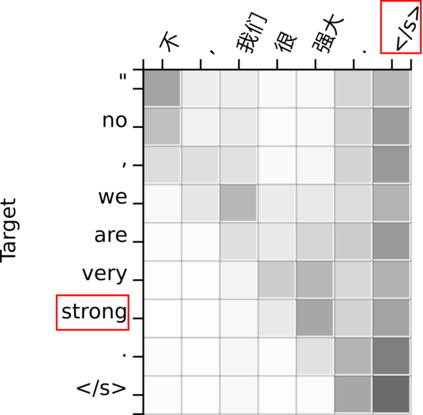

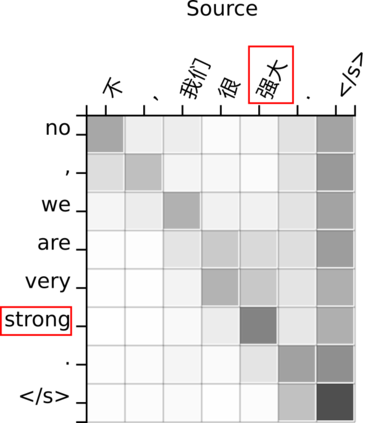

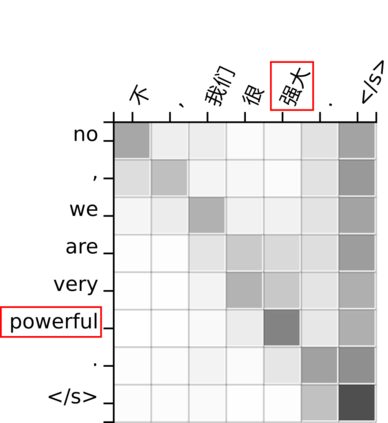

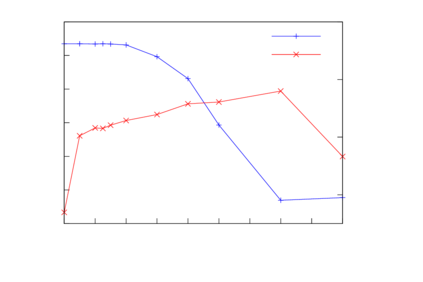

This work investigates the alignment problem in state-of-the-art multi-head attention models based on the transformer architecture. We demonstrate that alignment extraction in transformer models can be improved by augmenting the multi-head source-to-target attention component with an additional alignment head, which is used to compute sharper attention weights. We describe how to use the alignment head to achieve competitive performance. To study the effect of adding the alignment head, we simulate a dictionary-guided translation task, in which the user wants to guide translation using pre-defined dictionary entries. Using the proposed approach, we achieve improvements of up to 3.8% BLEU when using the dictionary, compared to 2.4% BLEU in the baseline case. We also propose alignment pruning for decoding in alignment-based neural machine translation (ANMT), which speeds up translation by a factor of 1.8 without loss in translation performance. We carry out experiments on the WMT 2016 English$\to$Romanian news shared task and the BOLT Chinese$\to$English discussion forum task.
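Dictionary-guided translation depends on reading hard alignments off attention weights. The Python sketch below illustrates that general idea under several assumptions that are not taken from the paper: the alignment head's weights are given as a target-by-source matrix, each target word is aligned to its highest-weighted source word, dictionary entries are applied by a simplified post-hoc substitution, and alignment pruning is approximated by keeping only the top-k source positions. The function names and the toy German$\to$English example are hypothetical; this is not the authors' implementation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def hard_alignment(attention_row):
    """Return the source position with the highest attention weight."""
    return max(range(len(attention_row)), key=lambda j: attention_row[j])


def apply_dictionary(source, target, attention, dictionary):
    """Overwrite target words whose aligned source word has a dictionary entry.

    attention[i][j] is the (assumed) alignment-head weight of target
    position i on source position j. This post-hoc substitution is a
    simplification chosen for illustration only.
    """
    output = list(target)
    for i, row in enumerate(attention):
        src_word = source[hard_alignment(row)]
        if src_word in dictionary:
            output[i] = dictionary[src_word]
    return output


def prune_alignments(attention_row, k):
    """Keep the k most probable source positions (hypothetical pruning rule)."""
    ranked = sorted(range(len(attention_row)),
                    key=lambda j: attention_row[j], reverse=True)
    return sorted(ranked[:k])


# Toy example with hypothetical alignment-head scores (target x source).
source = ["das", "Haus", "ist", "klein"]
draft = ["the", "home", "is", "small"]
scores = [
    [3.0, 0.5, 0.1, 0.2],  # "the"   -> "das"
    [0.2, 4.0, 0.3, 0.1],  # "home"  -> "Haus"
    [0.1, 0.3, 2.5, 0.4],  # "is"    -> "ist"
    [0.0, 0.2, 0.6, 3.1],  # "small" -> "klein"
]
attention = [softmax(row) for row in scores]

print(apply_dictionary(source, draft, attention, {"Haus": "house"}))
# ['the', 'house', 'is', 'small']
print(prune_alignments(attention[1], k=2))
# [1, 2]
```

The sharper the alignment head's weights, the more reliably the argmax points at the intended source word, which is what makes the dictionary override trustworthy. Pruning to a small k limits how many source positions a decoder would need to consider per target step.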
