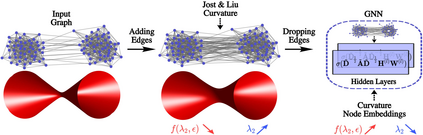

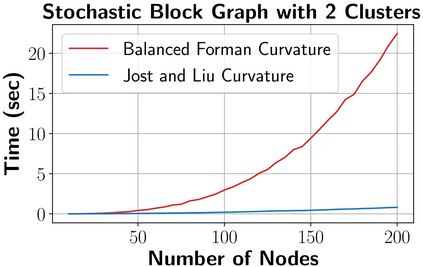

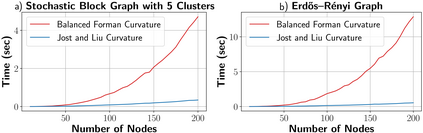

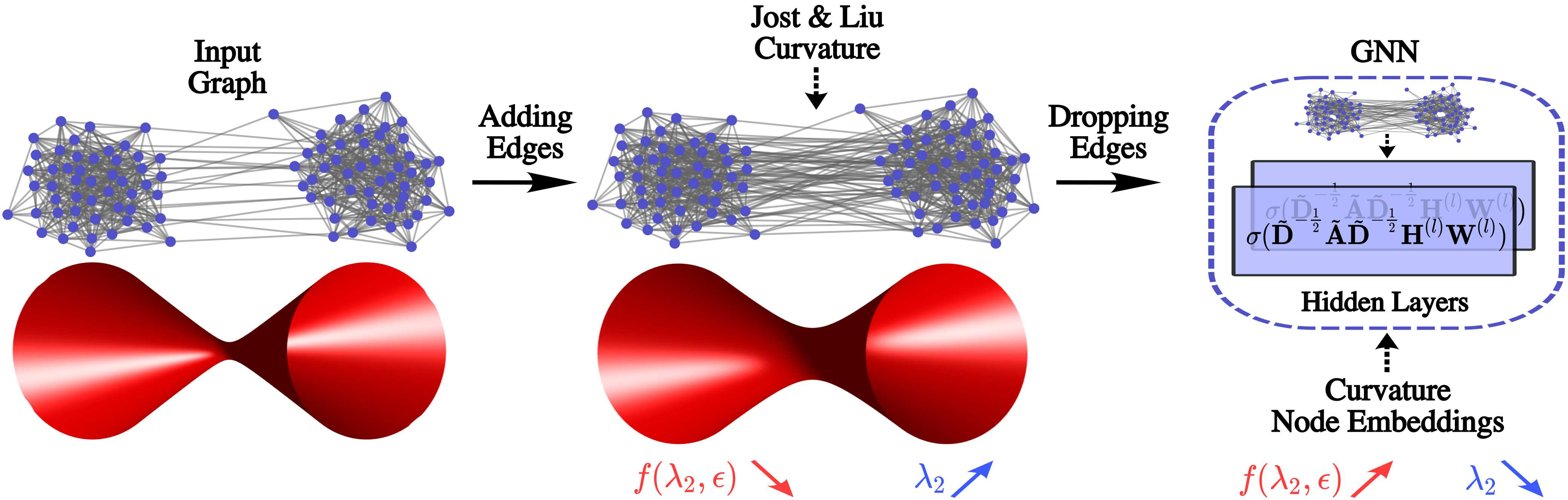

Graph Neural Networks (GNNs) have been successfully applied in many areas of computer science. Despite the success of deep learning architectures in other domains, deep GNNs still underperform their shallow counterparts. Many questions about deep GNNs remain open, and over-smoothing and over-squashing are perhaps the most intriguing of them. Both problems arise when multiple graph convolutional layers are stacked: over-smoothing has been defined as the inability of GNNs to learn deep representations, and over-squashing as their inability to propagate information from distant nodes. Even though the widespread definitions of the two problems are similar, the phenomena have been studied independently. This work strives to understand the underlying relationship between over-smoothing and over-squashing from a topological perspective. We show that both problems are intrinsically related to the spectral gap of the graph Laplacian. As a consequence, there is a trade-off between the two problems, i.e., we cannot simultaneously alleviate both over-smoothing and over-squashing. We also propose a Stochastic Jost and Liu curvature Rewiring (SJLR) algorithm based on a bound of Ollivier's Ricci curvature. SJLR is less expensive than previous curvature-based rewiring methods while retaining fundamental properties. Finally, we perform a thorough comparison of SJLR with previous techniques for alleviating over-smoothing or over-squashing, seeking to gain a better understanding of both problems.
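To make the central quantity concrete, the following is a minimal sketch of how the spectral gap of a graph Laplacian can be computed. It is not the paper's code. It assumes the symmetric normalized Laplacian $L = I - D^{-1/2} A D^{-1/2}$ and takes the spectral gap to be its second-smallest eigenvalue; the abstract does not say which Laplacian variant is used. The function names `spectral_gap`, `complete_graph`, and `barbell_graph` are hypothetical helpers introduced only for this illustration.

```python
import numpy as np


def spectral_gap(adjacency: np.ndarray) -> float:
    """Second-smallest eigenvalue of the symmetric normalized Laplacian.

    Assumes an undirected graph with no isolated nodes (every degree > 0).
    """
    a = np.asarray(adjacency, dtype=float)
    degrees = a.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degrees)
    # L = I - D^{-1/2} A D^{-1/2}
    laplacian = np.eye(len(a)) - d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]
    # eigvalsh returns the eigenvalues of a symmetric matrix in ascending order.
    eigenvalues = np.linalg.eigvalsh(laplacian)
    return float(eigenvalues[1])


def complete_graph(n: int) -> np.ndarray:
    """Adjacency matrix of the complete graph on n nodes."""
    return np.ones((n, n)) - np.eye(n)


def barbell_graph(k: int) -> np.ndarray:
    """Two k-cliques joined by a single bridge edge (a strong bottleneck)."""
    a = np.zeros((2 * k, 2 * k))
    a[:k, :k] = 1.0
    a[k:, k:] = 1.0
    np.fill_diagonal(a, 0.0)
    a[k - 1, k] = a[k, k - 1] = 1.0  # the bridge between the two cliques
    return a


if __name__ == "__main__":
    print("complete graph, 10 nodes:", spectral_gap(complete_graph(10)))
    print("barbell graph, 2 x 5 nodes:", spectral_gap(barbell_graph(5)))
```

In standard spectral graph theory, a small gap indicates a bottleneck (as in the barbell graph) and a large gap indicates a well-connected graph in which diffusion mixes quickly. Read that way, rewiring a graph to change its spectral gap moves it toward one regime and away from the other, which is one way to picture the trade-off stated above.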
