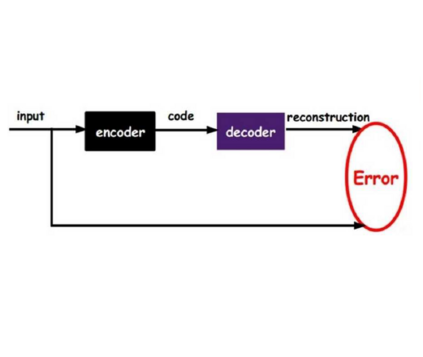

Variational inference (VI) plays an essential role in approximate Bayesian inference due to its computational efficiency and broad applicability. Crucial to the performance of VI is the choice of divergence measure, since VI approximates the intractable target distribution by minimizing this divergence. In this paper we propose a meta-learning algorithm that learns a divergence measure suited to the task of interest, automating the design of VI methods. In addition, when our method is deployed in few-shot learning scenarios, it learns the initialization of the variational parameters at no additional cost. We demonstrate that our approach outperforms standard VI on Gaussian mixture distribution approximation, Bayesian neural network regression, image generation with variational autoencoders, and recommender systems with a partial variational autoencoder.
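
The abstract does not specify which family of divergences is meta-learned. As a minimal sketch of why the choice matters, the block below assumes the Rényi α-divergence family and the variational Rényi bound of Li and Turner (2016), a swapped-in illustration rather than the paper's method, with α as the single knob a meta-learner could tune. The toy target (an unnormalized mixture of two unit-variance Gaussians at ±4), the two candidate Gaussians `mode_q` and `broad_q`, and the function names `log_target`, `log_normal`, and `renyi_bound` are all hypothetical. Integrals are computed by quadrature on a fixed grid instead of Monte Carlo so that the comparison is deterministic.

```python
import math

# Hypothetical toy target: unnormalized equal-weight mixture of two
# unit-variance Gaussians centred at -4 and +4 (illustrative assumption).
# Its normalizing constant is Z = 2 * sqrt(2 * pi).
def log_target(z):
    a = -0.5 * (z - 4.0) ** 2
    b = -0.5 * (z + 4.0) ** 2
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))  # stable log(e^a + e^b)


def log_normal(z, mu, sigma):
    """Log density of the Gaussian variational family q(z) = N(mu, sigma^2)."""
    return -0.5 * ((z - mu) / sigma) ** 2 - math.log(sigma) - 0.5 * math.log(2.0 * math.pi)


# Fixed quadrature grid on [-30, 30]; every density used here is negligible outside it.
GRID_LO, GRID_HI, GRID_N = -30.0, 30.0, 30001
DZ = (GRID_HI - GRID_LO) / (GRID_N - 1)
ZS = [GRID_LO + i * DZ for i in range(GRID_N)]


def renyi_bound(mu, sigma, alpha):
    """Variational Renyi bound L_alpha(q) = log Z - D_alpha(q || p), by quadrature.

    L_alpha = 1 / (1 - alpha) * log  integral of q(z)^alpha * p~(z)^(1 - alpha) dz.
    The alpha -> 1 limit is the standard ELBO, E_q[log p~(z) - log q(z)],
    and alpha = 0 gives log Z for any q with full support.
    """
    if abs(alpha - 1.0) < 1e-12:
        # ELBO branch: Riemann sum of q(z) * (log p~(z) - log q(z)).
        total = 0.0
        for z in ZS:
            lq = log_normal(z, mu, sigma)
            total += math.exp(lq) * (log_target(z) - lq)
        return total * DZ
    # General branch: log of the integral via log-sum-exp for numerical stability.
    g = [alpha * log_normal(z, mu, sigma) + (1.0 - alpha) * log_target(z) for z in ZS]
    m = max(g)
    log_integral = m + math.log(sum(math.exp(x - m) for x in g)) + math.log(DZ)
    return log_integral / (1.0 - alpha)


# Two hypothetical candidate approximations (mu, sigma).
mode_q = (4.0, 1.0)   # sits on a single mode (mode-seeking)
broad_q = (0.0, 4.0)  # spreads over both modes (mass-covering)

for alpha in (1.0, 0.5):
    print(f"alpha={alpha}: mode_q={renyi_bound(*mode_q, alpha):.3f}, "
          f"broad_q={renyi_bound(*broad_q, alpha):.3f}")
```

Under α = 1 (the KL-based ELBO) the single-mode candidate scores higher, while under α = 0.5 the broad candidate covering both modes scores higher; at α = 0 the bound equals log Z for every Gaussian q, so it no longer ranks candidates at all. In this toy setting, learning the divergence amounts to choosing where on this spectrum a given task should sit.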

翻译:由于计算效率和广泛适用性,变式推论(VI)在近似贝耶斯推论中起着关键作用。 VI的性能关键在于选择相关的差异度量,因为VI通过尽量减少这种差异,接近棘手的分布。在本文中,我们提出了一个元化学习算法,以学习适合感兴趣任务的差异度量,使六种方法的设计自动化。此外,当我们的方法被运用在微小的学习假想中时,我们学会了在不增加费用的情况下启动变式参数。我们展示了我们的方法优于高山混合物分布近似、巴耶斯神经网络回归、与变异自动编码器成像和带有部分变异自动编码器的建议系统等六标准。