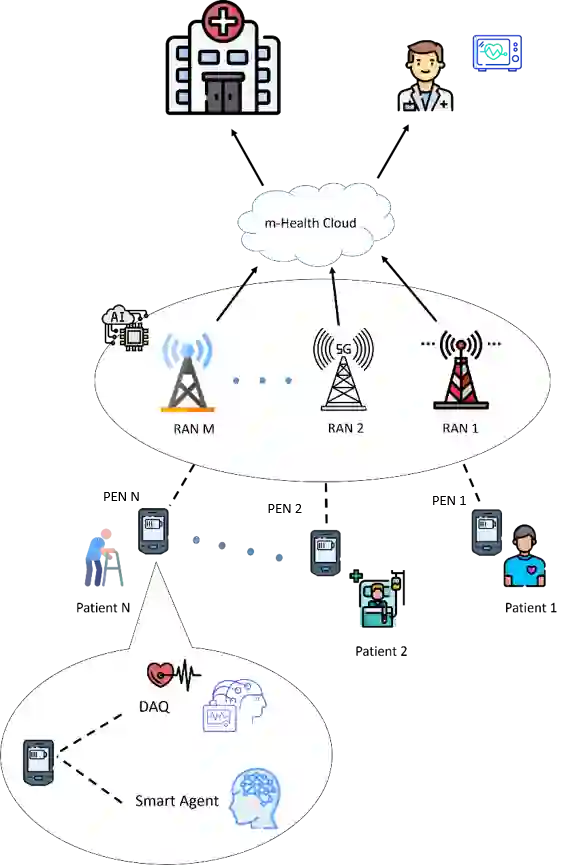

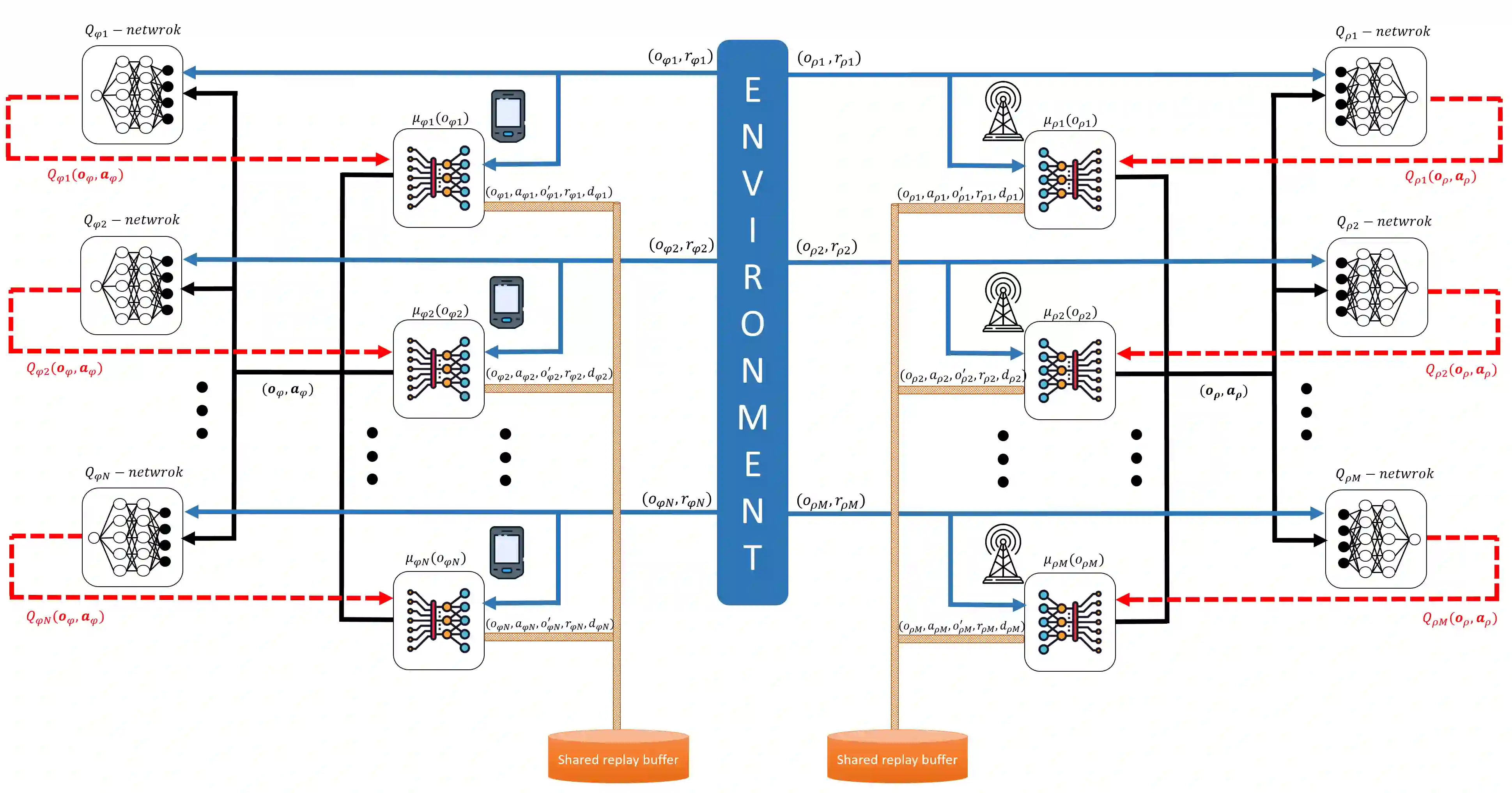

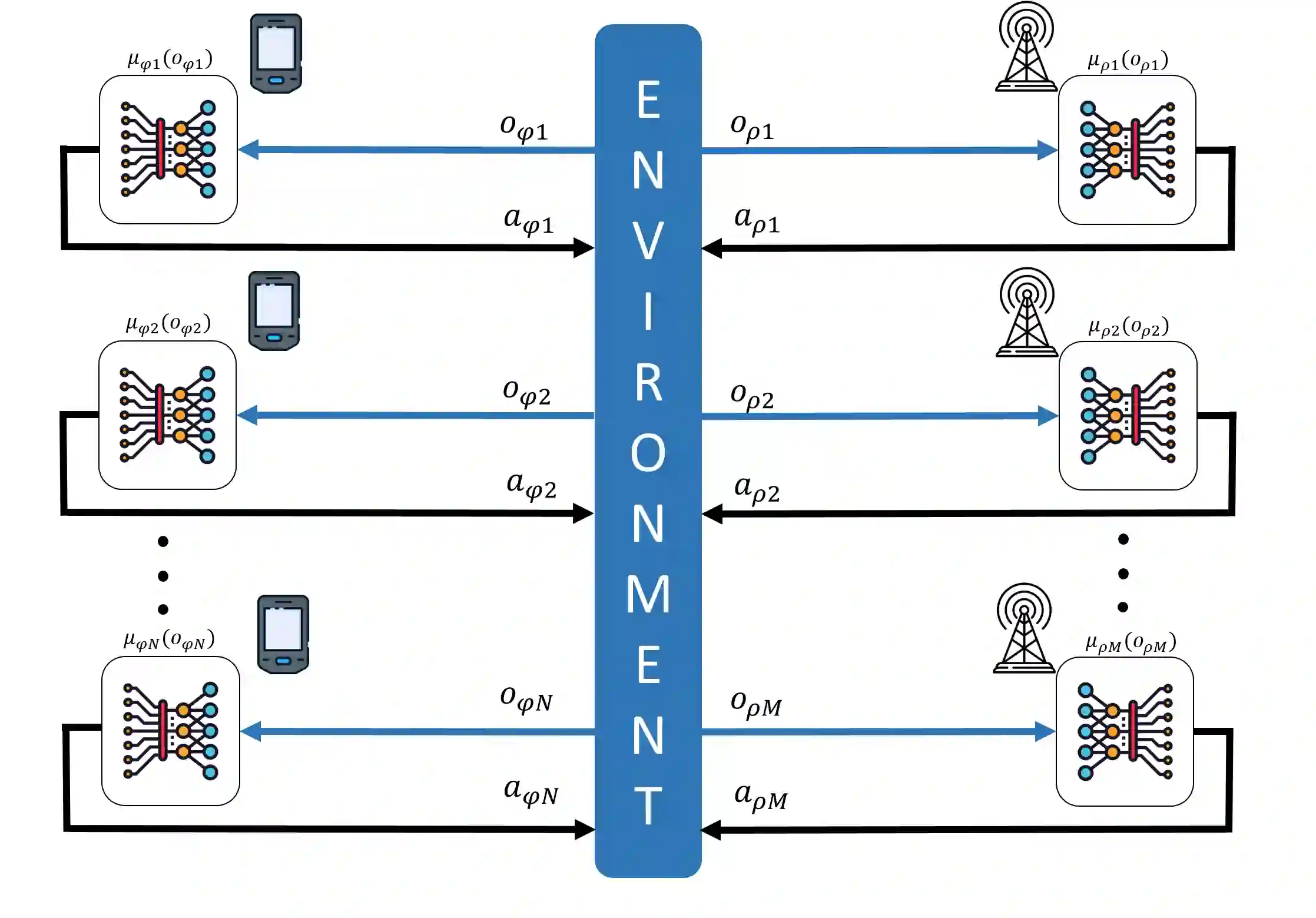

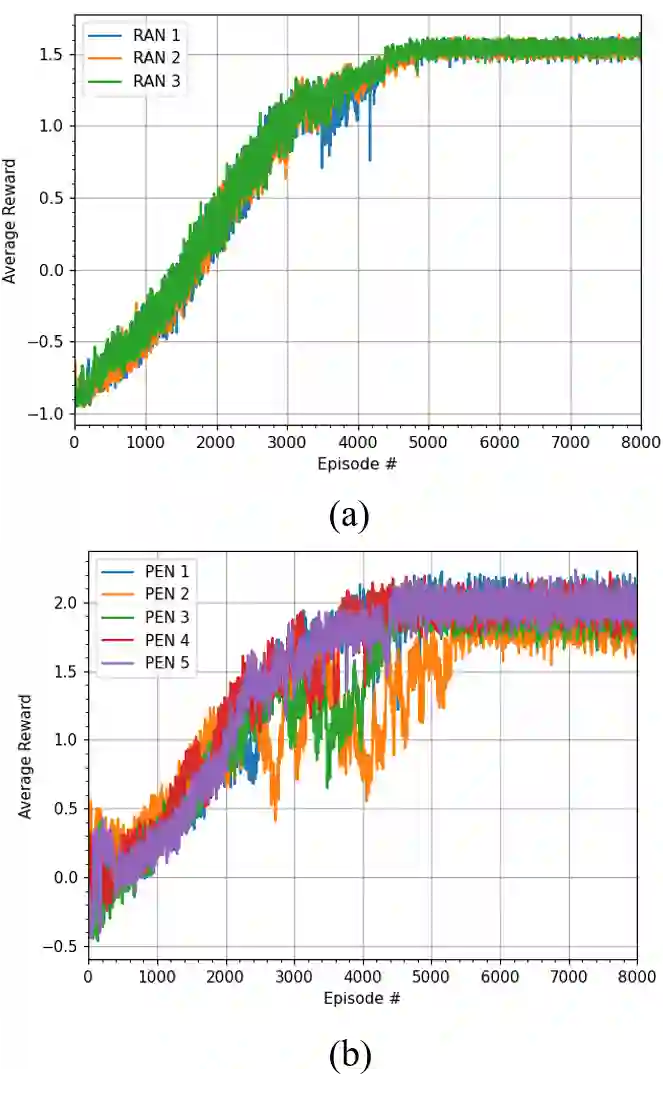

The rapid proliferation of mobile devices and the boom in wireless applications continue to drive up demand on wireless networks. This motivates exploiting the wireless spectrum through multiple Radio Access Technologies (multi-RAT) and developing innovative network selection techniques that cope with this demand while improving Quality of Service (QoS). We therefore propose a distributed framework for dynamic network selection at the edge level and resource allocation at the Radio Access Network (RAN) level that takes the characteristics of diverse applications into account. In particular, our framework employs a deep Multi-Agent Reinforcement Learning (DMARL) algorithm that aims to maximize the edge nodes' quality of experience while extending their battery lifetime and leveraging adaptive compression schemes. The framework thus enables data transfer from the network's multi-RAT-capable edge nodes to the cloud in a cost- and energy-efficient manner, while maintaining the QoS requirements of the different supported applications. Our results show that our solution outperforms state-of-the-art network selection techniques in terms of energy consumption, latency, and cost.
