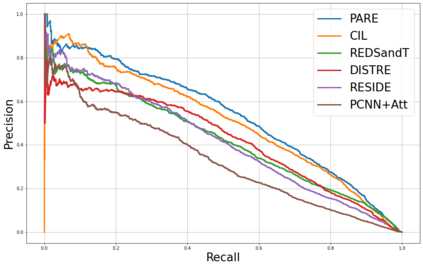

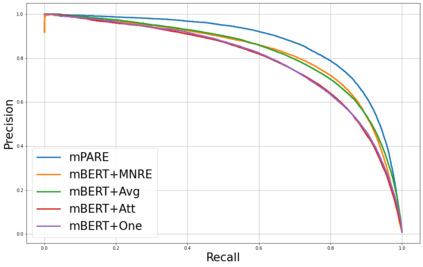

Neural models for distantly supervised relation extraction (DS-RE) encode each sentence in an entity-pair bag separately and then aggregate these encodings for bag-level relation prediction. At encoding time, these approaches do not let information flow from the other sentences in the bag. We therefore believe that they do not make full use of the available bag data. In response, we explore a simple baseline approach, PARE, in which all sentences of a bag are concatenated into a passage and encoded jointly using BERT. The contextual embeddings of the passage tokens are aggregated using attention with the candidate relation as the query, and this summary of the whole passage is used to predict the candidate relation. We find that our simple baseline outperforms existing state-of-the-art DS-RE models on both monolingual and multilingual DS-RE datasets.
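
The bag-to-passage encoding and relation-query attention described above can be illustrated with a minimal sketch. It is not the authors' implementation and rests on several assumptions. The BERT encoder is replaced by toy token vectors. Sentences are joined with a hypothetical `[SEP]` separator. Attention uses plain dot-product scores against a candidate-relation embedding. The pooled summary is scored with a per-relation sigmoid classifier. All function names (`build_passage`, `relation_attention_summary`, `relation_probability`) are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def build_passage(bag: List[str], sep: str = " [SEP] ") -> str:
    # Concatenate every sentence of an entity-pair bag into one passage so a
    # single encoder pass can attend across sentences.
    # The "[SEP]" separator is an assumption for illustration.
    return sep.join(bag)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def relation_attention_summary(tokens: List[Vector], relation_query: Vector) -> Vector:
    # One attention score per passage token, using the candidate relation
    # embedding as the query (dot-product scoring is an assumption).
    weights = softmax([dot(t, relation_query) for t in tokens])
    dim = len(relation_query)
    # Weighted sum of token embeddings = summary of the whole passage.
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(dim)]


def relation_probability(
    tokens: List[Vector],
    relation_query: Vector,
    classifier_weights: Vector,
    bias: float = 0.0,
) -> float:
    # Score the passage summary for this one candidate relation with a
    # hypothetical linear layer followed by a sigmoid.
    summary = relation_attention_summary(tokens, relation_query)
    logit = dot(summary, classifier_weights) + bias
    return 1.0 / (1.0 + math.exp(-logit))


# Usage with toy data: the 2-d vectors stand in for BERT's contextual
# embeddings of the passage tokens.
bag = ["Obama was born in Hawaii.", "Obama visited Hawaii in 2010."]
passage = build_passage(bag)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
p = relation_probability(tokens, relation_query=[1.0, 0.0], classifier_weights=[2.0, -1.0])
print(passage)
print(round(p, 3))
```

Because the relation embedding acts as the query, each candidate relation gets its own weighting of the passage tokens. Tokens from any sentence in the bag can therefore contribute to the prediction for that relation.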

翻译:远程监控关系提取(DS-RE)的神经模型将每个句子分别编码在实体-空包中,然后将它们汇总为包级关系预测。由于在编码时,这些方法不允许信息从包中的其他句子中流出,我们认为它们并不最充分地利用现有的包数据。作为回应,我们探索了一种简单的基线方法(PARE ), 将包中的所有句子合并成一个句子, 并使用BERT 进行联合编码。 符号的上下文嵌入会集是在与候选人关系作为查询的注意下, 与候选人关系一起进行汇总的 -- -- 整个段落摘要预测了候选关系。 我们发现,我们简单的基线解决方案在单语言和多语言的DS-RE数据集中都比现有最先进的DS-RE模型要好。