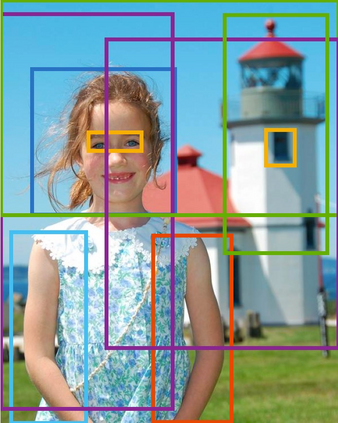

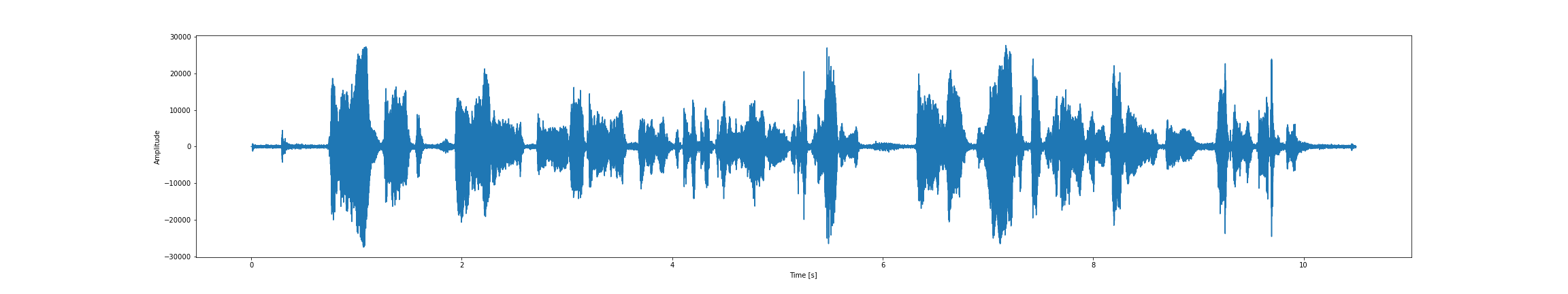

We present the Fast-Slow Transformer for Visually Grounding Speech (FaST-VGS), a Transformer-based model that learns associations between raw speech waveforms and visual images. FaST-VGS unifies the dual-encoder and cross-attention architectures in a single model. It combines the fast retrieval of the former with the higher accuracy of the latter. FaST-VGS achieves state-of-the-art speech-image retrieval accuracy on benchmark datasets, and its learned representations perform strongly on the ZeroSpeech 2021 phonetic and semantic tasks.
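The abstract does not say how the two architectures are combined at inference time. One common way to get dual-encoder speed with cross-attention accuracy is retrieve-then-rerank. A cheap dot product over precomputed image embeddings builds a shortlist, and only that shortlist is rescored by the expensive model. The sketch below illustrates that general pattern under this assumption. The names `fast_slow_retrieve` and `slow_score` are hypothetical, the toy vectors are made up, and `slow_score` is a stand-in for a cross-attention scorer, not the FaST-VGS model itself.

```python
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Similarity used by the fast (dual-encoder) stage."""
    return sum(x * y for x, y in zip(a, b))


def fast_slow_retrieve(
    query_emb: Vector,
    image_embs: List[Vector],
    slow_score: Callable[[int], float],
    k: int = 10,
) -> List[Tuple[int, float]]:
    """Hypothetical two-stage speech-to-image retrieval sketch.

    Stage 1 (fast): score every image by dot product with the speech
    query embedding. Image embeddings can be precomputed offline.
    Stage 2 (slow): rescore only the top-k candidates with a more
    expensive scorer (a stand-in for a cross-attention model).
    Returns (image_index, slow_score) pairs, best first.
    """
    fast = [(i, dot(query_emb, e)) for i, e in enumerate(image_embs)]
    fast.sort(key=lambda p: p[1], reverse=True)
    shortlist = [i for i, _ in fast[:k]]
    reranked = [(i, slow_score(i)) for i in shortlist]
    reranked.sort(key=lambda p: p[1], reverse=True)
    return reranked


# Toy example: made-up embeddings and made-up "slow" scores.
query = [1.0, 0.0]
images = [[0.9, 0.1], [0.1, 0.9], [0.8, 0.3], [-1.0, 0.0]]
slow = {0: 0.2, 1: 0.5, 2: 0.9, 3: 0.0}

result = fast_slow_retrieve(query, images, slow_score=slow.__getitem__, k=2)
print(result)  # [(2, 0.9), (0, 0.2)]
```

With `k=2`, image 1 is never rescored because its fast score puts it outside the shortlist. The slow model's cost grows with `k` rather than with the size of the image collection, and `k` trades speed for the chance of missing a candidate that the slow scorer would have ranked highly.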
