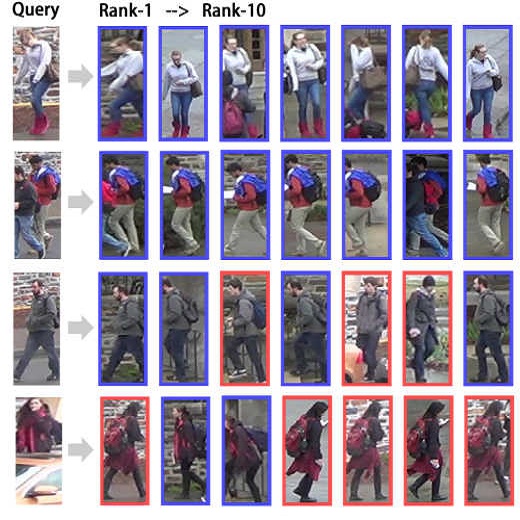

The task of person re-identification (ReID) is to match images of the same person across multiple non-overlapping camera views. Because of variations in visual factors, previous works have investigated how person identity, body parts, and attributes benefit the ReID problem. However, the correlations among attributes and body parts, as well as within each attribute, are not fully exploited. In this paper, we propose a new method that effectively aggregates detailed person descriptions (attribute labels) and visual features (body-part and global features) into a graph, named Graph-based Person Signature, and uses Graph Convolutional Networks to learn the topological structure of a person's visual signature. The graph is integrated into a multi-branch, multi-task framework for person re-identification. Extensive experiments on two large-scale datasets, Market-1501 and DukeMTMC-ReID, demonstrate the effectiveness of the proposed approach. Our approach achieves results competitive with the state of the art and outperforms other attribute-based and mask-guided methods.
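To make the graph idea concrete, the sketch below applies one graph-convolution layer using the common normalized propagation rule H' = ReLU(D^{-1/2}(A + I)D^{-1/2} H W). The abstract does not specify the graph construction or layer details. The node set (a global node, two body-part nodes, one attribute node), the edges, the feature values, and the weight matrix are all hypothetical, chosen only for illustration, and may not match the authors' method.

```python
import math

# Hypothetical node order: 0 = global feature, 1 = upper-body part,
# 2 = lower-body part, 3 = attribute "carrying backpack".
NODES = ["global", "upper_body", "lower_body", "attr_backpack"]

# Hypothetical undirected edges (1 = connected); the real graph may differ.
ADJ = [
    [0, 1, 1, 0],  # global <-> both body parts
    [1, 0, 0, 1],  # upper body <-> backpack attribute
    [1, 0, 0, 0],  # lower body <-> global
    [0, 1, 0, 0],  # backpack attribute <-> upper body
]


def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} as nested lists."""
    n = len(adj)
    # Self-loops let each node keep its own feature during propagation.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    # Degrees in the self-looped graph are always >= 1, so no division by zero.
    inv_sqrt = [1.0 / math.sqrt(sum(row)) for row in a_hat]
    return [[inv_sqrt[i] * a_hat[i][j] * inv_sqrt[j] for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Plain nested-list matrix product x @ y."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def gcn_layer(adj_norm, h, w):
    """One graph convolution: ReLU(A_norm @ H @ W)."""
    out = matmul(matmul(adj_norm, h), w)
    return [[max(0.0, v) for v in row] for row in out]


# Toy 2-dimensional node features and an identity weight matrix (both made up).
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]
W = [[1.0, 0.0], [0.0, 1.0]]

A_NORM = normalize_adjacency(ADJ)
OUT = gcn_layer(A_NORM, H, W)
for name, row in zip(NODES, OUT):
    print(name, [round(v, 3) for v in row])
```

After one layer, each node's feature mixes in its neighbours' features, so the attribute node is pulled toward the upper-body part it is linked to. Stacking layers spreads information further across the graph. A practical implementation would use a tensor library and learn W by backpropagation.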
