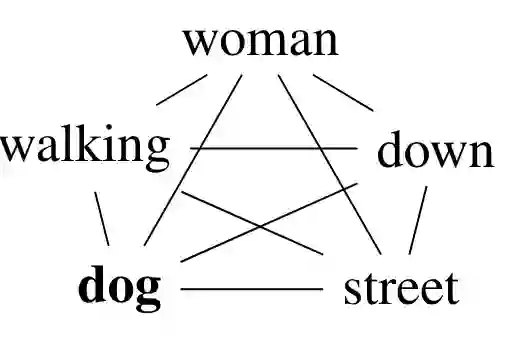

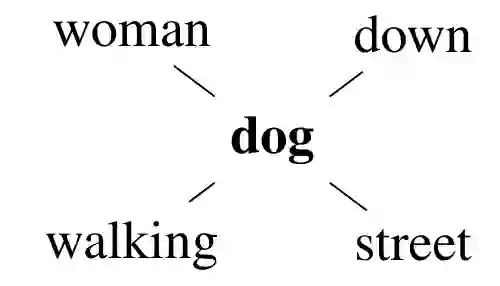

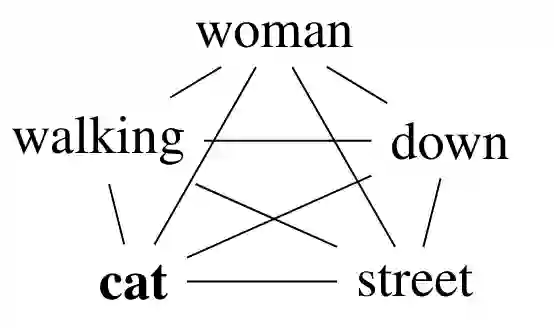

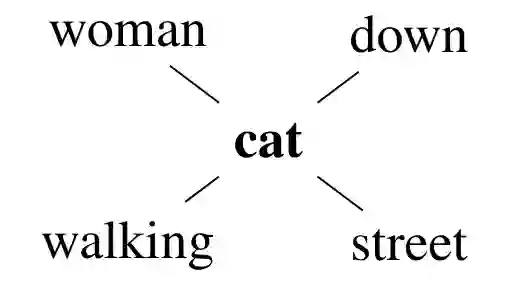

Evaluating the quality of generated text is difficult because traditional NLG evaluation metrics focus more on surface form than on meaning and therefore often fail to assign appropriate scores. This is especially problematic for AMR-to-text evaluation, given the abstract nature of AMR. Our work aims to support the development and improvement of meaning-focused NLG evaluation metrics by developing a dynamic CheckList for NLG metrics that is interpreted, in the sense that it is organized around meaning-relevant linguistic phenomena. Each test instance consists of a pair of sentences with their AMR graphs and a human-produced textual semantic similarity or relatedness score. Our CheckList facilitates comparative evaluation of metrics and reveals strengths and weaknesses of novel and traditional metrics. We demonstrate the usefulness of the CheckList by designing a new metric, GraCo, that computes lexical cohesion graphs over AMR concepts. Our analysis suggests that GraCo is an interesting NLG metric worth future investigation and that meaning-oriented NLG metrics can profit from graph-based metric components using AMR.
