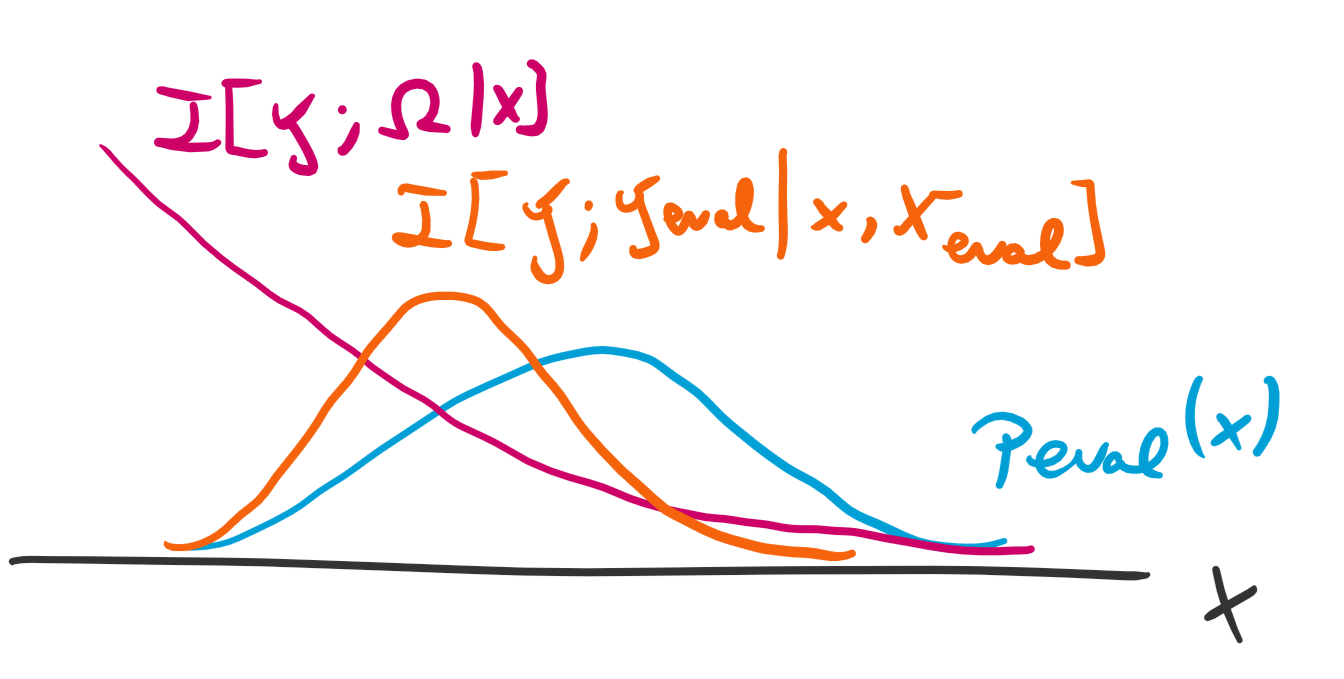

Active learning is essential for label-efficient deep learning. Bayesian active learning has focused on BALD, which selects samples that reduce uncertainty about the model parameters. However, we show that BALD gets stuck on out-of-distribution or junk data that is irrelevant to the task. We examine a novel acquisition function, the *Expected Predictive Information Gain (EPIG)*, to handle distribution shift in the pool set. EPIG reduces the uncertainty of *predictions* on an unlabelled *evaluation set* sampled from the test data distribution, which may differ from the pool set distribution. Building on this, our new EPIG-BALD acquisition function for Bayesian neural networks selects samples that improve performance on the test data distribution. It does not select samples that reduce model uncertainty everywhere, including in out-of-distribution regions that have low density under the test data distribution. Our method outperforms state-of-the-art Bayesian active learning methods on high-dimensional datasets and avoids out-of-distribution junk data in cases where current state-of-the-art methods fail.
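To make the BALD/EPIG contrast concrete, here is a minimal sketch of the two scores. It is not the authors' EPIG-BALD implementation. It assumes a classifier whose posterior is approximated by K parameter samples θ_k (for example MC dropout or an ensemble). It also assumes the standard mutual-information forms BALD(x) = I[y; θ | x] and EPIG(x) = E_{x*}[ I[y; y* | x, x*] ], with x* drawn from the unlabelled evaluation set. The function names, the `Probs` layout, and the toy probabilities are hypothetical and chosen only for illustration.

```python
import math
from typing import List, Sequence

# Hypothetical layout: probs[k][c] = p(y = c | x, theta_k) for K posterior
# parameter samples theta_k (e.g. MC dropout masks or ensemble members).
Probs = List[List[float]]


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in nats, skipping zero-probability classes."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)


def bald(cand: Probs) -> float:
    """BALD: I[y; theta | x] = H[mean_k p_k] - mean_k H[p_k]."""
    k = len(cand)
    mean = [sum(p[c] for p in cand) / k for c in range(len(cand[0]))]
    return entropy(mean) - sum(entropy(p) for p in cand) / k


def epig(cand: Probs, eval_set: List[Probs]) -> float:
    """EPIG: average over evaluation inputs x* of I[y; y* | x, x*].

    Only model predictions at each x* are needed, never labels. The candidate
    and every x* must be evaluated under the same K posterior samples.
    """
    k = len(cand)
    n_y = len(cand[0])
    total = 0.0
    for target in eval_set:
        n_t = len(target[0])
        # Joint predictive p(y, y*) ~= (1/K) sum_k p(y|x,theta_k) p(y*|x*,theta_k).
        joint = [[sum(cand[j][a] * target[j][b] for j in range(k)) / k
                  for b in range(n_t)] for a in range(n_y)]
        p_y = [sum(joint[a]) for a in range(n_y)]
        p_t = [sum(joint[a][b] for a in range(n_y)) for b in range(n_t)]
        # Mutual information between the candidate label y and the prediction y*.
        total += sum(joint[a][b] * math.log(joint[a][b] / (p_y[a] * p_t[b]))
                     for a in range(n_y) for b in range(n_t) if joint[a][b] > 0.0)
    # Mutual information is non-negative; clamp tiny negative round-off.
    return max(0.0, total / len(eval_set))


# Hypothetical toy posterior: 4 equally weighted samples theta = (t1, t2),
# covering every combination of two independent binary factors t1, t2.
# Predictions at the test input x* depend only on t1; the relevant candidate
# also depends on t1, while the "junk" candidate depends only on t2.
probs_test = [[0.9, 0.1], [0.9, 0.1], [0.1, 0.9], [0.1, 0.9]]
probs_relevant = [[0.9, 0.1], [0.9, 0.1], [0.1, 0.9], [0.1, 0.9]]
probs_junk = [[0.9, 0.1], [0.1, 0.9], [0.9, 0.1], [0.1, 0.9]]
eval_set = [probs_test]

print(f"BALD  relevant={bald(probs_relevant):.3f}  junk={bald(probs_junk):.3f}")
print(f"EPIG  relevant={epig(probs_relevant, eval_set):.3f}  "
      f"junk={epig(probs_junk, eval_set):.3f}")
```

With these made-up numbers, BALD gives both candidates the same score (about 0.368 nats), because each one is equally uncertain about its own label. EPIG scores the relevant candidate at about 0.222 nats and the junk candidate at essentially zero, because labelling the junk point carries no information about the predictions at x*.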
