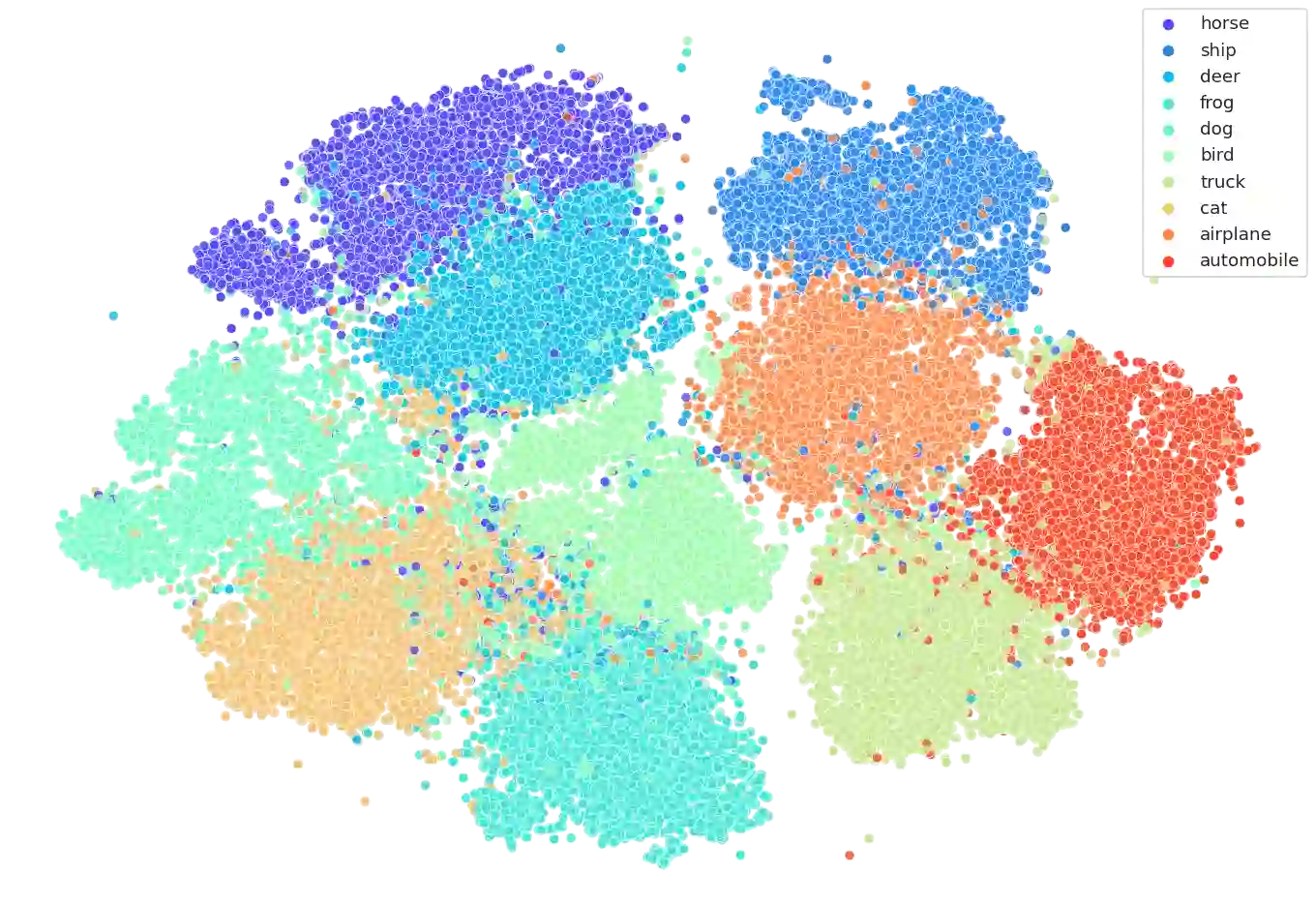

Distributing machine learning predictors enables the collection of large-scale datasets while leaving sensitive raw data at trustworthy sites. We show that locally training support vector machines (SVMs) and computing their average yields a learning technique that scales to a large number of users, satisfies differential privacy, and is applicable to non-trivial tasks such as CIFAR-10. For a large number of participants, communication cost is one of the main challenges. We keep communication cost low by requiring only a single invocation of an efficient secure multiparty summation protocol. By relying on a state-of-the-art feature extractor (SimCLR), we are able to use differentially private convex learners for such tasks. Our experimental results illustrate that for $1{,}000$ users with $50$ data points each, our scheme outperforms state-of-the-art scalable distributed learning methods (differentially private federated learning, DP-FL for short) while incurring around $500$ times lower communication cost: on CIFAR-10, we achieve a classification accuracy of $79.7\,\%$ at $\varepsilon = 0.59$, whereas DP-FL achieves $57.6\,\%$. More generally, we prove learnability properties for the average of such locally trained models: convergence and uniform stability. By requiring only strongly convex, smooth, and Lipschitz-continuous objective functions, locally trained via stochastic gradient descent (SGD), we achieve a strong utility-privacy tradeoff.
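The train-locally-then-average idea described above can be illustrated with a minimal sketch. This is not the authors' implementation, and several parts are assumptions made for illustration: an L2-regularized logistic loss stands in for a strongly convex, smooth objective; a plain sum stands in for the secure multiparty summation protocol; the Gaussian `noise_std` is a hypothetical parameter that is not calibrated to any particular $\varepsilon$; and the data is synthetic rather than SimCLR features of CIFAR-10. All function names are hypothetical.

```python
import numpy as np


def train_local(X, y, lam=0.1, epochs=20, lr=0.1, rng=None):
    """Run SGD on an L2-regularized logistic loss (labels in {-1, +1}).

    Assumption: this loss is only a stand-in for a strongly convex,
    smooth objective; it is not claimed to be the paper's learner.
    """
    if rng is None:
        rng = np.random.default_rng(0)
    w = np.zeros(X.shape[1])
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            # Clip the margin to keep exp() numerically safe.
            margin = np.clip(y[i] * (X[i] @ w), -30.0, 30.0)
            grad = -y[i] * X[i] / (1.0 + np.exp(margin)) + lam * w
            w -= lr * grad
    return w


def average_models(local_models, noise_std=0.0, rng=None):
    """Average local weight vectors and add Gaussian noise.

    Assumption: np.sum is a placeholder for a secure summation
    protocol, and noise_std is illustrative, not a calibrated
    differential-privacy noise scale.
    """
    if rng is None:
        rng = np.random.default_rng(0)
    total = np.sum(local_models, axis=0)
    avg = total / len(local_models)
    return avg + rng.normal(0.0, noise_std, size=avg.shape)


def run_demo(n_users=20, n_points=50, dim=5, noise_std=0.01, seed=0):
    """Train one model per user on synthetic data, average, and evaluate."""
    rng = np.random.default_rng(seed)
    w_true = rng.normal(size=dim)

    local_models = []
    for _ in range(n_users):
        X = rng.normal(size=(n_points, dim))
        y = np.where(X @ w_true >= 0, 1.0, -1.0)
        local_models.append(train_local(X, y, rng=rng))

    w_avg = average_models(local_models, noise_std=noise_std, rng=rng)

    # Evaluate the averaged model on fresh synthetic test data.
    X_test = rng.normal(size=(2000, dim))
    y_test = np.where(X_test @ w_true >= 0, 1.0, -1.0)
    y_pred = np.where(X_test @ w_avg >= 0, 1.0, -1.0)
    return float(np.mean(y_pred == y_test))


if __name__ == "__main__":
    print(f"accuracy of averaged model: {run_demo():.3f}")
```

Each user contributes only one weight vector, so the aggregation step is a single summation over model parameters, which is the property the abstract credits for the low communication cost.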
