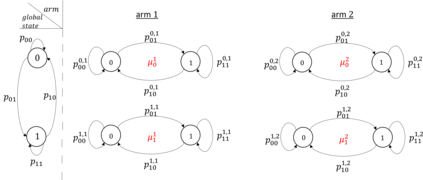

We consider an extension to the restless multi-armed bandit (RMAB) problem with unknown arm dynamics, where an unknown exogenous global Markov process governs the rewards distribution of each arm. Under each global state, the rewards process of each arm evolves according to an unknown Markovian rule, which is non-identical among different arms. At each time, a player chooses an arm out of $N$ arms to play, and receives a random reward from a finite set of reward states. The arms are restless, that is, their local state evolves regardless of the player's actions. Motivated by recent studies on related RMAB settings, the regret is defined as the reward loss with respect to a player that knows the dynamics of the problem, and plays at each time $t$ the arm that maximizes the expected immediate value. The objective is to develop an arm-selection policy that minimizes the regret. To that end, we develop the Learning under Exogenous Markov Process (LEMP) algorithm. We analyze LEMP theoretically and establish a finite-sample bound on the regret. We show that LEMP achieves a logarithmic regret order with time. We further analyze LEMP numerically and present simulation results that support the theoretical findings and demonstrate that LEMP significantly outperforms alternative algorithms.

翻译:我们考虑扩大无休止的多武装匪徒(RMAB)问题,因为那里有未知的外生全球马可夫(Markov)进程,它控制着每个手臂的奖赏分配。在每一个全球国家,每个手臂的奖赏过程都根据未知的马尔科维亚规则演变,而该规则在不同武器之间并不完全相同。玩家每次都选择用一臂来玩,并从一组有限的奖赏国家获得随机奖赏。武器是无休止的,也就是说,它们的当地状态是演化的,而不管玩家的行为如何。根据最近对相关马卡布设置的研究,遗憾被定义为对了解问题动态的玩家的奖赏损失。我们表明,LEMP在每次玩耍时都会用美元来发挥最大预期的直接价值。我们的目标是制定一条选择武器的政策,从而将遗憾降到最低程度。为此,我们在Exgenous Markov 进程(LEMP) 算法下开发了学习方法。我们分析了LEMP理论上的演算法,并在遗憾的基础上进一步建立了一个限定的缩。我们表明LEMP能够大幅地分析数字逻辑和逻辑结果。