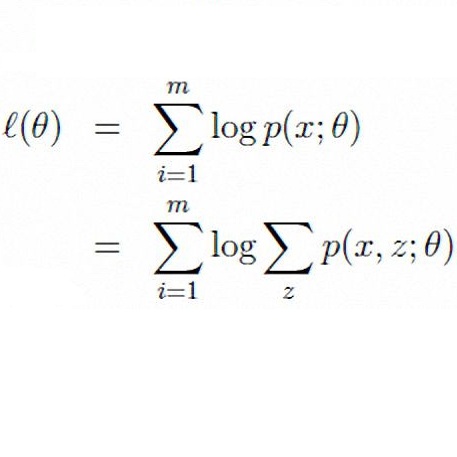

Parameter estimation in logistic regression is a well-studied problem, with the Newton-Raphson method being one of the most prominent optimization techniques used in practice. A number of monotone optimization methods, including minorization-maximization (MM) algorithms, expectation-maximization (EM) algorithms, and related variational Bayes approaches, offer a family of useful alternatives that are guaranteed to increase the logistic regression likelihood at every iteration. In this article, we propose a modified version of a logistic regression EM algorithm that can substantially improve computational efficiency while preserving the monotonicity of EM and the simplicity of the EM parameter updates. By introducing an additional latent parameter and selecting this parameter to maximize the penalized observed-data log-likelihood at every iteration, our iterative algorithm can be interpreted as a parameter-expanded expectation-conditional maximization either (ECME) algorithm, and we demonstrate how to use the parameter-expanded ECME with an arbitrary choice of weights and penalty function. In addition, we describe a generalized version of our parameter-expanded ECME algorithm that can be tailored to the challenges encountered in specific high-dimensional problems, and we study several interesting connections between this generalized algorithm and other well-known methods. Performance comparisons between our method, the EM algorithm, and several other optimization methods are presented using a series of simulation studies based on both real and synthetic datasets.
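To make the idea of a monotone EM update followed by a likelihood-maximizing expansion step concrete, here is a minimal sketch. It is not the article's algorithm. It assumes the standard Pólya-Gamma EM update for unpenalized logistic regression with unit weights, and it assumes the extra parameter acts as a scalar multiplier `alpha` on the EM iterate, chosen by a safeguarded one-dimensional Newton search. All function names (`pg_weights`, `em_step`, `best_scale`, `fit`) are hypothetical.

```python
import numpy as np


def loglik(X, y, beta):
    """Observed-data logistic log-likelihood: sum_i [y_i*eta_i - log(1 + exp(eta_i))]."""
    eta = X @ beta
    return float(np.sum(y * eta - np.logaddexp(0.0, eta)))


def pg_weights(eta):
    """E-step weights tanh(eta/2) / (2*eta), with the limiting value 1/4 at eta = 0."""
    near_zero = np.abs(eta) < 1e-8
    safe = np.where(near_zero, 1.0, eta)  # avoid 0/0 in the unused branch
    return np.where(near_zero, 0.25, np.tanh(safe / 2.0) / (2.0 * safe))


def em_step(X, y, beta):
    """M-step: solve the weighted least-squares system (X' W X) beta = X' (y - 1/2)."""
    w = pg_weights(X @ beta)
    return np.linalg.solve(X.T @ (w[:, None] * X), X.T @ (y - 0.5))


def best_scale(X, y, beta, n_newton=20):
    """Assumed expansion step: pick a scalar alpha to maximize loglik(alpha * beta)."""
    eta = X @ beta
    alpha = 1.0
    for _ in range(n_newton):
        p = 0.5 * (1.0 + np.tanh(0.5 * alpha * eta))  # numerically stable sigmoid
        grad = np.sum((y - p) * eta)
        hess = -np.sum(p * (1.0 - p) * eta**2)
        if hess > -1e-12:  # flat direction: stop rather than divide by ~0
            break
        step = grad / hess
        alpha -= step
        if abs(step) < 1e-10:
            break
    # Safeguard: fall back to alpha = 1 so the update never does worse than plain EM.
    return alpha if loglik(X, y, alpha * beta) >= loglik(X, y, beta) else 1.0


def fit(X, y, n_iter=50, expand=False):
    """Run EM (expand=False) or EM plus the scalar expansion step (expand=True)."""
    beta = np.zeros(X.shape[1])
    trace = [loglik(X, y, beta)]
    for _ in range(n_iter):
        beta = em_step(X, y, beta)
        if expand:
            beta = best_scale(X, y, beta) * beta
        trace.append(loglik(X, y, beta))
    return beta, np.array(trace)


# Small synthetic example (the data-generating values are arbitrary).
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(500), rng.normal(size=(500, 3))])
true_beta = np.array([-0.5, 1.0, -1.0, 0.5])
y = (rng.random(500) < 0.5 * (1.0 + np.tanh(0.5 * (X @ true_beta)))).astype(float)

beta_em, trace_em = fit(X, y, expand=False)
beta_px, trace_px = fit(X, y, expand=True)
```

Because the fallback to `alpha = 1` keeps the expanded iterate at least as good as the EM iterate, both log-likelihood traces are non-decreasing. Comparing `trace_em` and `trace_px` iteration by iteration gives a rough sense of how much the extra scaling step helps on a given dataset.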
