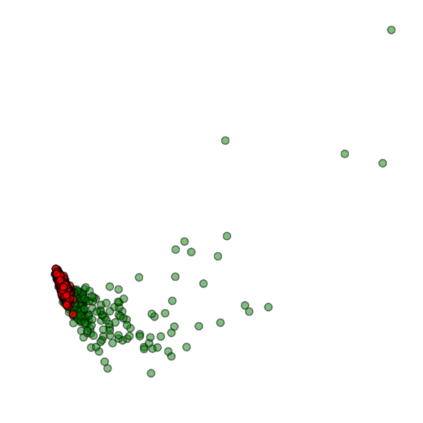

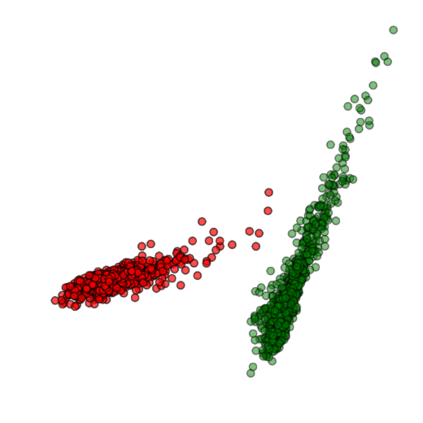

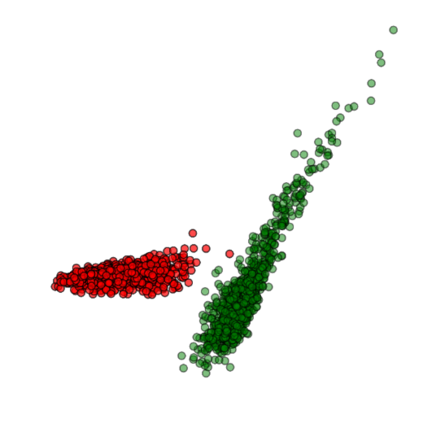

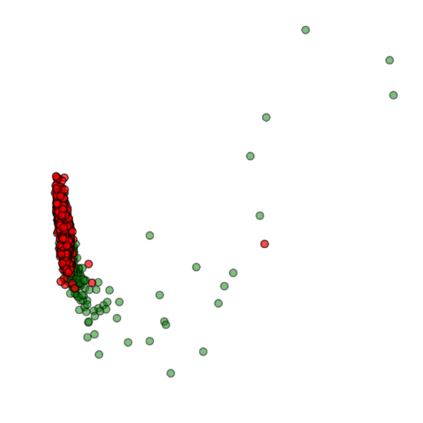

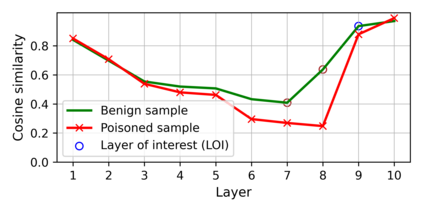

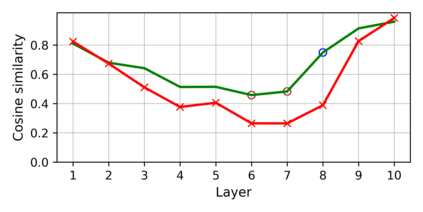

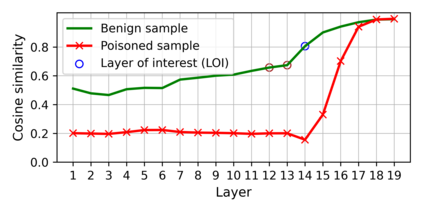

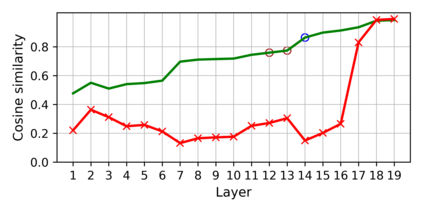

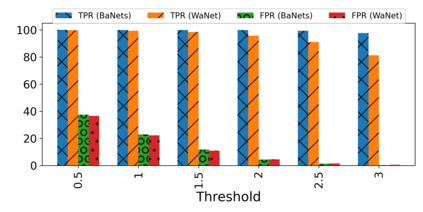

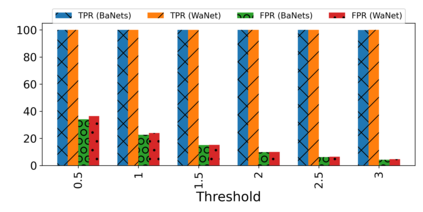

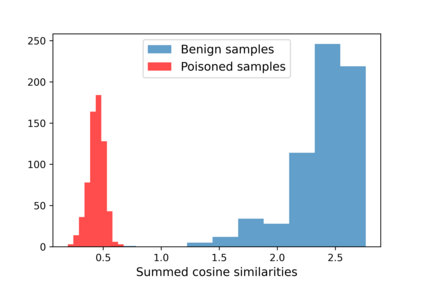

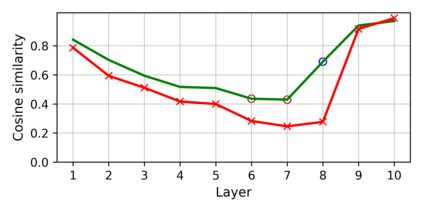

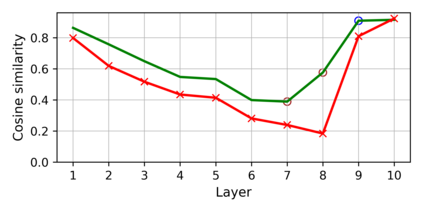

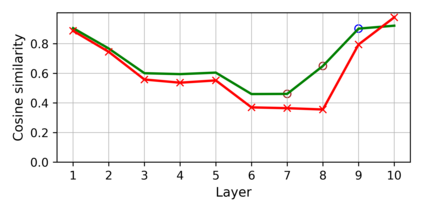

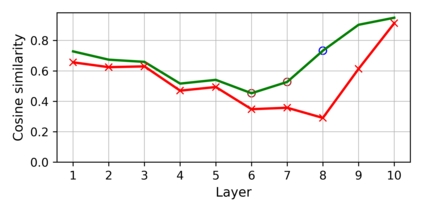

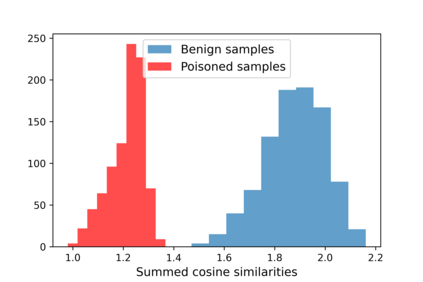

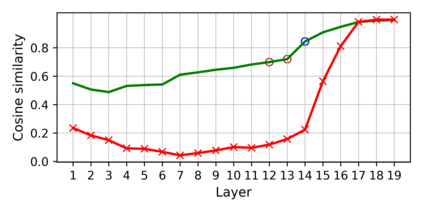

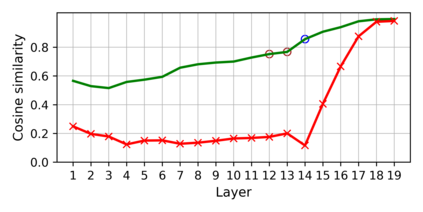

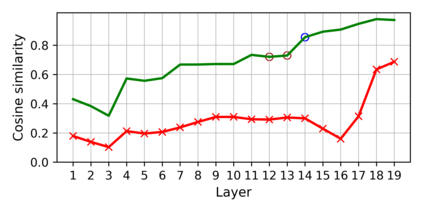

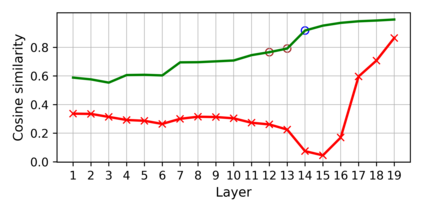

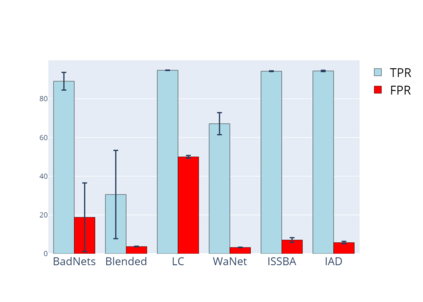

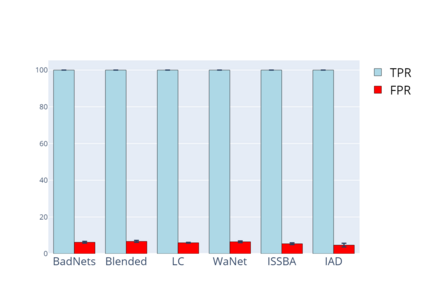

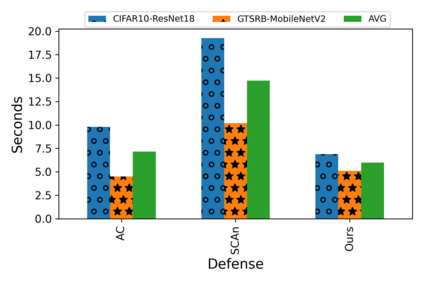

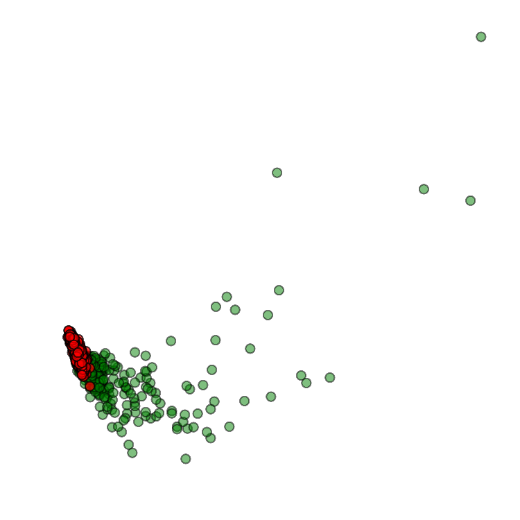

Training deep neural networks (DNNs) usually requires massive training data and computational resources. Users who cannot afford this may outsource training to a third party or rely on publicly available pre-trained models. Unfortunately, doing so exposes them to a new training-time threat against DNNs, the backdoor attack, which aims to make the model misclassify input samples that contain adversary-specified trigger patterns. In this paper, we first conduct a layer-wise feature analysis of poisoned and benign samples from the target class. We find that the feature difference between benign and poisoned samples tends to peak at a critical layer, which is not always the layer used by existing defenses, namely the layer before the fully-connected layers. We also show how to locate this critical layer based on the behavior of benign samples. We then propose a simple yet effective method that filters poisoned samples by analyzing the feature differences between suspicious and benign samples at the critical layer. Extensive experiments on two benchmark datasets confirm the effectiveness of our defense.
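The abstract does not state the layer-selection rule or the distance measure, so what follows is only a minimal sketch under stated assumptions, not the authors' method. It assumes per-layer features (for example, flattened activations) have already been extracted for a small set of benign target-class samples. It uses cosine similarity to the benign mean feature as the measure of feature difference, and it locates the critical layer with a hypothetical heuristic: the layer where benign features cluster most tightly around their mean. A suspicious sample is flagged as poisoned when its similarity falls below a low quantile of the benign similarities. The names `locate_critical_layer`, `filter_poisoned`, and the `quantile` parameter are illustrative, and the toy feature values are made up.

```python
import math
from statistics import mean
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return dot / (nu * nv)


def centroid(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def locate_critical_layer(benign_by_layer: List[List[Vector]]) -> int:
    """Hypothetical heuristic (assumption, not from the paper): return the index
    of the layer whose benign features are most tightly clustered, i.e. have the
    highest mean cosine similarity to their own centroid."""
    def compactness(features: List[Vector]) -> float:
        c = centroid(features)
        return mean(cosine(f, c) for f in features)

    return max(range(len(benign_by_layer)),
               key=lambda i: compactness(benign_by_layer[i]))


def filter_poisoned(suspicious: List[Vector], benign: List[Vector],
                    quantile: float = 0.05) -> List[bool]:
    """Flag suspicious samples (True = likely poisoned) whose cosine similarity
    to the benign centroid is below the given quantile of benign similarities."""
    if not 0.0 <= quantile <= 1.0:
        raise ValueError("quantile must be in [0, 1]")
    c = centroid(benign)
    benign_scores = sorted(cosine(b, c) for b in benign)
    threshold = benign_scores[int(quantile * (len(benign_scores) - 1))]
    return [cosine(s, c) < threshold for s in suspicious]


# Toy features for two layers of benign target-class samples (made-up values).
benign_layers = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],    # layer 0: spread out
    [[1.0, 0.1], [1.0, 0.0], [1.0, -0.1]],   # layer 1: tightly clustered
]
# Suspicious samples at the chosen layer: one benign-looking, one outlier.
suspicious = [[1.0, 0.05], [0.0, 1.0]]

critical = locate_critical_layer(benign_layers)
print(critical)  # → 1
print(filter_poisoned(suspicious, benign_layers[critical]))  # → [False, True]
```

Pure Python keeps the sketch dependency-free. In practice the features would be captured from a trained model (for example, with forward hooks) and the arithmetic vectorized with an array library; the heuristic for picking the critical layer should be replaced by whatever criterion the paper actually specifies.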
