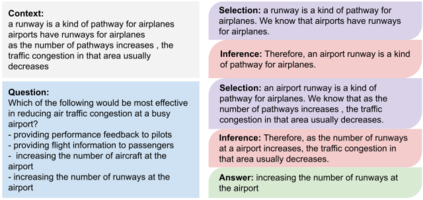

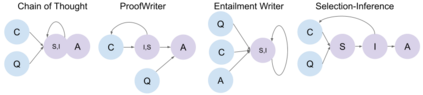

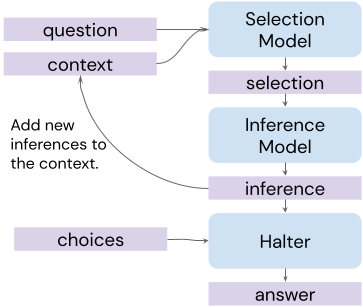

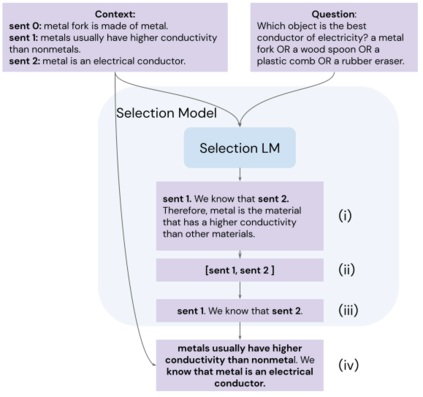

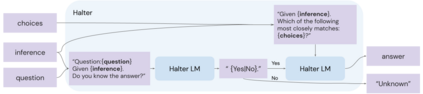

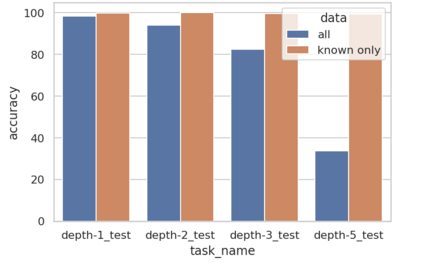

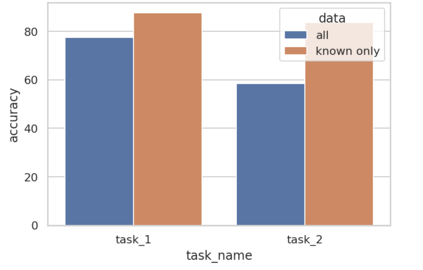

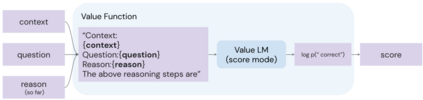

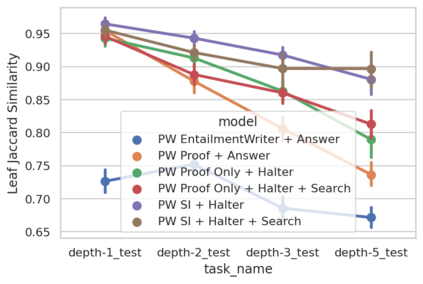

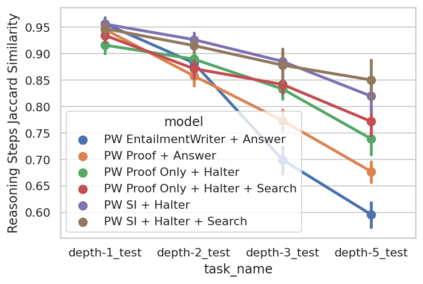

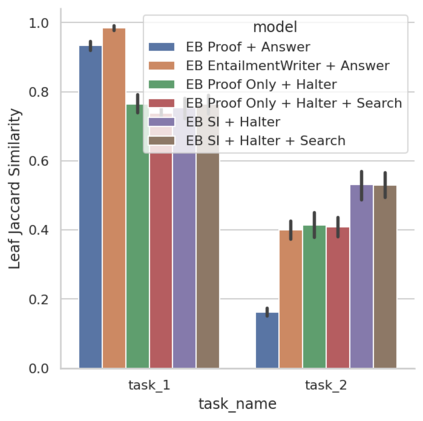

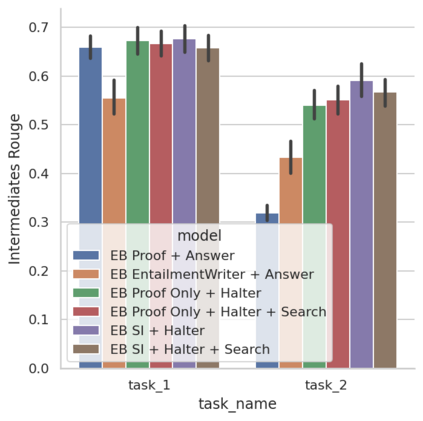

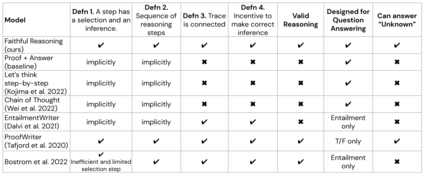

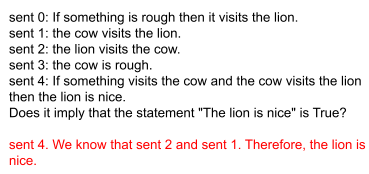

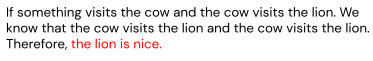

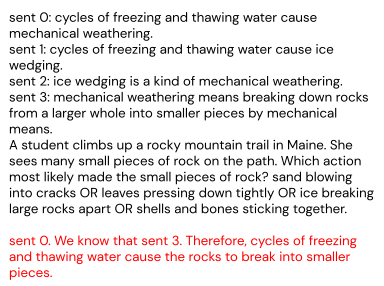

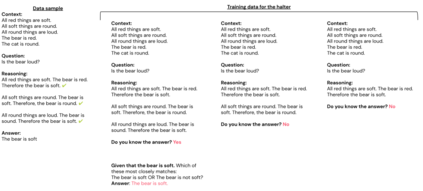

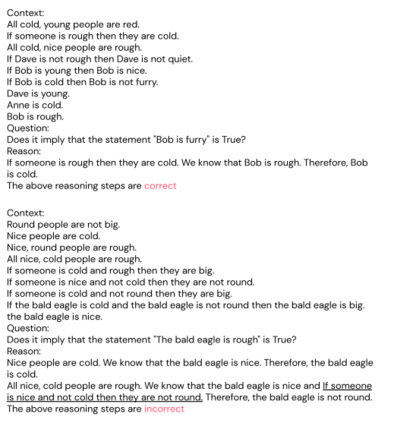

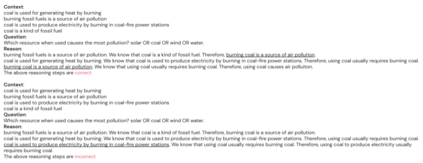

Although contemporary large language models (LMs) demonstrate impressive question-answering capabilities, their answers are typically the product of a single call to the model. This entails an unwelcome degree of opacity and compromises performance, especially on problems that are inherently multi-step. To address these limitations, we show how LMs can be made to perform faithful multi-step reasoning via a process whose causal structure mirrors the underlying logical structure of the problem. Our approach chains together reasoning steps, where each step results from calls to two fine-tuned LMs, one for selection and one for inference, to produce a valid reasoning trace. Our method carries out a beam search through the space of reasoning traces to improve reasoning quality. We demonstrate the effectiveness of our approach on multi-step logical deduction and scientific question-answering, showing that it outperforms baselines on final-answer accuracy and generates human-interpretable reasoning traces whose validity can be checked by the user.
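The selection-inference loop and the beam search described above can be illustrated with a toy sketch. It is not the authors' implementation: the two fine-tuned LMs are replaced by hypothetical rule-matching stand-ins (`select_premises`, `infer_conclusion`), facts and rules use a made-up `predicate(entity)` encoding, and each rule carries an invented log-score used to rank traces, since the abstract does not say how traces are scored. What the sketch does preserve is the causal structure: the inference step sees only the selected statements, never the question, and every step is recorded in a checkable trace.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical toy encoding (not the paper's format):
#   a fact ("cat", "fred") reads "cat(fred)";
#   a rule ("cat", "animal", -0.1) reads "cat(x) -> animal(x)" with a made-up log-score.
Fact = Tuple[str, str]
Rule = Tuple[str, str, float]
Step = Tuple[Fact, Rule, Fact]  # (selected fact, selected rule, inferred fact)


@dataclass
class Trace:
    facts: List[Fact]                                # context, grows by one fact per step
    steps: List[Step] = field(default_factory=list)  # the human-readable reasoning trace
    score: float = 0.0                               # sum of (made-up) selection log-scores


def select_premises(trace: Trace, rules: List[Rule]) -> List[Tuple[Fact, Rule, float]]:
    """Stand-in for the selection LM: propose (fact, rule) pairs whose premise matches."""
    return [(fact, rule, rule[2])
            for fact in trace.facts
            for rule in rules
            if rule[0] == fact[0]]


def infer_conclusion(fact: Fact, rule: Rule) -> Fact:
    """Stand-in for the inference LM: it sees only the selected statements,
    never the question, so each new fact depends on the selection alone."""
    premise, conclusion, _ = rule
    assert fact[0] == premise
    return (conclusion, fact[1])


def beam_search(facts: List[Fact], rules: List[Rule], goal: Fact,
                beam_width: int = 2, max_steps: int = 5) -> Optional[Trace]:
    """Beam search over reasoning traces; returns the best-scoring trace reaching goal."""
    beam = [Trace(facts=list(facts))]
    finished: List[Trace] = []
    for _ in range(max_steps):
        candidates = []
        for trace in beam:
            for fact, rule, logp in select_premises(trace, rules):
                new_fact = infer_conclusion(fact, rule)
                if new_fact in trace.facts:
                    continue  # step adds nothing new; prune it to avoid loops
                candidates.append(Trace(facts=trace.facts + [new_fact],
                                        steps=trace.steps + [(fact, rule, new_fact)],
                                        score=trace.score + logp))
        # Completed traces are set aside; only unfinished ones compete for the beam.
        finished += [t for t in candidates if goal in t.facts]
        open_traces = [t for t in candidates if goal not in t.facts]
        open_traces.sort(key=lambda t: t.score, reverse=True)
        beam = open_traces[:beam_width]
        if not beam:
            break
    return max(finished, key=lambda t: t.score, default=None)


if __name__ == "__main__":
    facts = [("cat", "fred")]
    rules = [("cat", "animal", -0.1), ("animal", "alive", -0.2),
             ("cat", "furry", -0.05), ("furry", "soft", -0.05)]
    best = beam_search(facts, rules, goal=("alive", "fred"))
    for fact, rule, new_fact in best.steps:
        print(f"{fact[0]}({fact[1]}) & {rule[0]}(x) -> {rule[1]}(x)  =>  {new_fact[0]}({new_fact[1]})")
```

On this toy input the returned trace derives `animal(fred)` and then `alive(fred)`, and each printed line can be checked by hand. Setting finished traces aside before pruning is a design choice of this sketch: it stops a short, complete trace from being pushed out of the beam by longer partial traces (here the irrelevant `furry`/`soft` branch) that temporarily score higher.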
