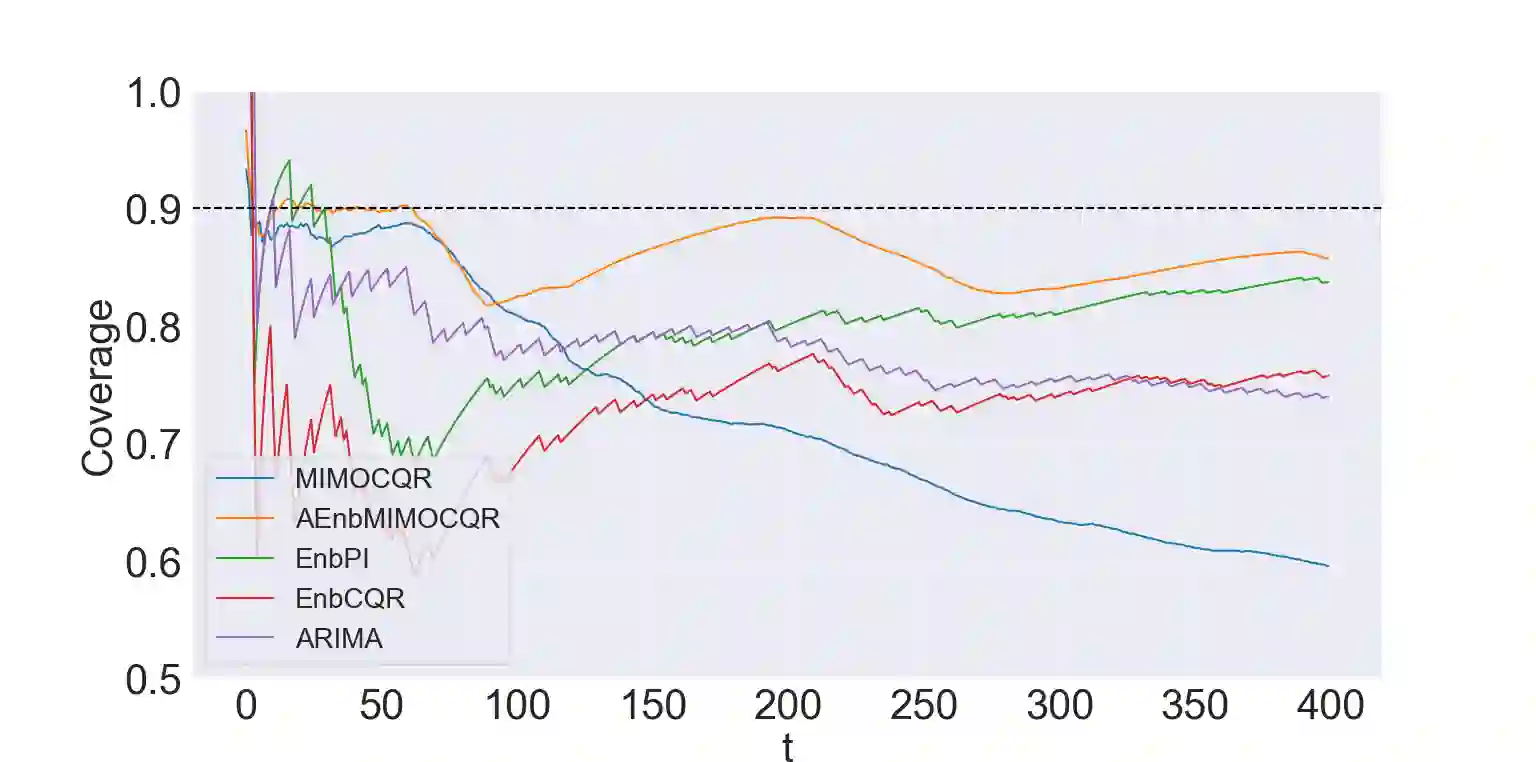

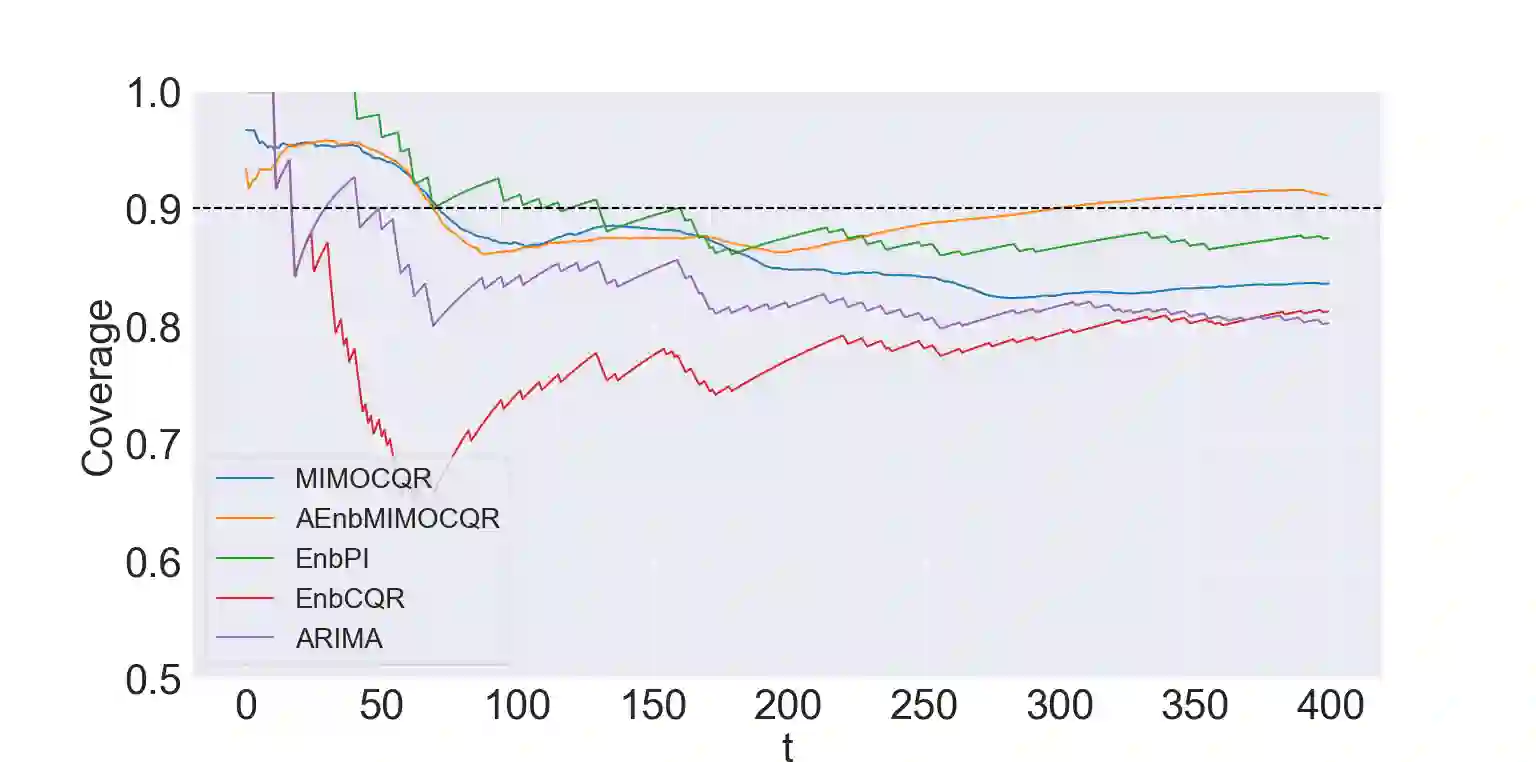

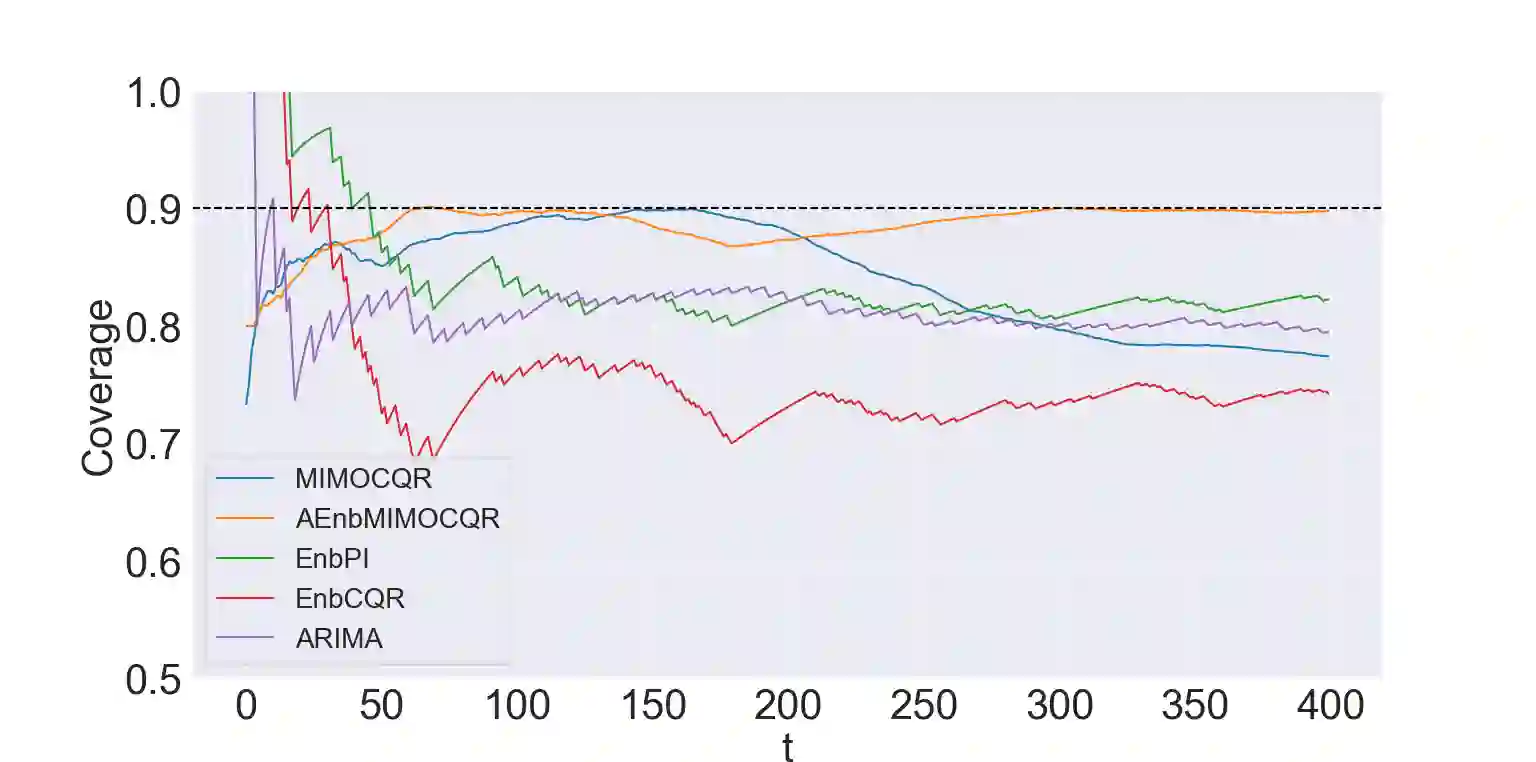

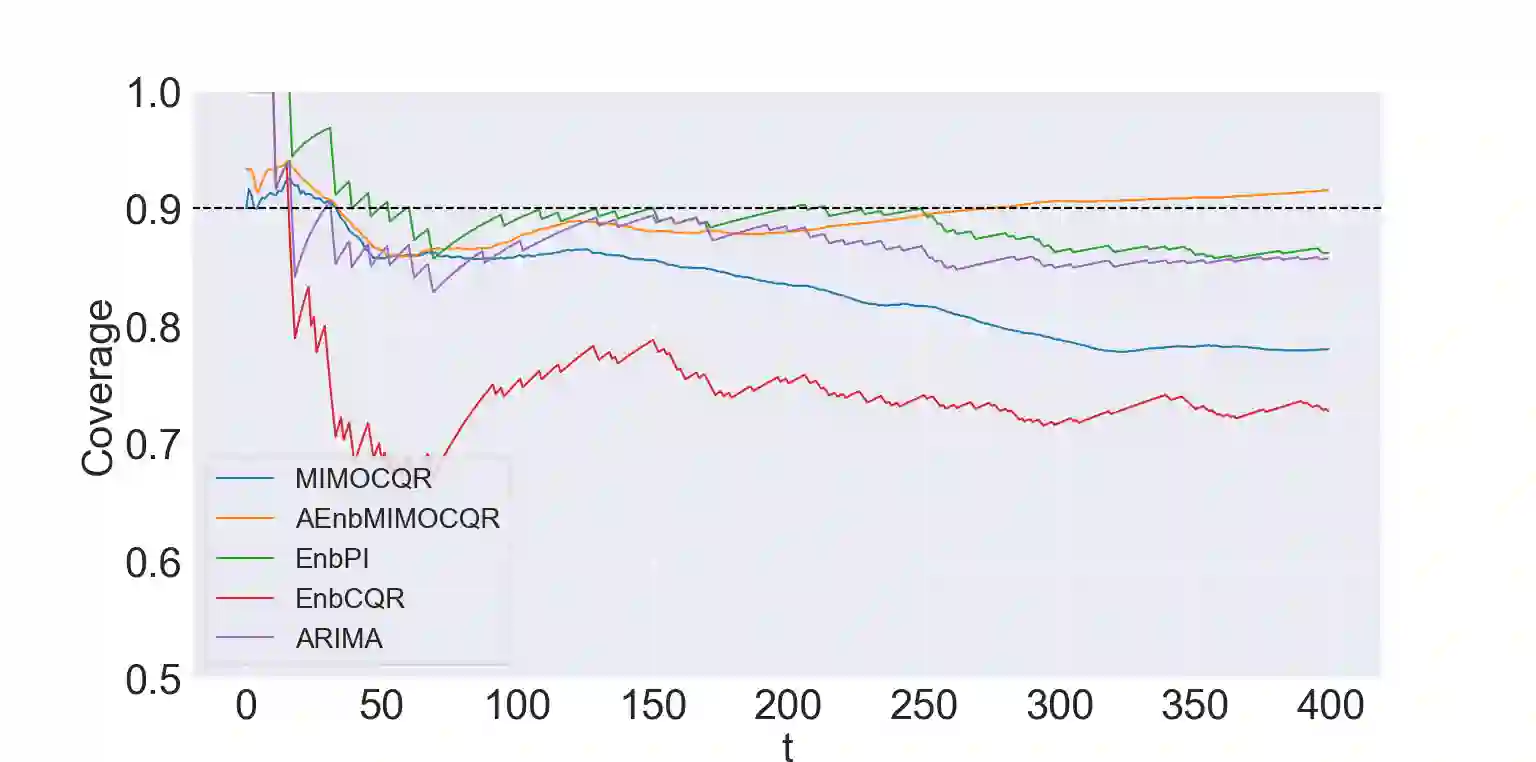

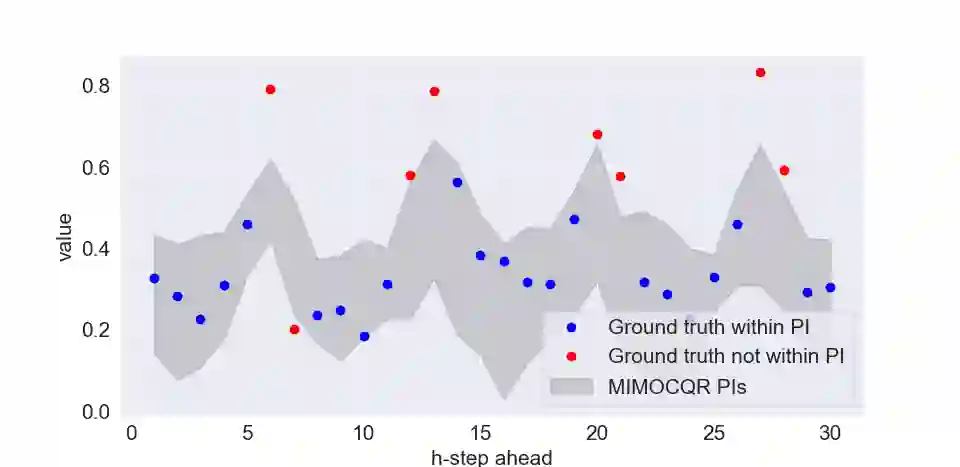

The exponential growth of machine learning (ML) has prompted great interest in quantifying the uncertainty of each prediction at a user-defined level of confidence. Reliable uncertainty quantification is crucial and is a step towards increased trust in AI results. It becomes especially important in high-stakes decision-making, where the true output must lie within the confidence set with high probability. Conformal prediction (CP) is a distribution-free uncertainty quantification framework that works with any black-box model and yields prediction intervals (PIs) that are valid under the mild assumption of exchangeability. CP-type methods are gaining popularity because they are easy to implement and computationally cheap; however, the exchangeability assumption immediately rules out time series forecasting. Although recent papers tackle covariate shift, this is not enough for the general time series forecasting problem of producing valid H-step-ahead PIs. To attain this goal, we propose a new method called AEnbMIMOCQR (Adaptive ensemble batch multi-input multi-output conformalized quantile regression), which produces asymptotically valid PIs and is appropriate for heteroscedastic time series. We compare the proposed method against state-of-the-art competing methods on the NN5 forecasting competition dataset. All the code and data needed to reproduce the experiments are made available.
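For readers new to the conformalized quantile regression (CQR) component named above, the calibration step can be sketched as follows. This is a minimal sketch of standard split CQR on a single exchangeable calibration set. It is *not* the AEnbMIMOCQR method: it contains no ensemble, batching, multi-output, or adaptive step. The function names (`cqr_margin`, `cqr_interval`), the deliberately too-narrow `toy_quantile_model`, and the synthetic heteroscedastic data are all hypothetical illustrations.

```python
import math
import random
from typing import Sequence, Tuple


def cqr_margin(lower: Sequence[float], upper: Sequence[float],
               y: Sequence[float], alpha: float) -> float:
    """Split-CQR margin from a held-out calibration set.

    lower/upper are a quantile model's predictions of the alpha/2 and
    1 - alpha/2 quantiles on calibration points; y holds the true targets.
    """
    # Conformity score: signed distance of y outside [lower, upper]
    # (negative when y lies strictly inside the band).
    scores = sorted(max(lo - yi, yi - hi) for lo, hi, yi in zip(lower, upper, y))
    n = len(scores)
    # Finite-sample corrected rank: the ceil((n + 1)(1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return scores[k - 1]


def cqr_interval(lower: float, upper: float, margin: float) -> Tuple[float, float]:
    """Widen (or shrink, if margin < 0) the raw quantile band by the margin."""
    return lower - margin, upper + margin


def toy_quantile_model(x: float, z: float = 1.0) -> Tuple[float, float]:
    """Hypothetical quantile model whose band (+/- z*x) is too narrow on purpose."""
    return x - z * x, x + z * x


if __name__ == "__main__":
    rng = random.Random(0)
    alpha = 0.1

    def sample(n: int):
        # Heteroscedastic toy data: the noise standard deviation equals x.
        xs = [rng.uniform(1.0, 5.0) for _ in range(n)]
        return xs, [x + rng.gauss(0.0, x) for x in xs]

    x_cal, y_cal = sample(1000)
    bands = [toy_quantile_model(x) for x in x_cal]
    q = cqr_margin([b[0] for b in bands], [b[1] for b in bands], y_cal, alpha)

    x_test, y_test = sample(5000)
    hits = 0
    for x, yi in zip(x_test, y_test):
        lo, hi = cqr_interval(*toy_quantile_model(x), q)
        hits += lo <= yi <= hi
    print(f"margin={q:.3f}, empirical coverage={hits / len(y_test):.3f}")
```

Because the correction is a single additive constant, the interval width still varies with the quantile model's band, which is why CQR suits heteroscedastic data. The roughly 1 - alpha marginal coverage relies on calibration and test points being exchangeable, which is exactly the assumption that time-ordered data break.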

翻译:机器学习(ML)的指数增长激发了人们对量化每种预测的不确定性以达到用户确定的信任水平的极大兴趣。可靠的不确定性量化至关重要,是提高对AI结果信任的一个步骤。在高比例决策中,真实产出必须是在高度概率的保密范围内,这一点变得特别重要。非正式预测(CP)是一个无分配的不确定性量化框架,用于任何黑箱模型,并产生在温和的互换假设下有效的预测间隔。CP型方法由于易于执行和计算成本低而越来越受欢迎;然而,可交换性假设立即排除了时间序列预测。尽管最近的论文解决了变换式变化,但对于预测H-step 有效 PIS 的一般性时间序列问题来说,这还不够。为了实现这一目标,我们提出了一个名为AEnbMIMOCQR(Adaptition comple commonble diction-publical-compulation compalizional Returnation)的新方法。我们建议采用的新的方法,这是用来对可控性PIS和可控性数据复制性数据进行对比性测试的方法。