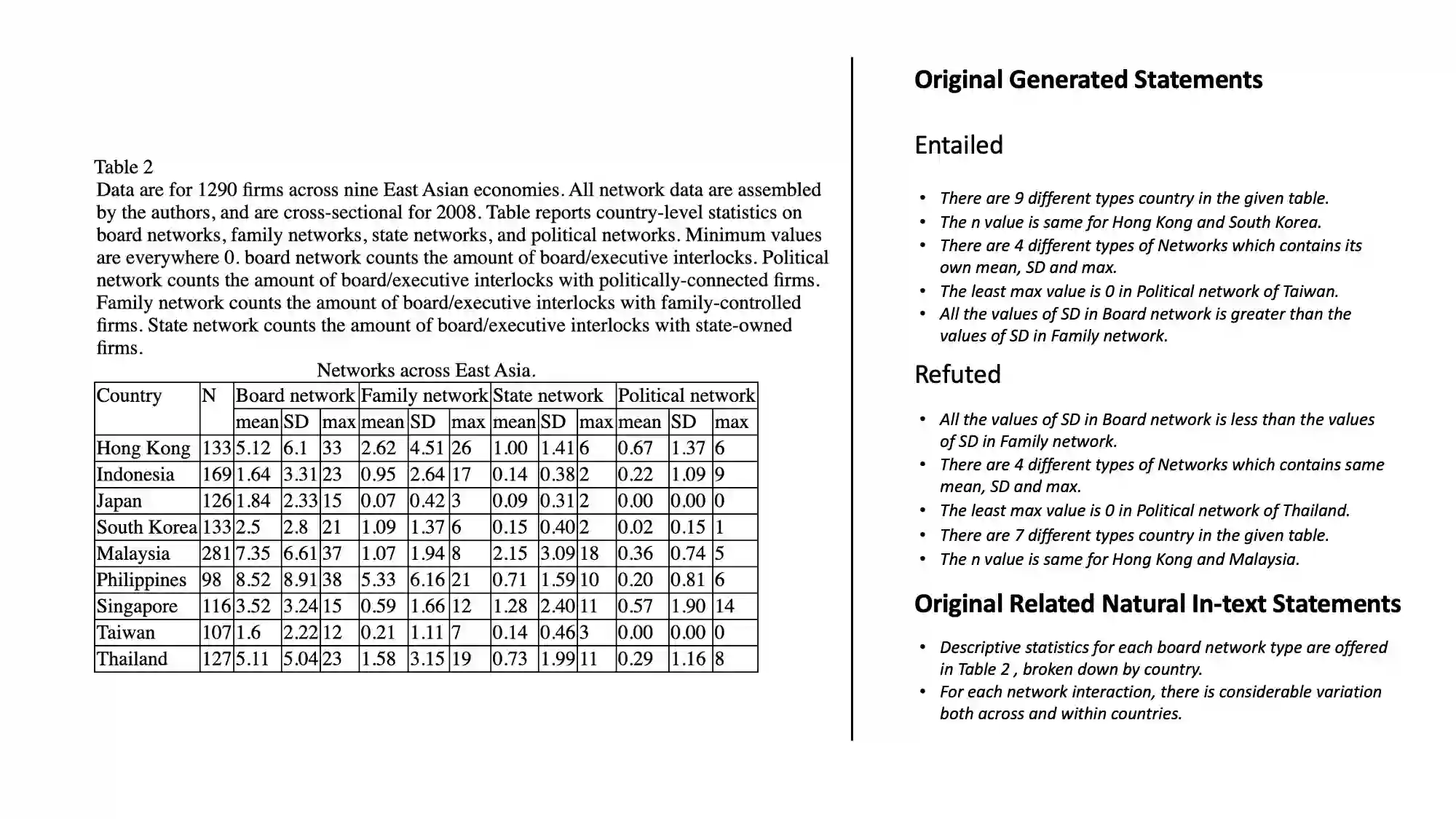

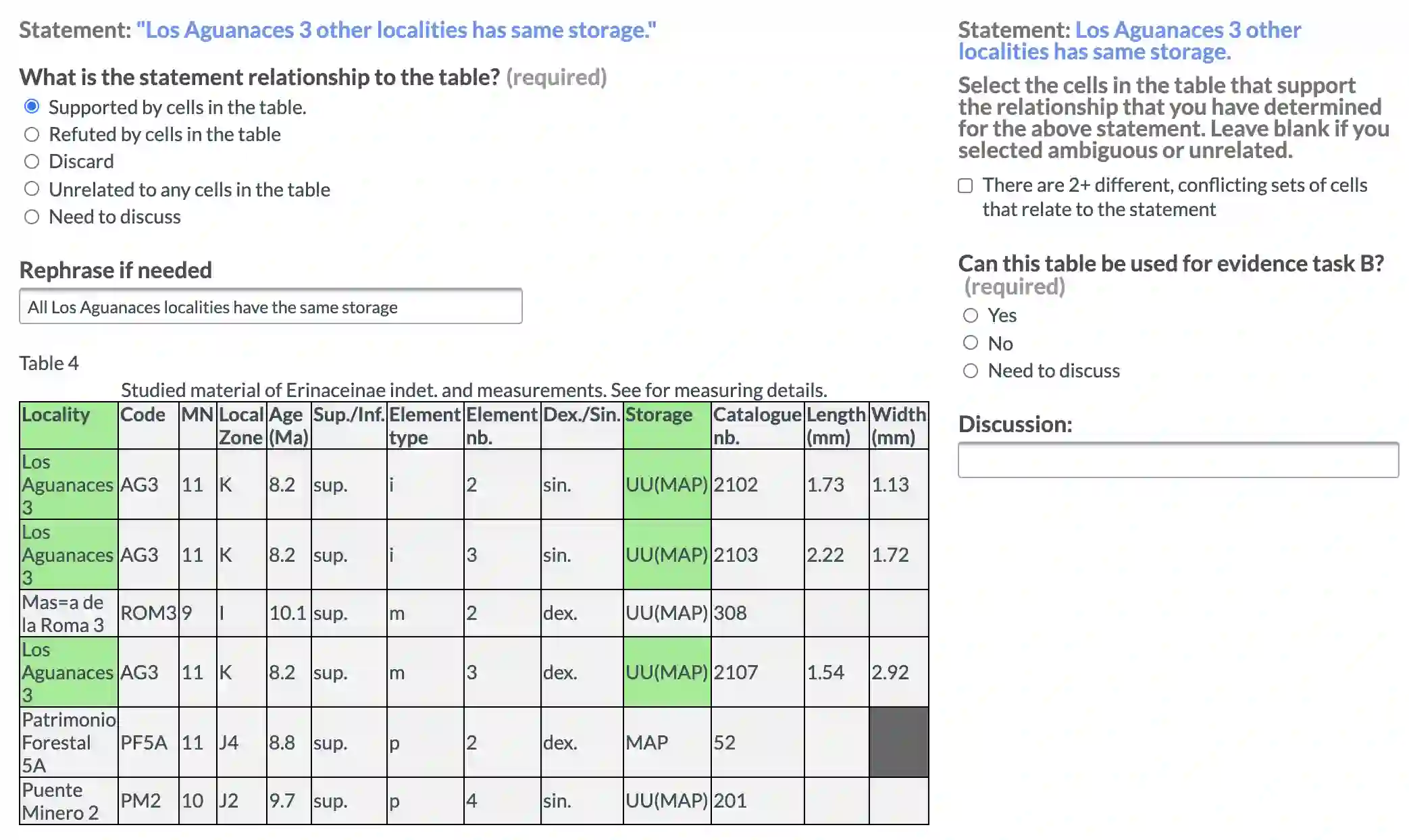

Understanding tables is an important and relevant task that requires grasping table structure as well as comparing and contrasting the information within cells. In this paper, we address this challenge by presenting a new dataset and tasks, posed as a shared task in SemEval 2020 Task 9: Fact Verification and Evidence Finding for Tabular Data in Scientific Documents (SEM-TAB-FACTS). Our dataset contains 981 manually generated tables and an auto-generated dataset of 1,980 tables, providing over 180K statement annotations and over 16M evidence annotations. SEM-TAB-FACTS featured two sub-tasks. In sub-task A, the goal was to determine whether a statement is supported, refuted, or unknown in relation to a table. In sub-task B, the focus was on identifying the specific cells of a table that provide evidence for the statement. Sixty-nine teams signed up to participate, with 19 successful submissions to sub-task A and 12 successful submissions to sub-task B. We present the results and main findings of the competition.
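To make the two sub-task formulations concrete, here is a minimal Python sketch of how one annotated statement might be represented. It assumes a table is a plain grid of cell strings and that sub-task B evidence is a set of (row, column) coordinates. The names `Verdict`, `Table`, `StatementAnnotation`, and `cell_f1` are hypothetical illustrations, not the official SEM-TAB-FACTS data format or scorer, and the F1 shown is a generic set-overlap score rather than the shared task's evaluation metric. The table contents are toy values.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    """Sub-task A labels: how a statement relates to a table (hypothetical encoding)."""
    SUPPORTED = "supported"
    REFUTED = "refuted"
    UNKNOWN = "unknown"


@dataclass
class Table:
    """A table as a grid of cell strings; (row, col) indexes a single cell."""
    cells: list[list[str]]


@dataclass
class StatementAnnotation:
    """One statement about a table: its sub-task A verdict and its
    sub-task B evidence, i.e. the (row, col) cells that back the verdict."""
    text: str
    verdict: Verdict
    evidence: set[tuple[int, int]] = field(default_factory=set)


def cell_f1(predicted: set[tuple[int, int]], gold: set[tuple[int, int]]) -> float:
    """Generic cell-level F1 between predicted and gold evidence cells.

    Illustrative only; NOT the official SEM-TAB-FACTS metric.
    Two empty sets count as perfect agreement (e.g. an unknown statement
    with no evidence cells)."""
    if not predicted and not gold:
        return 1.0
    overlap = len(predicted & gold)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


# Toy example: a header row plus two data rows.
table = Table(cells=[["Model", "Accuracy"], ["A", "0.81"], ["B", "0.77"]])
ann = StatementAnnotation(
    text="Model A is more accurate than model B.",
    verdict=Verdict.SUPPORTED,
    evidence={(1, 1), (2, 1)},  # the two accuracy cells being compared
)

# A system that finds only one of the two evidence cells.
print(round(cell_f1({(1, 1)}, ann.evidence), 3))  # prints 0.667
```

Keeping the verdict and the evidence set on the same record mirrors how the two sub-tasks share statements: sub-task A predicts `verdict`, while sub-task B predicts `evidence` for the same statement.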