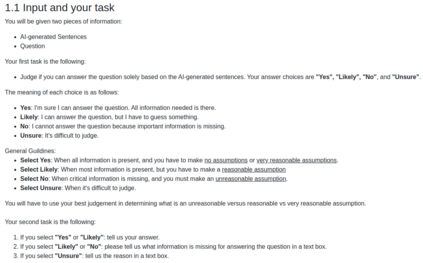

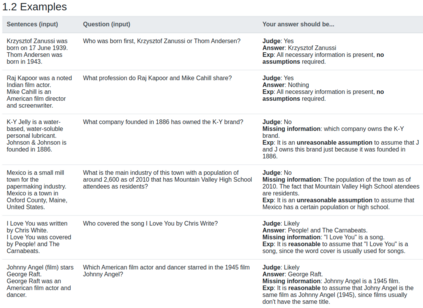

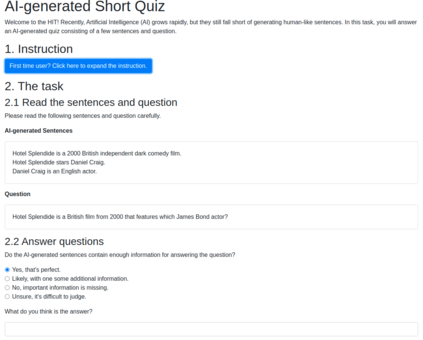

How can we generate concise explanations for multi-hop Reading Comprehension (RC)? Current strategies that identify supporting sentences can be seen as extractive, question-focused summarization of the input text. However, these extractive explanations are not necessarily concise, i.e., not minimally sufficient for answering a question. Instead, we advocate an abstractive approach: we generate a question-focused, abstractive summary of the input paragraphs and then feed it to an RC system. Given a limited amount of human-annotated abstractive explanations, we train the abstractive explainer in a semi-supervised manner: we start from the supervised model and then train it further through trial and error, maximizing a reward function that promotes conciseness. Our experiments demonstrate that, with limited supervision (only 2k instances), the proposed abstractive explainer can generate more compact explanations than an extractive explainer while maintaining sufficiency.
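To make the idea of a conciseness-promoting reward concrete, the following is a minimal sketch, not the reward used in the paper. It assumes a reward that is zero unless the RC system still answers correctly from the summary (sufficiency) and that grows as the summary gets shorter relative to the input (conciseness). The names `conciseness_reward`, `toy_rc`, and `length_weight`, the exact-match check, and the word-count compression ratio are all hypothetical choices made for illustration.

```python
from typing import Callable


def conciseness_reward(
    summary: str,
    passage: str,
    question: str,
    gold_answer: str,
    rc_answer: Callable[[str, str], str],
    length_weight: float = 0.5,
) -> float:
    """Hypothetical reward: gated on sufficiency, with a bonus for compression."""
    # Sufficiency: the RC system must recover the gold answer from the summary alone.
    predicted = rc_answer(question, summary)
    if predicted.strip().lower() != gold_answer.strip().lower():
        return 0.0

    # Conciseness: fraction of the passage (in whitespace tokens) that was removed.
    summary_len = len(summary.split())
    passage_len = max(len(passage.split()), 1)
    compression = max(0.0, 1.0 - summary_len / passage_len)

    # A sufficient summary earns a base reward plus a length-dependent bonus.
    return (1.0 - length_weight) + length_weight * compression


def toy_rc(question: str, context: str) -> str:
    """Stand-in for a real RC model (assumption): looks for one known answer string."""
    return "Paris" if "Paris" in context else "unknown"


passage = "Marie was born in Paris and later moved to Lyon"
question = "Where was Marie born?"

short_summary = "Marie was born in Paris"   # sufficient and half the length
bad_summary = "Marie later moved to Lyon"   # short but no longer sufficient

print(conciseness_reward(short_summary, passage, question, "Paris", toy_rc))  # 0.75
print(conciseness_reward(passage, passage, question, "Paris", toy_rc))        # 0.5
print(conciseness_reward(bad_summary, passage, question, "Paris", toy_rc))    # 0.0
```

Gating on sufficiency first keeps the explainer from being rewarded for summaries that are short but drop the information needed to answer, which is the trade-off the abstract describes between compactness and sufficiency.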
