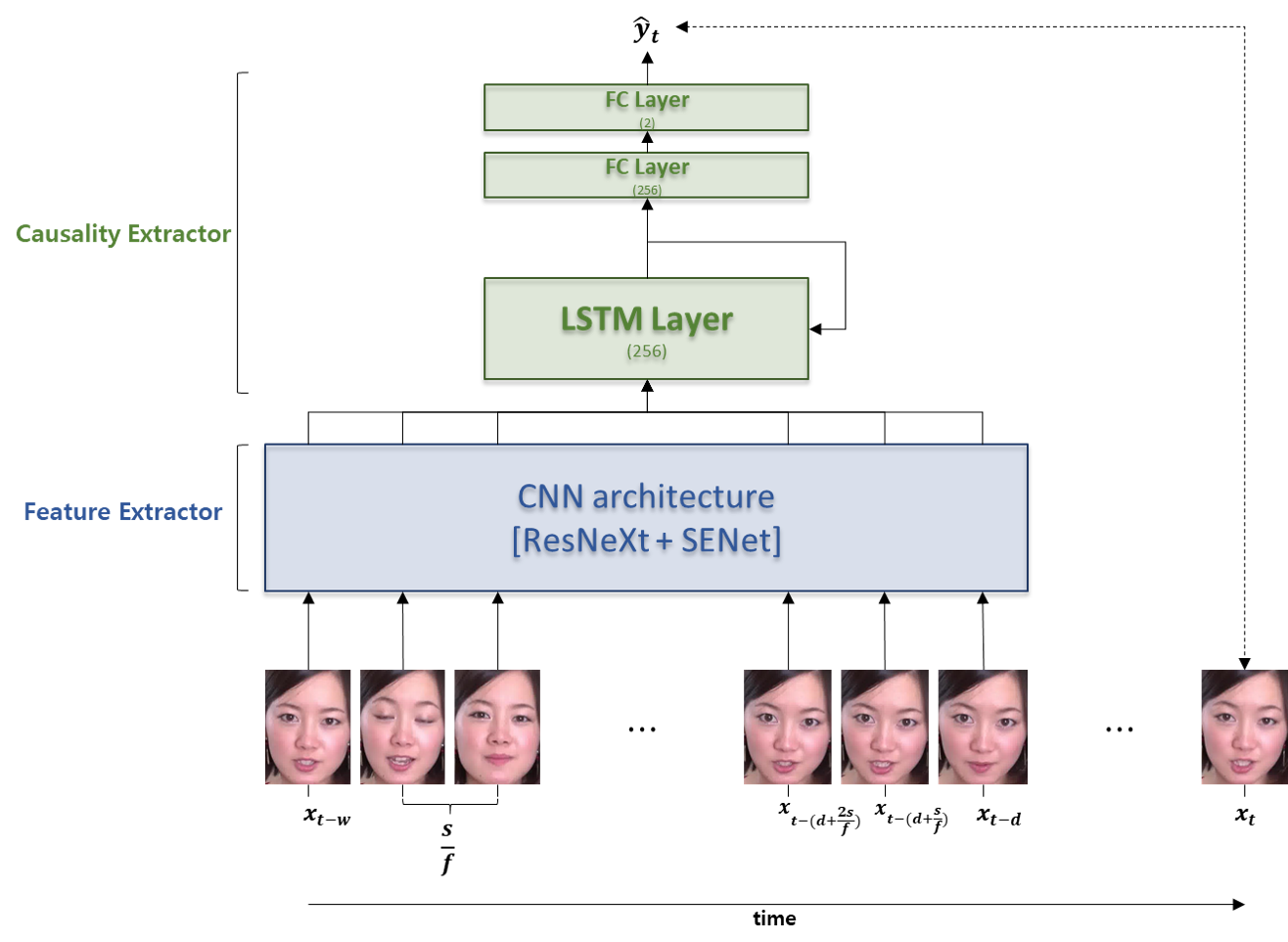

Within research on human affective behavior, facial expression recognition has improved steadily with the development of deep learning. Achieving higher performance, however, typically requires future images in addition to past images and the current facial image, which is an obstacle to applying these methods in real-time environments. In this paper, we propose the causal affect prediction network (CAPNet), which uses only past facial images to predict the corresponding affective valence and arousal. We train CAPNet to learn causal inference between past images and the corresponding valence and arousal through supervised learning, pairing each sequence of past images with the current label from the Aff-Wild2 dataset. Our experiments show that a well-trained CAPNet outperforms the baseline of the second challenge of the Affective Behavior Analysis in-the-wild (ABAW2) Competition while predicting valence and arousal using only past facial images from one-third of a second earlier. In real-time applications, CAPNet can therefore reliably predict affective valence and arousal from past data alone.
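The pairing of past image sequences with the current label can be illustrated with a minimal sketch. This is not the authors' code: the function name `make_causal_pairs` and the parameters `window` (number of past frames per sample) and `gap` (how many steps before the label the newest frame lies) are hypothetical, and the abstract does not state the actual sequence length or frame rate.

```python
from typing import Any, List, Sequence, Tuple


def make_causal_pairs(
    frames: Sequence[Any],
    labels: Sequence[Any],
    window: int = 10,  # hypothetical: number of past frames per sample
    gap: int = 1,      # hypothetical: newest frame used is `gap` steps before the label
) -> List[Tuple[List[Any], Any]]:
    """Pair the label at step t with `window` frames that all precede t."""
    if len(frames) != len(labels):
        raise ValueError("frames and labels must have the same length")
    if window < 1 or gap < 1:
        raise ValueError("window and gap must both be at least 1")

    pairs = []
    # The first usable label is the one with enough strictly earlier frames.
    for t in range(window + gap - 1, len(frames)):
        end = t - gap + 1      # exclusive slice end; newest frame is t - gap
        start = end - window   # oldest frame in the window
        pairs.append((list(frames[start:end]), labels[t]))
    return pairs
```

Because `gap` is at least 1, no sample contains the frame at the label's own time step or any later frame, which is the property that makes the setup usable in real time. If the video were sampled at 30 frames per second (an assumption, not stated in the abstract), `gap=10` would correspond to inputs ending one-third of a second before the label.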
