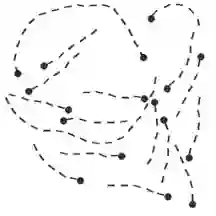

Sequence models based on linear state spaces (SSMs) have recently emerged as a promising architectural choice for modeling long-range dependencies across various modalities. However, they invariably rely on discretizing a continuous state space, which complicates their presentation and understanding. In this work, we dispense with the discretization step and propose a model based on vanilla Diagonal Linear RNNs ($\mathrm{DLR}$). We empirically show that $\mathrm{DLR}$ is as performant as previously proposed SSMs in the presence of strong supervision, despite being conceptually much simpler. Moreover, we characterize the expressivity of SSMs (including $\mathrm{DLR}$) and attention-based models via a suite of $13$ synthetic sequence-to-sequence tasks involving interactions over tens of thousands of tokens, ranging from simple operations, such as shifting an input sequence, to detecting co-dependent visual features over long spatial ranges in flattened images. We find that while SSMs achieve near-perfect performance on tasks that can be modeled via $\textit{few}$ convolutional kernels, they struggle on tasks requiring $\textit{many}$ such kernels, especially when the desired sequence manipulation is $\textit{context-dependent}$. For example, $\mathrm{DLR}$ learns to perfectly shift a $0.5M$-long input by an arbitrary number of positions but fails when the shift size depends on context. Despite these limitations, $\mathrm{DLR}$ reaches high performance on two higher-order reasoning tasks, $\mathrm{ListOpsSubTrees}$ and $\mathrm{PathfinderSegmentation}\text{-}\mathrm{256}$, with input lengths $8K$ and $65K$, respectively, and gives encouraging performance on $\mathrm{PathfinderSegmentation}\text{-}\mathrm{512}$ with input length $262K$, for which attention is not a viable choice.
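To make the link between a diagonal linear RNN and a convolutional kernel concrete, here is a minimal Python sketch. It assumes the form $x_k = \Lambda x_{k-1} + B u_k$, $y_k = \mathrm{Re}(C x_k)$ with a complex diagonal $\Lambda$; the abstract does not spell out DLR's exact parameterization, so this form and the helper names `dlr_recurrent`, `dlr_kernel`, and `causal_conv` are illustrative assumptions. The sketch checks that the recurrence equals a causal convolution with kernel $K_j = \mathrm{Re}\left(\sum_n C_n \lambda_n^j B_n\right)$, and uses a hypothetical parameter choice ($\lambda_n$ set to the $L$-th roots of unity) under which $K$ is a delta at position $s$, i.e. a shift by $s$ positions.

```python
import cmath
import math


def dlr_recurrent(lam, B, C, u):
    """Run a diagonal linear RNN step by step (assumed form, not the paper's code).

    x_k[n] = lam[n] * x_{k-1}[n] + B[n] * u_k   (diagonal state matrix)
    y_k    = Re(sum_n C[n] * x_k[n])
    """
    N = len(lam)
    x = [0j] * N
    ys = []
    for u_k in u:
        x = [lam[n] * x[n] + B[n] * u_k for n in range(N)]
        ys.append(sum(C[n] * x[n] for n in range(N)).real)
    return ys


def dlr_kernel(lam, B, C, L):
    """Unroll the recurrence into a length-L kernel K_j = Re(sum_n C[n] * lam[n]**j * B[n])."""
    return [sum(C[n] * lam[n] ** j * B[n] for n in range(len(lam))).real for j in range(L)]


def causal_conv(K, u):
    """Direct O(L^2) causal convolution: y_k = sum_{j=0}^{k} K[j] * u[k - j]."""
    return [sum(K[j] * u[k - j] for j in range(k + 1)) for k in range(len(u))]


# Hypothetical shift construction: with lam_n = exp(2*pi*i*n/L), B_n = 1 and
# C_n = exp(-2*pi*i*n*s/L) / L, the kernel is 1 at j == s and 0 elsewhere in [0, L).
L, s = 8, 3
lam = [cmath.exp(2j * math.pi * n / L) for n in range(L)]
B = [1.0] * L
C = [cmath.exp(-2j * math.pi * n * s / L) / L for n in range(L)]
u = [float(k + 1) for k in range(L)]  # input 1, 2, ..., 8

K = dlr_kernel(lam, B, C, L)
y_rec = dlr_recurrent(lam, B, C, u)
y_conv = causal_conv(K, u)
print([round(v, 6) for v in y_rec])
```

Because $K$ is fixed once the parameters are set, every input is shifted by the same $s$; a shift whose size depends on the input would require a different kernel per input, which is consistent with the context-dependent failure mode described above.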
