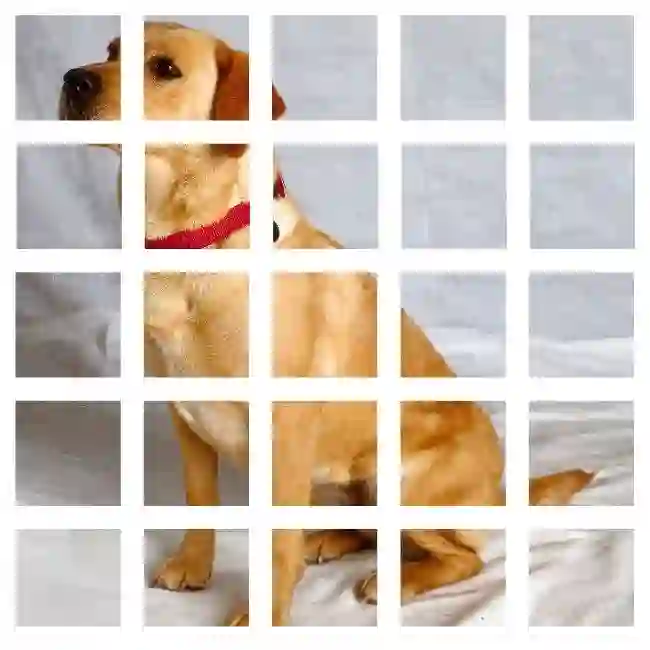

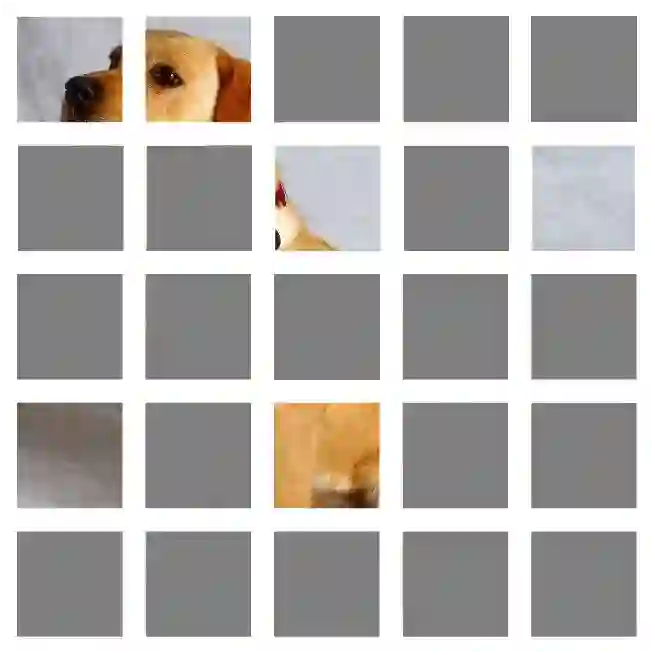

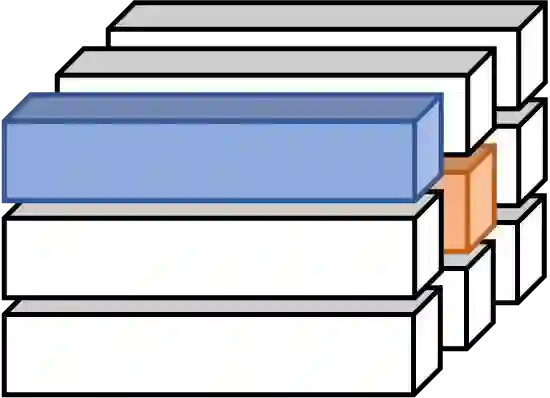

As self-supervised visual representation learning has moved from contrastive learning to masked image modeling, its essence has not changed significantly: the central question is still how to design proper pretext tasks for vision dictionary look-up. Masked image modeling has recently come to dominate this line of research, with state-of-the-art performance on vision Transformers; its core idea is to enhance the network's ability to capture patch-level visual context through a denoising auto-encoding mechanism. Rather than tailoring image tokenizers with extra training stages, as previous works do, we unleash the potential of contrastive learning for denoising auto-encoding and introduce a new pre-training method, ConMIM, which uses simple intra-image, inter-patch contrastive constraints as the learning objectives for masked patch prediction. We further strengthen the denoising mechanism with asymmetric designs, including asymmetric image perturbations and asymmetric model progress rates, to improve network pre-training. ConMIM-pretrained vision Transformers of various scales achieve promising results on downstream image classification, semantic segmentation, object detection, and instance segmentation tasks.
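
To make the idea of an intra-image, inter-patch contrastive constraint concrete, the following is a minimal NumPy sketch of an InfoNCE-style loss over the patches of a single image. It is an illustration under stated assumptions, not the ConMIM implementation: the function name `patch_contrastive_loss`, the cosine-similarity scoring, the temperature value, and the choice of taking target features from the unmasked image are all assumptions made here. For each masked position, the target feature at the same position is treated as the positive, and the target features of all other patches in the same image act as negatives.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row of x to unit Euclidean length."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), eps)


def patch_contrastive_loss(pred, target, mask, temperature=0.1):
    """InfoNCE-style loss over the patches of one image (illustrative only).

    pred:   (N, D) patch features predicted from the masked input.
    target: (N, D) patch features computed from the unmasked image
            (an assumption of this sketch).
    mask:   (N,) boolean array, True where the input patch was masked;
            at least one entry must be True.
    """
    q = l2_normalize(pred)
    k = l2_normalize(target)
    logits = q @ k.T / temperature                        # (N, N) scaled cosine similarity
    logits = logits - logits.max(axis=1, keepdims=True)   # for numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    per_patch = -np.diag(log_prob)                        # positive = same patch position
    return per_patch[mask].mean()                         # average over masked patches only


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    num_patches, dim = 196, 64                 # hypothetical 14x14 patch grid
    target = rng.normal(size=(num_patches, dim))
    pred = target + 0.5 * rng.normal(size=(num_patches, dim))  # noisy predictions
    mask = rng.random(num_patches) < 0.75      # hypothetical masking ratio
    print(patch_contrastive_loss(pred, target, mask))
```

The loss is small when each masked patch's prediction is closer to its own target than to any other patch of the same image, and it grows when predictions are confused with neighbouring patches. The asymmetric perturbations and model progress rates mentioned in the abstract are not modeled in this sketch.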
