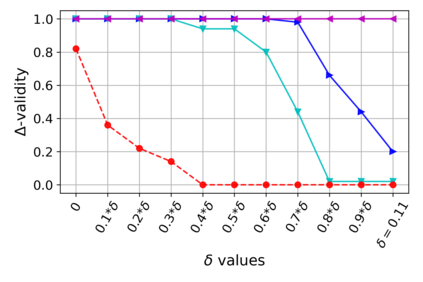

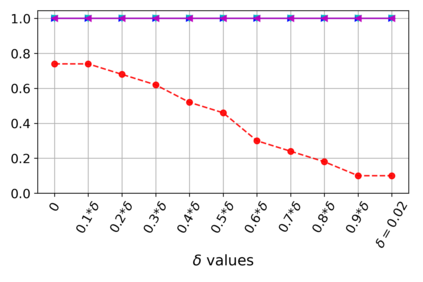

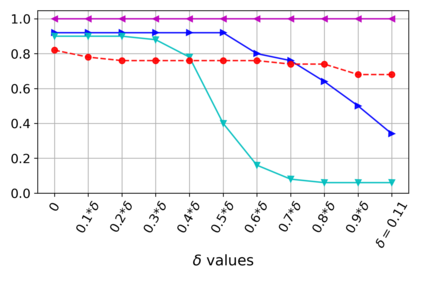

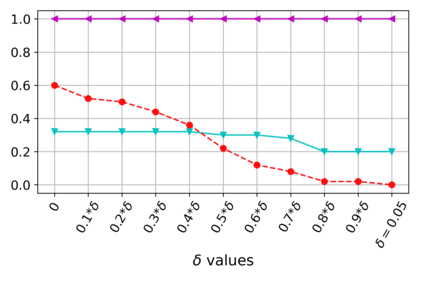

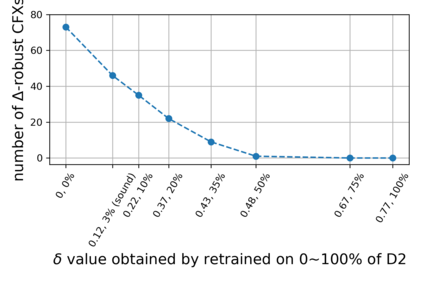

Counterfactual explanations (CFXs) are an increasingly popular strategy for explaining machine learning models. However, recent studies have shown that these explanations may not be robust to changes in the underlying model (e.g., following retraining), which raises questions about their reliability in real-world applications. Existing attempts to solve this problem are heuristic, and the robustness of the resulting CFXs to model changes is evaluated on only a small number of retrained models, so no exhaustive guarantees are provided. To remedy this, we propose Δ-robustness, the first notion to formally and deterministically assess the robustness (to model changes) of CFXs for neural networks. We introduce an abstraction framework based on interval neural networks to verify the Δ-robustness of CFXs against a possibly infinite set of changes to the model parameters, i.e., weights and biases. We then demonstrate the utility of this approach in two distinct ways. First, we analyse the Δ-robustness of a number of CFX generation methods from the literature and show that all of them exhibit significant deficiencies in this regard. Second, we demonstrate how embedding Δ-robustness within existing methods can provide CFXs that are provably robust.
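
To make the interval idea concrete, the following is a minimal sketch and not the verification procedure used in the paper. It assumes a small fully connected ReLU network with a single output logit, where a positive logit means the CFX receives the desired class, and it assumes that Δ is modelled as every weight and bias moving by at most `delta` from its trained value. The function names (`interval_mul`, `output_bounds`, `is_delta_robust`) and the toy network are hypothetical. Plain interval arithmetic is sound but can be loose, so a `False` result here means "not certified" rather than "not robust".

```python
def interval_mul(a_lo, a_hi, b_lo, b_hi):
    """Bounds of the product of two intervals [a_lo, a_hi] * [b_lo, b_hi]."""
    products = (a_lo * b_lo, a_lo * b_hi, a_hi * b_lo, a_hi * b_hi)
    return min(products), max(products)


def output_bounds(layers, x, delta):
    """Propagate a point x through an interval network.

    layers: list of (W, b), with W a list of rows and b a list of biases.
    Every parameter p is replaced by the interval [p - delta, p + delta]
    (an assumed perturbation model). ReLU is applied on hidden layers only.
    """
    lo, hi = list(x), list(x)  # the input is a point, so both bounds coincide
    for idx, (W, b) in enumerate(layers):
        new_lo, new_hi = [], []
        for row, bias in zip(W, b):
            s_lo, s_hi = bias - delta, bias + delta
            for w, x_lo, x_hi in zip(row, lo, hi):
                p_lo, p_hi = interval_mul(w - delta, w + delta, x_lo, x_hi)
                s_lo += p_lo
                s_hi += p_hi
            new_lo.append(s_lo)
            new_hi.append(s_hi)
        if idx < len(layers) - 1:  # ReLU is monotone, so apply it to both bounds
            new_lo = [max(0.0, v) for v in new_lo]
            new_hi = [max(0.0, v) for v in new_hi]
        lo, hi = new_lo, new_hi
    return lo, hi


def is_delta_robust(layers, cfx, delta):
    """Certify that the output logit stays positive for all perturbed models."""
    lo, _ = output_bounds(layers, cfx, delta)
    return lo[0] > 0.0


# Hypothetical toy network: 2 inputs -> 2 hidden ReLU units -> 1 output logit.
layers = [
    ([[1.0, -1.0], [0.5, 1.0]], [0.0, -0.5]),
    ([[1.0, 1.0]], [-0.5]),
]
cfx = [2.0, 1.0]

for delta in (0.0, 0.1, 0.5):
    print(delta, is_delta_robust(layers, cfx, delta))
```

Because the bounds cover every parameter setting inside the intervals, a single pass certifies the CFX against infinitely many perturbed models, which is the kind of exhaustive guarantee that sampling a few retrained models cannot give.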
