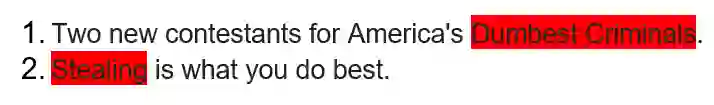

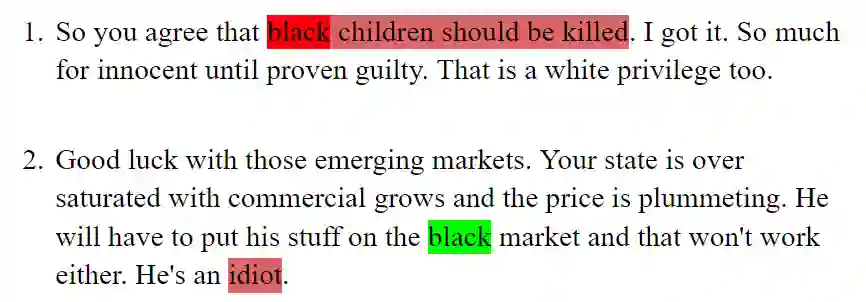

Social network platforms are generally used to share positive, constructive, and insightful content. In recent times, however, people are often exposed to objectionable content such as threats, identity attacks, hate speech, insults, obscene text, offensive remarks, and bullying. Existing work on toxic speech detection focuses on binary classification or on distinguishing among a small set of toxic speech categories. This paper describes the system proposed by team Cisco for SemEval-2021 Task 5: Toxic Spans Detection, the first shared task focused on detecting the spans of English text that contribute to its toxicity. We approach this problem in two main ways: a sequence tagging approach and a dependency parsing approach. In the sequence tagging approach, we tag each token in a sentence under a particular tagging scheme. Our best-performing architecture under this approach was also our best overall, achieving an F1 score of 0.6922 and placing us 7th on the final evaluation phase leaderboard. We also explore a dependency parsing approach, in which we extract spans from the input sentence under the supervision of target span boundaries and rank them using a biaffine model. Finally, we provide a detailed analysis of our results and model performance.
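A sequence tagging approach to this task has to map span annotations onto per-token tags for training and map predicted tags back to spans for scoring. The sketch below illustrates one way to do that, under several assumptions not stated in the abstract: annotations are sets of toxic character offsets, tokens come from a simple regex tokenizer, and the tagging scheme is BIO. It also computes a per-post character-offset F1 averaged over posts, assuming that is how the leaderboard score is defined (including the convention that an empty prediction for a post with no toxic spans scores 1.0). All function names (`tokenize`, `offsets_to_bio`, `bio_to_offsets`, `span_f1`, `mean_f1`) are hypothetical and do not come from the team's system.

```python
import re
from typing import List, Sequence, Set, Tuple

# Assumption: a simple regex tokenizer (runs of word characters, or single punctuation marks).
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def tokenize(text: str) -> List[Tuple[str, int, int]]:
    """Return (token, start, end) triples with an exclusive end offset."""
    return [(m.group(), m.start(), m.end()) for m in TOKEN_RE.finditer(text)]


def offsets_to_bio(text: str, toxic: Set[int]) -> List[Tuple[str, str]]:
    """Convert toxic character offsets into token-level BIO tags (assumed scheme)."""
    tagged = []
    prev_end, prev_toxic = None, False
    for token, start, end in tokenize(text):
        is_toxic = any(i in toxic for i in range(start, end))
        if not is_toxic:
            tag = "O"
        elif prev_toxic and all(i in toxic for i in range(prev_end, start)):
            # Continues the previous span: the characters between the two tokens are toxic too.
            tag = "I"
        else:
            tag = "B"
        tagged.append((token, tag))
        prev_end, prev_toxic = end, is_toxic
    return tagged


def bio_to_offsets(text: str, tags: Sequence[str]) -> Set[int]:
    """Map predicted BIO tags back to a set of toxic character offsets."""
    offsets: Set[int] = set()
    prev_end = None
    for (_, start, end), tag in zip(tokenize(text), tags):
        if tag == "I" and prev_end is not None:
            offsets.update(range(prev_end, start))  # fill the gap inside a multi-token span
        if tag in ("B", "I"):
            offsets.update(range(start, end))
            prev_end = end
        else:
            prev_end = None
    return offsets


def span_f1(pred: Set[int], gold: Set[int]) -> float:
    """Character-offset F1 for a single post (assumed evaluation convention)."""
    if not pred and not gold:
        return 1.0  # both empty: counted as a perfect match
    if not pred or not gold:
        return 0.0
    tp = len(pred & gold)
    if tp == 0:
        return 0.0
    precision, recall = tp / len(pred), tp / len(gold)
    return 2 * precision * recall / (precision + recall)


def mean_f1(preds: Sequence[Set[int]], golds: Sequence[Set[int]]) -> float:
    """Average the per-post F1 over all posts."""
    return sum(span_f1(p, g) for p, g in zip(preds, golds)) / len(golds)


if __name__ == "__main__":
    text = "you are a stupid idiot!"
    gold = set(range(10, 22))  # character offsets of "stupid idiot"
    tags = offsets_to_bio(text, gold)
    print(tags)
    # [('you', 'O'), ('are', 'O'), ('a', 'O'), ('stupid', 'B'), ('idiot', 'I'), ('!', 'O')]
    print(bio_to_offsets(text, [t for _, t in tags]) == gold)  # True
    print(round(span_f1(set(range(10, 16)), gold), 4))  # 0.6667 (only "stupid" predicted)
```

Filling the whitespace between consecutive `I`-tagged tokens lets a multi-word span survive the round trip intact. Without that step, a prediction of "stupid idiot" would lose the space character and be penalized under a character-offset metric.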

翻译:社会网络平台通常用于分享积极、建设性和有见地的内容。然而,在近期,人们往往暴露于威胁、身份攻击、仇恨言论、侮辱、淫秽文字、攻击性言论或欺凌等令人厌恶的内容中,例如威胁、身份攻击、仇恨言论、侮辱、淫秽文字、攻击性言论或欺凌。关于有毒言论检测的现有工作侧重于二进制分类,或将有毒言论分为小类。本文描述了Cisco团队为SemEval-2021任务5:有毒西班牙人检测(SemEval-2021任务5:有毒西班牙人检测)提议的系统,这是第一个共同任务,重点是用英语探测文本中与其毒性有关的范围。我们主要以两种方式处理这一问题:一种顺序标记方法和依赖性分辨方法。在我们的顺序标记方法中,我们将每个标注标记在特定的标记计划下。我们这一方法中的最佳运作架构也被证明是我们总体运作的最佳架构,F1分为0.6922,从而将我们置于最后评价阶段领导板上。我们还探索一种依赖性区分方法,即我们从目标边界监督下的投入句中抽取出分,并用双臂模型排列我们的屁股。我们的文件模型提供详细的业绩分析。