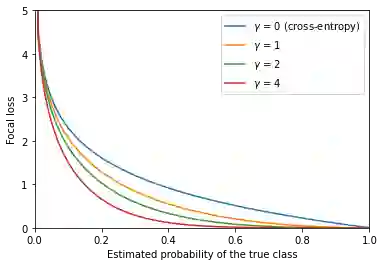

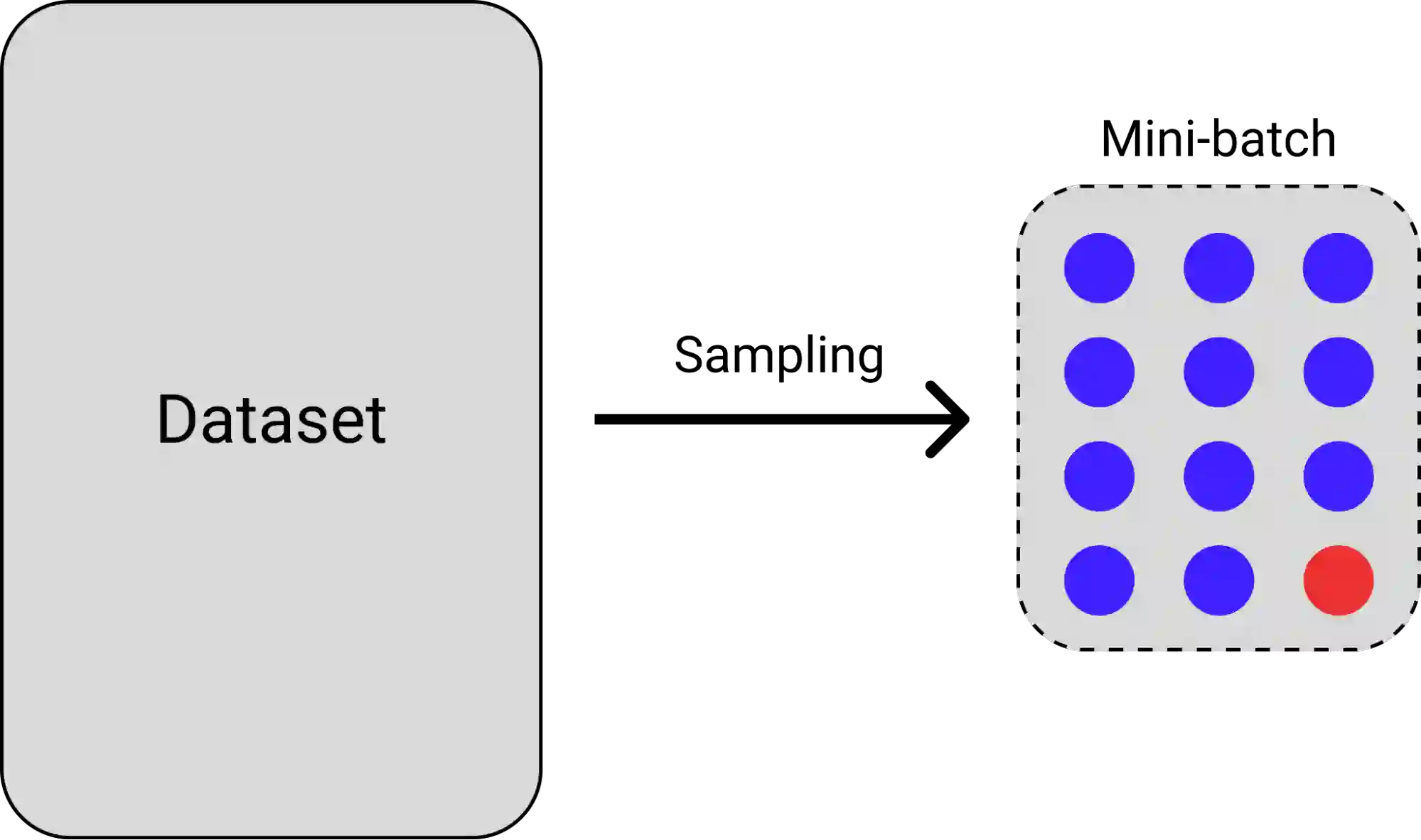

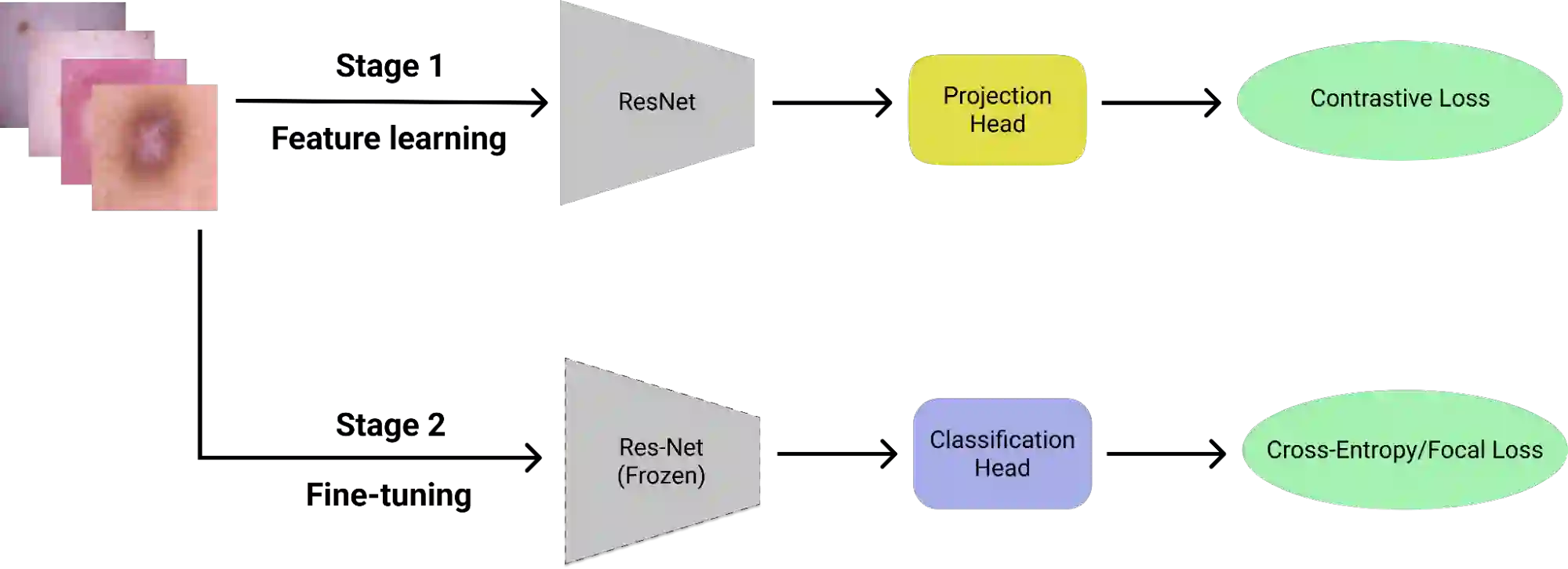

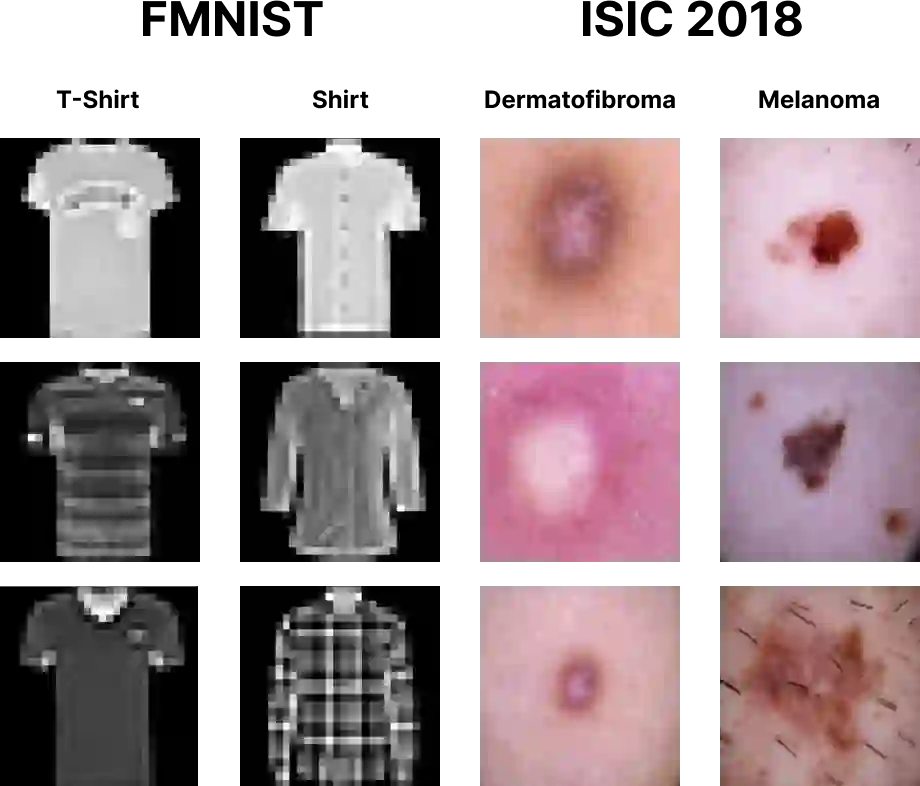

Contrastive learning is a representation learning method that contrasts a sample with other samples so that similar samples are brought close together, forming clusters in the feature space. The learning process typically uses a two-stage training architecture, with the contrastive loss (CL) driving feature learning. Contrastive learning has been shown to be quite successful in handling imbalanced datasets, in which some classes are overrepresented while others are underrepresented. However, previous studies have not specifically modified CL for imbalanced datasets. In this work, we introduce an asymmetric version of CL, referred to as ACL, to directly address the problem of class imbalance. In addition, we propose the asymmetric focal contrastive loss (AFCL) as a further generalization of both ACL and the focal contrastive loss (FCL). Results on the imbalanced FMNIST and ISIC 2018 datasets show that AFCL can outperform CL and FCL in terms of both weighted and unweighted classification accuracies. In the appendix, we provide a full axiomatic treatment of entropy, along with complete proofs.
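To make the clustering mechanism concrete, here is a minimal pure-Python sketch of a first-stage loss. It assumes the standard supervised contrastive form: a softmax over temperature-scaled cosine similarities, averaged over each anchor's same-class positives. The `gamma` parameter is an illustrative assumption. It multiplies each positive term by a focal-style factor (1 - p)^γ, in the spirit of focal loss, and is not necessarily the exact FCL or AFCL definition. The asymmetric term of ACL is not modeled. The names `contrastive_loss`, `temperature`, and `gamma` are hypothetical.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a feature vector to unit L2 norm so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def contrastive_loss(features: Sequence[Sequence[float]],
                     labels: Sequence[int],
                     temperature: float = 0.1,
                     gamma: float = 0.0) -> float:
    """Batch-averaged supervised contrastive loss (a sketch, not the paper's code).

    gamma = 0 gives the plain supervised contrastive loss. gamma > 0 applies a
    hypothetical focal-style weight (1 - p)**gamma to each positive pair, which
    down-weights positives that are already well clustered (high p).
    """
    n = len(features)
    if n < 2:
        return 0.0  # no other samples to contrast against
    z = [_normalize(f) for f in features]
    total, anchors = 0.0, 0
    for i in range(n):
        others = [j for j in range(n) if j != i]
        # Temperature-scaled similarity between anchor i and every other sample.
        logits = {j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
                  for j in others}
        # Numerically stable log-sum-exp: the softmax denominator over all non-anchors.
        m = max(logits.values())
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits.values()))
        positives = [j for j in others if labels[j] == labels[i]]
        if not positives:
            continue  # anchors without a same-class partner in the batch are skipped
        anchor_loss = 0.0
        for p in positives:
            log_prob = logits[p] - log_denom   # log softmax probability of the positive
            prob = math.exp(log_prob)
            weight = max(0.0, 1.0 - prob) ** gamma  # equals 1.0 when gamma == 0
            anchor_loss += -weight * log_prob
        total += anchor_loss / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


# Usage: two tight clusters whose labels match the geometry.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
clustered = contrastive_loss(features, [0, 0, 1, 1])
mismatched = contrastive_loss(features, [0, 1, 0, 1])
focal = contrastive_loss(features, [0, 0, 1, 1], gamma=2.0)
print(clustered, mismatched, focal)
```

Labels that agree with the feature clusters yield a much lower loss than labels that pair distant samples, which is the pressure that pulls same-class samples together. Because the focal-style weight never exceeds 1, setting `gamma > 0` can only lower each positive term, concentrating the gradient on poorly clustered pairs.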

翻译:对比性学习是一种代表式学习方法,通过将抽样与其他类似抽样进行比较,将它们紧密地结合在一起,形成特征空间中的集群。学习过程通常使用两阶段培训结构进行,并使用对比性损失(CL)进行特征学习。对比性学习已证明在处理不平衡数据集方面相当成功,在其中某些类别任职人数过多,而另一些类别任职人数偏低。然而,以前的研究没有具体修改关于不平衡数据集的CL。在这项工作中,我们引入了被称为ACL的不对称CL版本,以直接解决阶级不平衡问题。此外,我们提议将不对称焦点对比性损失(AFCL)作为ACL和焦点对比性损失(FCL)的进一步概括。FMNIST和ISICE 2018不平衡数据集的结果显示,AFCLC在加权和未加权分类方面都能够超过CL和FCL。在附录中,我们提供了一种完全的证据,同时对摄取的完全的氧化处理。