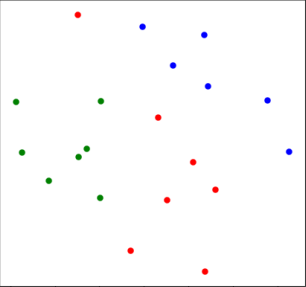

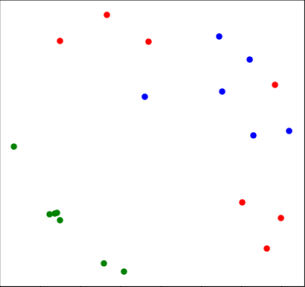

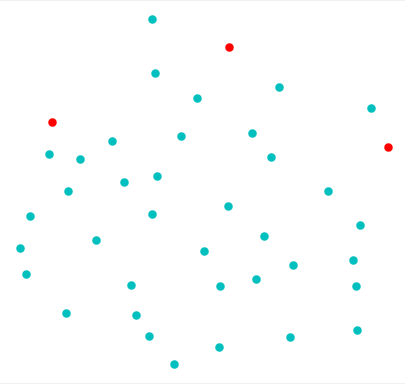

Existing zero-shot learning (ZSL) methods can suffer from vague class attributes that overlap heavily across classes. Unlike methods that ignore the discrimination among classes, in this paper we propose to classify unseen images by rectifying the semantic space under the guidance of the visual space. First, we pre-train a Semantic Rectifying Network (SRN) to rectify the semantic space with a semantic loss and a rectifying loss. Then, we build a Semantic Rectifying Generative Adversarial Network (SR-GAN) to generate plausible visual features of unseen classes from both the semantic features and the rectified semantic features. To guarantee the effectiveness of the rectified semantic features and the synthetic visual features, we propose a pre-reconstruction network and a post-reconstruction network, which keep the visual and semantic features consistent. Experimental results demonstrate that our approach significantly outperforms the state of the art on four benchmark datasets.
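The data flow described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the random linear maps stand in for trained networks, the noise input is the usual GAN convention rather than something the abstract states, and all names and dimensions (`rectifier`, `generator`, `SEM_DIM`, and so on) are hypothetical. The losses and the two reconstruction networks are omitted because the abstract does not define them.

```python
import random

# Hypothetical feature sizes, chosen only for illustration.
SEM_DIM, RECT_DIM, NOISE_DIM, VIS_DIM = 85, 64, 16, 128

rng = random.Random(0)


def linear(in_dim, out_dim):
    """Return a fixed random linear map (a stand-in for a trained layer)."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)]
               for _ in range(out_dim)]

    def apply(x):
        return [sum(w * v for w, v in zip(row, x)) for row in weights]

    return apply


def relu(vec):
    """Clamp negative entries to zero."""
    return [max(0.0, v) for v in vec]


# Assumption: the SRN maps a class attribute vector to a rectified one.
rectifier = linear(SEM_DIM, RECT_DIM)
# Assumption: the generator is conditioned on noise, the original semantic
# feature, and the rectified semantic feature, concatenated together.
generator = linear(NOISE_DIM + SEM_DIM + RECT_DIM, VIS_DIM)


def synthesize(semantic, n_samples):
    """Generate synthetic visual features for one unseen class."""
    rectified = rectifier(semantic)
    samples = []
    for _ in range(n_samples):
        noise = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]
        samples.append(relu(generator(noise + semantic + rectified)))
    return samples


unseen_class_attributes = [rng.random() for _ in range(SEM_DIM)]
fake_features = synthesize(unseen_class_attributes, n_samples=5)
print(len(fake_features), len(fake_features[0]))
```

In generative ZSL pipelines of this kind, the synthetic features are typically used to train an ordinary classifier over the unseen classes; whether the paper does exactly that is not stated in the abstract.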
