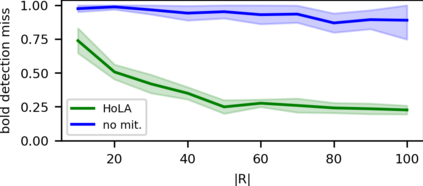

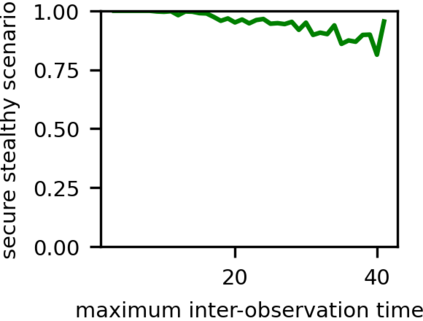

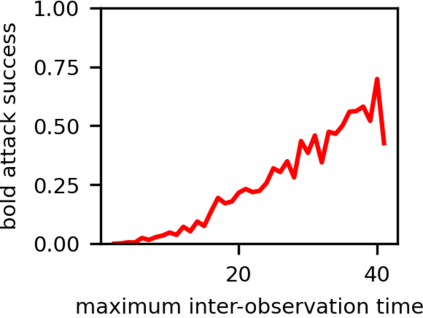

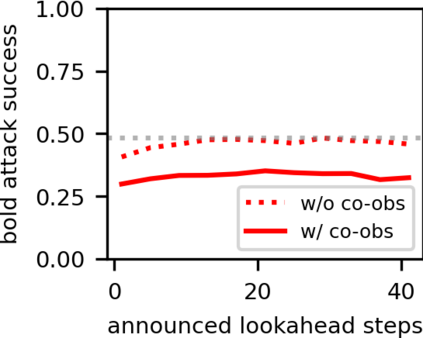

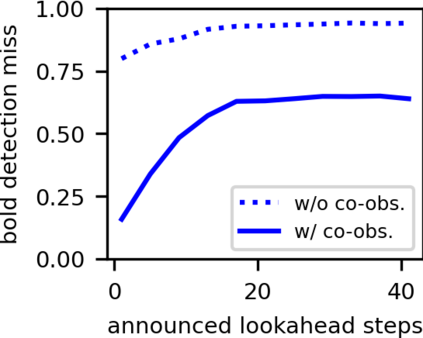

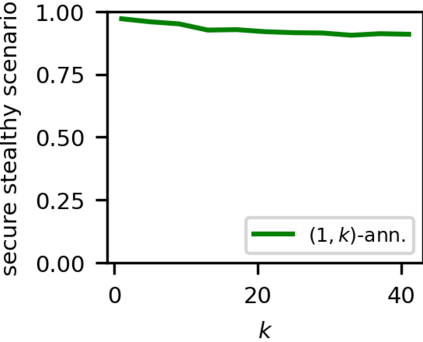

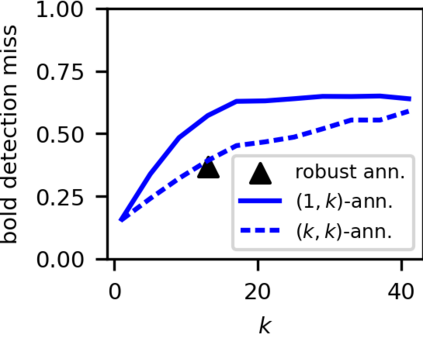

Emerging multi-robot systems rely on cooperation between humans and robots, with robots following automatically generated motion plans to service application-level tasks. Given the safety requirements associated with operating in proximity to humans and expensive infrastructure, it is important to understand and mitigate the security vulnerabilities of such systems caused by compromised robots that diverge from their assigned plans. We focus on centralized systems, where a *central entity* (CE) is responsible for determining and transmitting the motion plans to the robots, which report their locations as they move along the plan. The CE checks that robots follow their assigned plans by comparing their expected locations to the locations they self-report. We show that this self-reporting monitoring mechanism is vulnerable to *plan-deviation attacks*, in which compromised robots deviate from their assigned plans while concealing their movement by misreporting their location. We propose a two-pronged mitigation for plan-deviation attacks: (1) an attack detection technique leveraging both the robots' local sensing capabilities to report observations of other robots and *co-observation schedules* generated by the CE, and (2) a prevention technique where the CE issues *horizon-limiting announcements* to the robots, reducing their instantaneous knowledge of forward lookahead steps in the global motion plan. On a large-scale automated warehouse benchmark, we show that our solution enables attack prevention guarantees against a stealthy attacker that has compromised multiple robots.
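The gap between self-report monitoring and co-observation checking can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the grid-cell plan representation, the one-cell sensing radius in `in_range`, the way the schedule is derived directly from the plan, and all function names. A compromised robot that reports its planned cells passes the self-report check, but an honest neighbor that fails to see it where the schedule says it should be exposes the deviation.

```python
from typing import Dict, List, Set, Tuple

Cell = Tuple[int, int]
Plan = Dict[str, List[Cell]]          # robot id -> cell at each timestep
Sighting = Tuple[int, str, str]       # (timestep, observer, observed)


def self_report_violations(plan: Plan, reported: Plan) -> Set[str]:
    """Robots whose self-reported cell differs from the planned cell at some step."""
    return {
        robot
        for robot, path in plan.items()
        if any(p != r for p, r in zip(path, reported[robot]))
    }


def in_range(a: Cell, b: Cell, radius: int = 1) -> bool:
    """Hypothetical sensing model: Chebyshev distance of at most `radius` cells."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1])) <= radius


def co_observation_schedule(plan: Plan) -> Set[Sighting]:
    """Sightings that must occur if every robot follows the plan (assumed derivation)."""
    robots = sorted(plan)
    horizon = min(len(path) for path in plan.values())
    return {
        (t, a, b)
        for t in range(horizon)
        for a in robots
        for b in robots
        if a != b and in_range(plan[a][t], plan[b][t])
    }


def gather_observations(truth: Plan, schedule: Set[Sighting],
                        compromised: Set[str]) -> Set[Sighting]:
    """Honest observers report what they really see; compromised ones claim everything."""
    return {
        (t, a, b)
        for (t, a, b) in schedule
        if a in compromised or in_range(truth[a][t], truth[b][t])
    }


# Planned paths: r1 and r2 travel side by side, r3 idles far away.
plan: Plan = {
    "r1": [(0, 0), (1, 0), (2, 0)],
    "r2": [(0, 1), (1, 1), (2, 1)],
    "r3": [(5, 5), (5, 5), (5, 5)],
}
# r2 is compromised: it leaves its path after t=0 but reports the planned cells.
truth: Plan = {**plan, "r2": [(0, 1), (1, 3), (2, 3)]}
reported: Plan = plan

schedule = co_observation_schedule(plan)
observations = gather_observations(truth, schedule, compromised={"r2"})
missed = schedule - observations

print(self_report_violations(plan, reported))  # set(): the lie goes unnoticed
print(sorted(missed))  # [(1, 'r1', 'r2'), (2, 'r1', 'r2')]
```

In this toy setting the missed sightings only say that either the observer or the observed robot is misbehaving; attributing blame, and handling several colluding robots, requires the scheduling guarantees the abstract refers to.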
