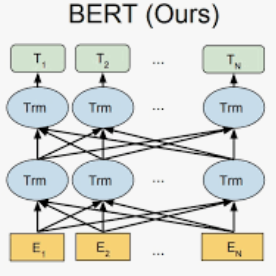

Bidirectional Encoder Representations from Transformers (BERT)~\cite{devlin-etal-2019-bert} has been one of the base models for many NLP tasks owing to its strong performance. Variants customized for different languages and tasks have been proposed to further improve performance. In this work, we investigate supervised continued pre-training~\cite{gururangan-etal-2020-dont} of BERT for the task of Chinese topic classification. Specifically, we incorporate prompt-based learning and contrastive learning into the pre-training. To adapt to Chinese topic classification, we collect around 2.1M pieces of Chinese data spanning various topics. The pre-trained Chinese Topic Classification BERTs (TCBERTs), with different parameter sizes, are open-sourced at \url{https://huggingface.co/IDEA-CCNL}.
