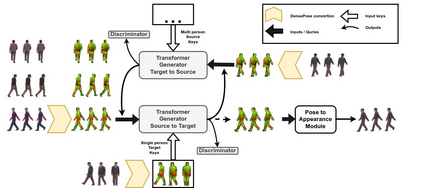

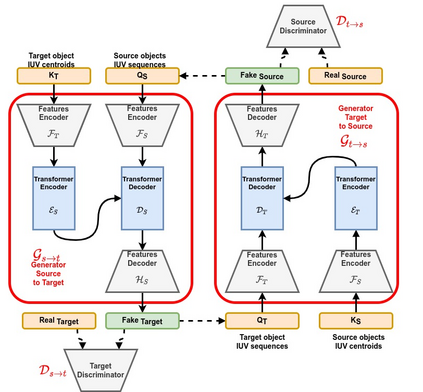

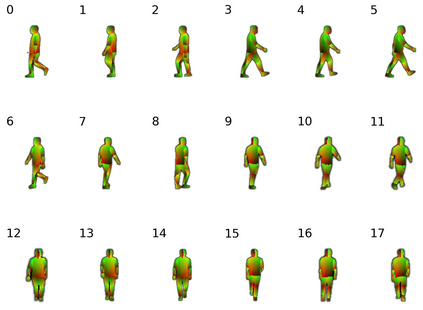

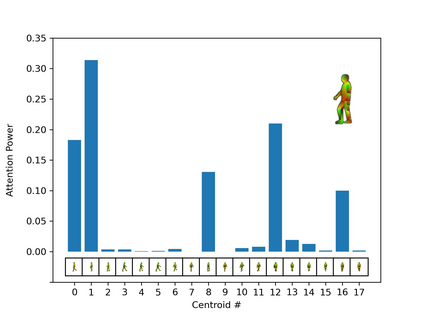

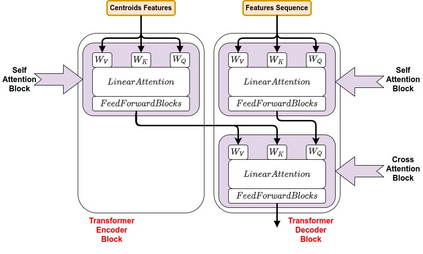

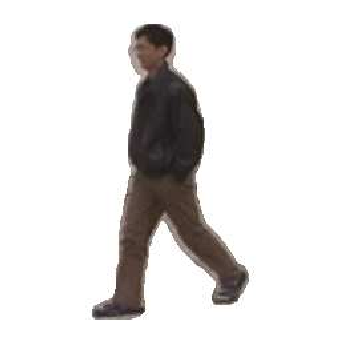

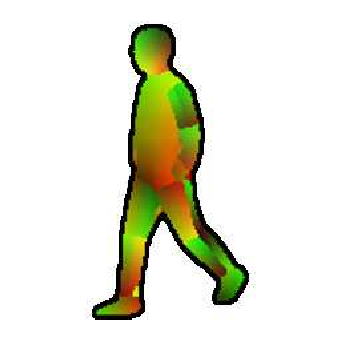

We address, for the first time, the problem of gait transfer. In contrast to motion transfer, the objective here is not to imitate the source's normal motions, but rather to transform the source's motion into a typical gait pattern for the target. Using gait recognition models, we demonstrate that existing techniques yield a discrepancy that can be easily detected. We introduce a novel model, Cycle Transformers GAN (CTrGAN), that can successfully generate the target's natural gait. CTrGAN's generators consist of an encoder and a decoder, both Transformers, in which attention operates in the temporal domain between complete images rather than in the spatial domain between patches. While recent Transformer studies in computer vision have mainly focused on discriminative tasks, we introduce an architecture that can be applied to synthesis tasks. Using a widely used gait recognition dataset, we demonstrate that our approach is capable of producing personalized gaits that are over an order of magnitude more realistic than those of existing methods, even for sources that were not available during training.
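The distinction between temporal attention over complete images and spatial attention over patches can be illustrated with a minimal sketch. It is not the CTrGAN implementation. It assumes each grayscale frame is flattened directly into one token vector (a real model would more likely embed frames with a learned image encoder) and uses a single attention head. The names `temporal_self_attention`, `flatten_frame`, `w_q`, `w_k` and `w_v` are hypothetical. Because every frame becomes one token, the attention matrix is T x T (frame to frame) rather than patch to patch within one image.

```python
import math
import random


def flatten_frame(frame):
    # Hypothetical helper: turn an H x W grayscale frame (list of rows) into
    # one flat vector, so the whole image becomes a single token.
    return [px for row in frame for px in row]


def matvec(matrix, vec):
    # Plain matrix-vector product: (rows x n) @ (n,) -> (rows,)
    return [sum(m * x for m, x in zip(row, vec)) for row in matrix]


def softmax(scores):
    # Numerically stable softmax over a list of scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_self_attention(frames, w_q, w_k, w_v):
    """Single-head scaled dot-product attention across time (illustrative only).

    Each of the T frames is one token, so the attention matrix is T x T
    (frame-to-frame) rather than patch-to-patch within a single image.
    w_q, w_k, w_v are hypothetical (D x H*W) projection matrices.
    """
    tokens = [flatten_frame(f) for f in frames]
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    # Row i holds how strongly frame i attends to every frame j.
    attn = [
        softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        for q in queries
    ]
    # Each output token is an attention-weighted mix of all frames' values.
    out = [
        [sum(w * v[c] for w, v in zip(row, values)) for c in range(d)]
        for row in attn
    ]
    return out, attn


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


# Usage: 4 random frames of 3x3 pixels, projected to an 8-dim embedding.
rng = random.Random(0)
T, H, W, D = 4, 3, 3, 8
frames = [[[rng.random() for _ in range(W)] for _ in range(H)] for _ in range(T)]
w_q, w_k, w_v = (random_matrix(D, H * W, rng) for _ in range(3))
out, attn = temporal_self_attention(frames, w_q, w_k, w_v)
print(len(attn), "x", len(attn[0]))  # 4 x 4
```

The weights here are random, so the sketch demonstrates only the shapes: a ViT-style encoder would attend over the patches of one image, whereas here the sequence axis is time and each output frame token mixes information from the whole gait sequence.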
