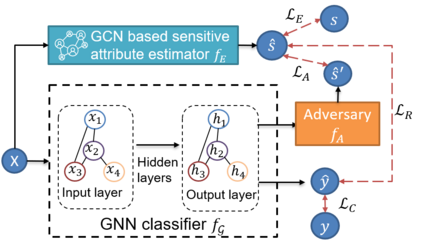

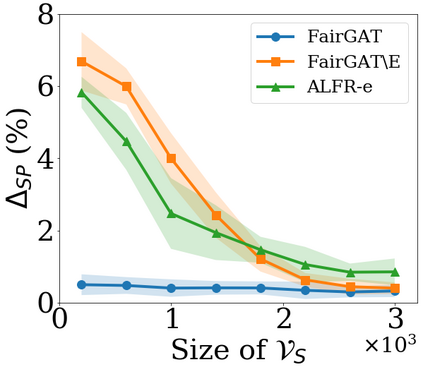

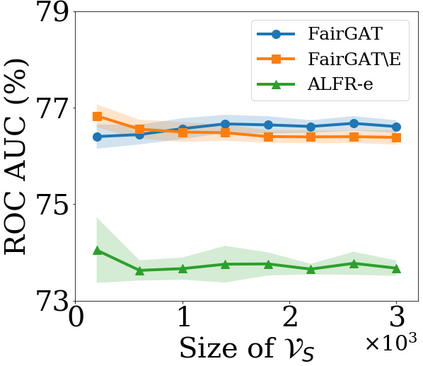

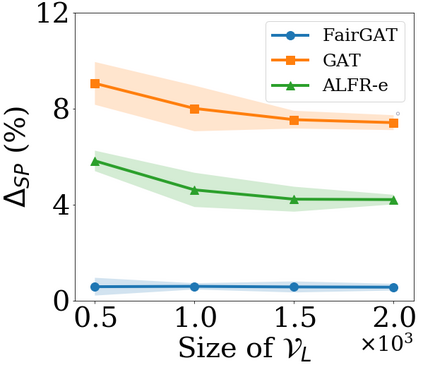

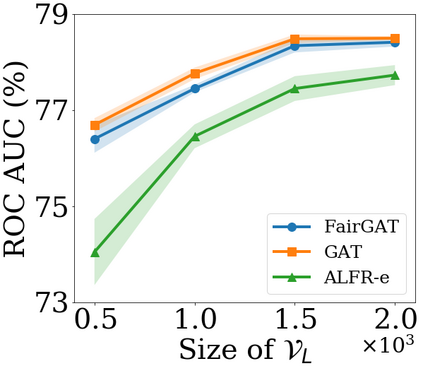

Graph neural networks (GNNs) have shown great power in modeling graph-structured data. However, like other machine learning models, GNNs may make predictions that are biased with respect to protected sensitive attributes, e.g., skin color and gender. This is because machine learning algorithms, including GNNs, are trained to reflect the distribution of the training data, which often contains historical bias towards sensitive attributes. In addition, the discrimination in GNNs can be magnified by graph structures and the message-passing mechanism. As a result, the application of GNNs in sensitive domains such as crime rate prediction would be largely limited. Although fair classification has been studied extensively on i.i.d. data, methods that address discrimination on non-i.i.d. data are rather limited. Furthermore, existing works rarely consider the practical scenario in which sensitive attributes are only sparsely annotated. Therefore, we study the novel and important problem of learning fair GNNs with limited sensitive attribute information. We propose FairGNN, which eliminates the bias of GNNs while maintaining high node classification accuracy by leveraging graph structures and the limited sensitive information. Our theoretical analysis shows that FairGNN can ensure the fairness of GNNs under mild conditions, given a limited number of nodes with known sensitive attributes. Extensive experiments on real-world datasets also demonstrate the effectiveness of FairGNN in debiasing while maintaining high accuracy.
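The claim that message passing can magnify bias can be illustrated with a toy example. The sketch below is not FairGNN. It assumes statistical parity difference, |P(ŷ=1 | s=0) − P(ŷ=1 | s=1)|, as the bias measure (the abstract does not name a metric). It computes the measure only over nodes whose sensitive attribute is annotated, with `None` marking an unknown attribute, to mirror the limited-annotation setting. It then applies one round of mean aggregation on a hypothetical, perfectly homophilous graph. The feature values, the graph, the 0.5 threshold, and all function names are illustrative assumptions.

```python
from typing import List, Optional, Sequence


def statistical_parity_difference(
    y_pred: Sequence[int], s: Sequence[Optional[int]]
) -> float:
    """|P(y_hat=1 | s=0) - P(y_hat=1 | s=1)|, computed only over nodes whose
    sensitive attribute is known (s[i] is None marks an unannotated node)."""
    group0 = [p for p, a in zip(y_pred, s) if a == 0]
    group1 = [p for p, a in zip(y_pred, s) if a == 1]
    if not group0 or not group1:
        raise ValueError("each sensitive group needs at least one annotated node")
    return abs(sum(group0) / len(group0) - sum(group1) / len(group1))


def mean_aggregate(x: Sequence[float], adj: List[List[int]]) -> List[float]:
    """One round of mean message passing with self-loops: each node averages
    its own feature with the features of its neighbours."""
    out = []
    for i, neigh in enumerate(adj):
        vals = [x[i]] + [x[j] for j in neigh]
        out.append(sum(vals) / len(vals))
    return out


def threshold(x: Sequence[float], t: float = 0.5) -> List[int]:
    """Hypothetical classifier: predict 1 when the (scalar) feature is >= t."""
    return [1 if v >= t else 0 for v in x]


# Hypothetical toy graph: two triangles whose edges stay within each
# sensitive group (perfect homophily).
s = [0, 0, 0, 1, 1, 1]
adj = [[1, 2], [0, 2], [0, 1], [4, 5], [3, 5], [3, 4]]
x = [0.9, 0.4, 0.6, 0.4, 0.6, 0.1]  # hypothetical node features

before = statistical_parity_difference(threshold(x), s)
after = statistical_parity_difference(threshold(mean_aggregate(x, adj)), s)
print(f"SP difference before aggregation: {before:.3f}")
print(f"SP difference after aggregation:  {after:.3f}")
```

On this toy data the difference rises from 0.333 to 1.000. Averaging within each group erases individual variation and leaves only the group-level gap. Real graphs are usually only partly homophilous, so the size of the effect will vary.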


Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem