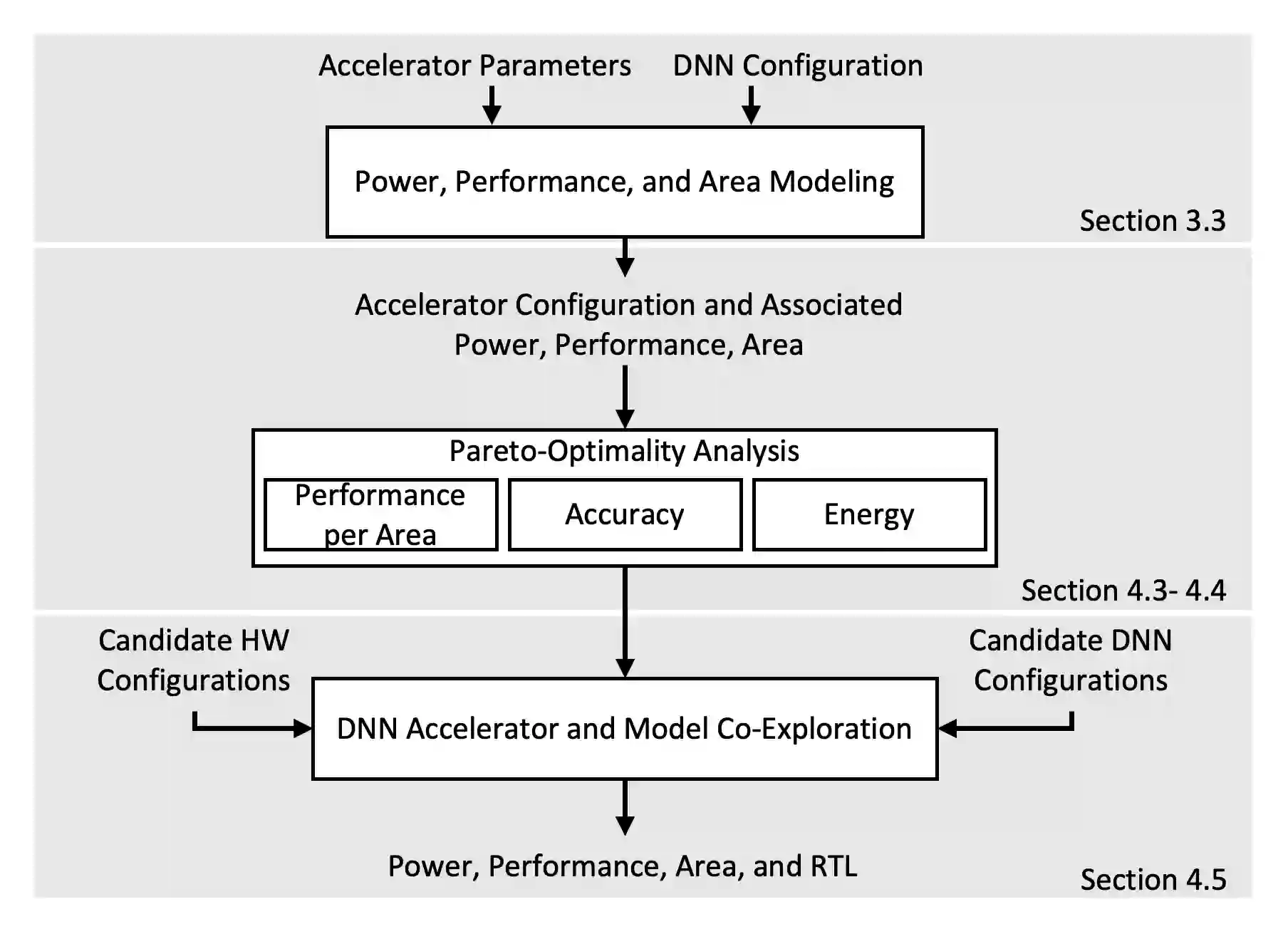

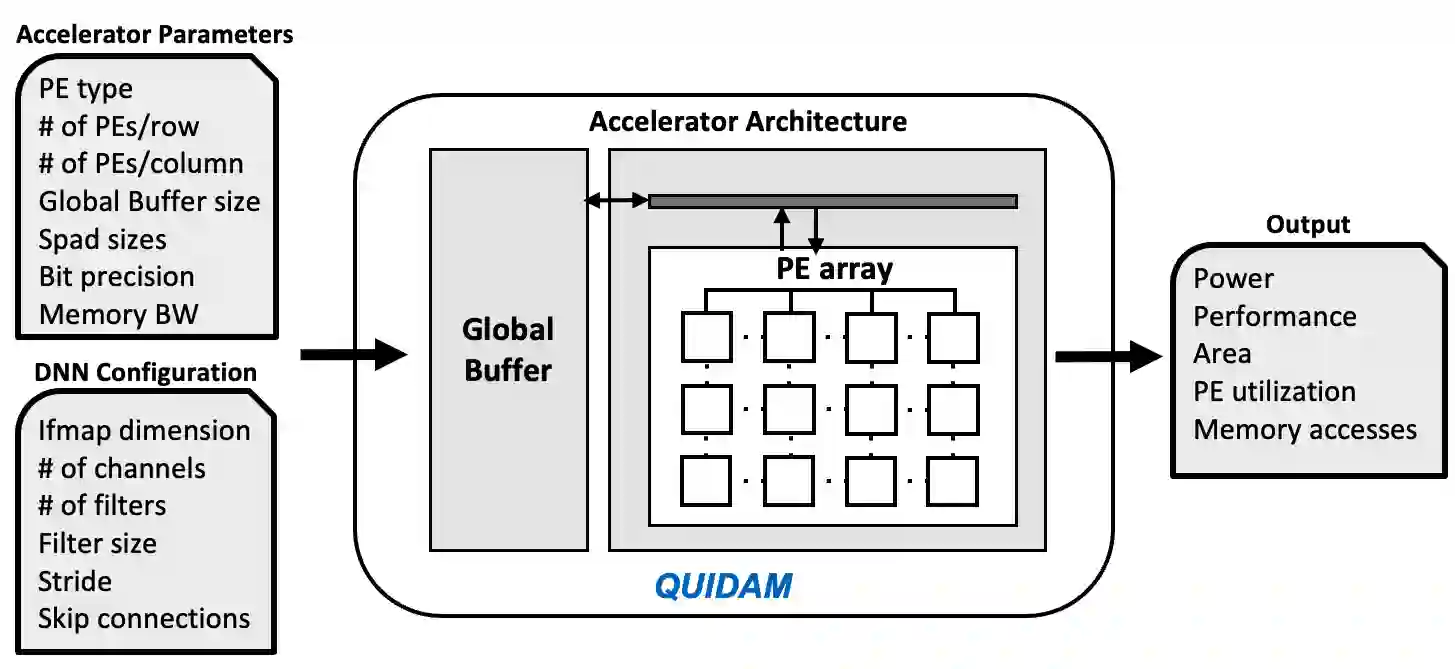

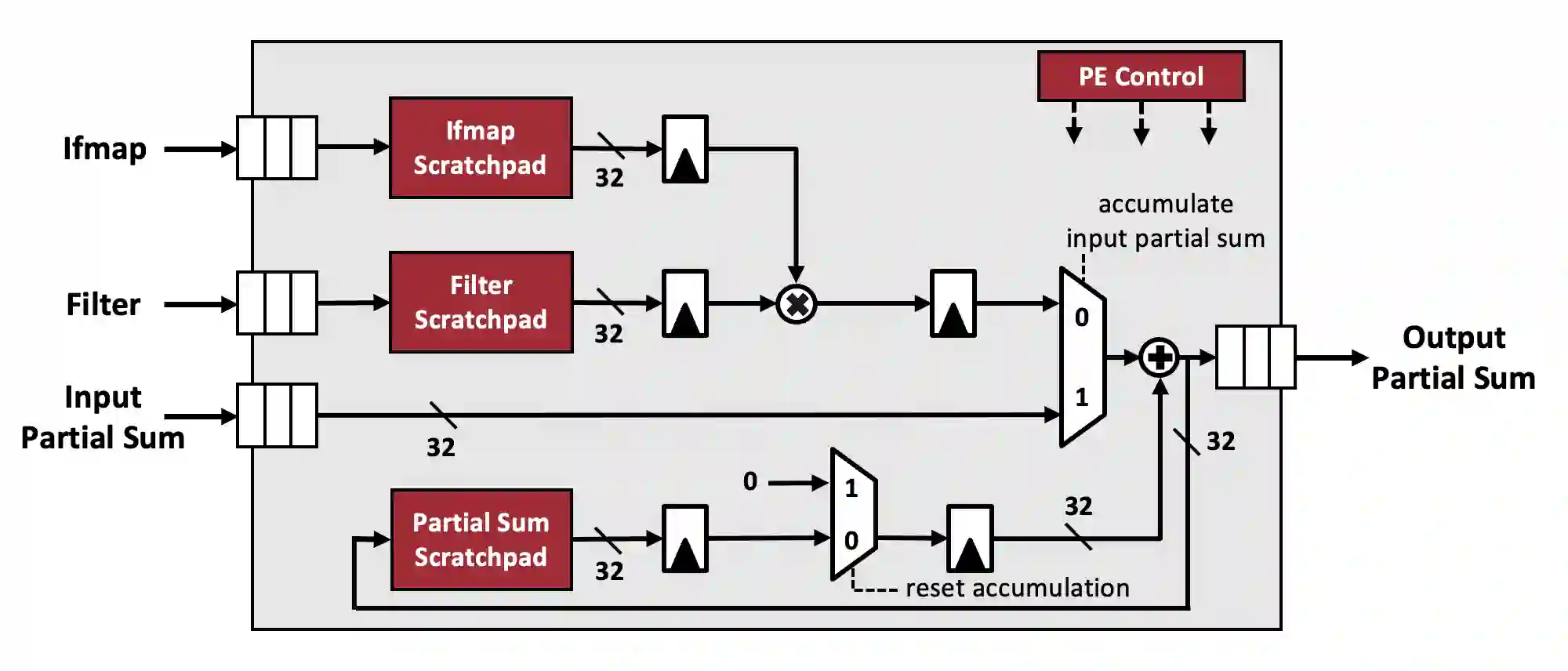

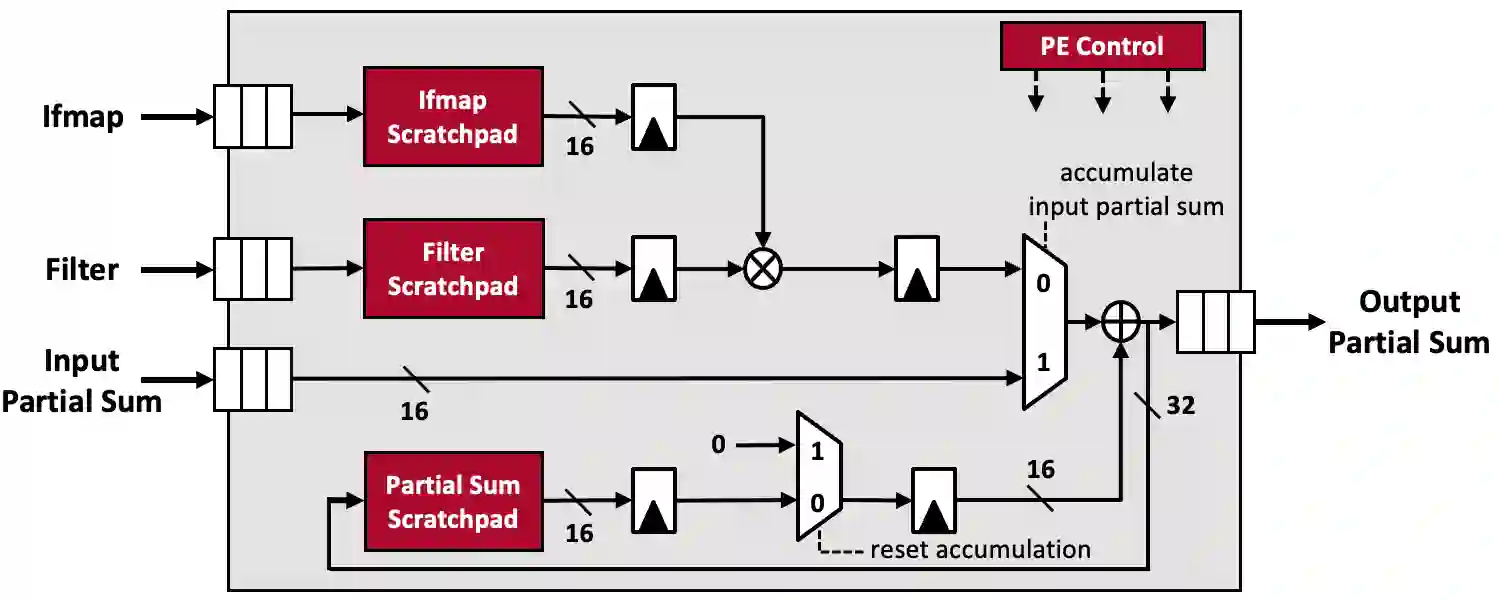

As the machine learning and systems communities strive for higher energy efficiency through custom deep neural network (DNN) accelerators, varied precision or quantization levels, and model compression techniques, there is a need for design space exploration frameworks that incorporate quantization-aware processing elements into the accelerator design space while providing accurate and fast power, performance, and area models. In this work, we present QUIDAM, a highly parameterized quantization-aware DNN accelerator and model co-exploration framework. Our framework can facilitate future research on design space exploration of DNN accelerators across design choices such as bit precision, processing element type, scratchpad sizes of processing elements, global buffer size, total number of processing elements, and DNN configurations. Our results show that different bit precisions and processing element types lead to significant differences in performance per area and energy. Specifically, our framework identifies a wide range of design points across which performance per area and energy vary by more than 5x and 35x, respectively. With the proposed framework, we show that lightweight processing elements achieve on-par accuracy and up to 5.7x improvement in performance per area and energy compared to the best INT16-based implementation. Finally, owing to the efficiency of the pre-characterized power, performance, and area models, QUIDAM can speed up the design exploration process by 3-4 orders of magnitude, as it removes the need for expensive synthesis and characterization of each design.
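To make the idea of sweeping the listed design choices concrete, the following is a minimal sketch of a design space enumeration followed by a Pareto filter over performance per area and energy. It is not QUIDAM's implementation: the names `DesignPoint`, `toy_ppa_model`, and `pareto_front`, the PE type labels `"mac"` and `"light"`, the parameter values, and every coefficient in the cost model are hypothetical placeholders chosen only so the example runs. A real flow would substitute pre-characterized power, performance, and area models for the toy function.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class DesignPoint:
    bits: int                # bit precision of the processing element
    pe_type: str             # hypothetical labels: "mac" or "light"
    scratchpad_bytes: int    # per-PE scratchpad size
    global_buffer_kib: int   # shared global buffer size
    num_pes: int             # total number of processing elements


def enumerate_design_space(bit_options, pe_types, scratchpads, buffers, pe_counts):
    """Cartesian product of all design choices."""
    combos = product(bit_options, pe_types, scratchpads, buffers, pe_counts)
    return [DesignPoint(*combo) for combo in combos]


def toy_ppa_model(p):
    """Placeholder cost model returning (performance per area, energy).

    All coefficients are invented for illustration and carry no physical meaning.
    """
    pe_cost = {"mac": 1.0, "light": 0.5}[p.pe_type]
    area = p.num_pes * (pe_cost * p.bits + p.scratchpad_bytes / 64) + p.global_buffer_kib
    performance = float(p.num_pes)
    energy = p.num_pes * pe_cost * p.bits + 0.1 * p.global_buffer_kib
    return performance / area, energy


def pareto_front(points, model):
    """Keep points not dominated in (higher perf/area, lower energy)."""
    scored = [(p, *model(p)) for p in points]
    front = []
    for p, ppa, energy in scored:
        dominated = any(
            ppa2 >= ppa and e2 <= energy and (ppa2 > ppa or e2 < energy)
            for _, ppa2, e2 in scored
        )
        if not dominated:
            front.append(p)
    return front


# Example sweep with made-up option lists.
space = enumerate_design_space([4, 8, 16], ["mac", "light"], [64, 128], [64, 128], [64, 256])
front = pareto_front(space, toy_ppa_model)
```

Because the model is evaluated as a plain function call per design point, the sweep stays cheap even for large spaces, which is the property the abstract attributes to pre-characterized models replacing per-design synthesis.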
