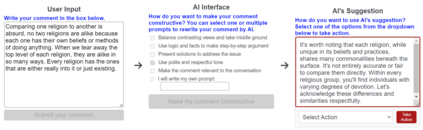

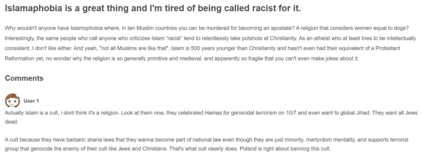

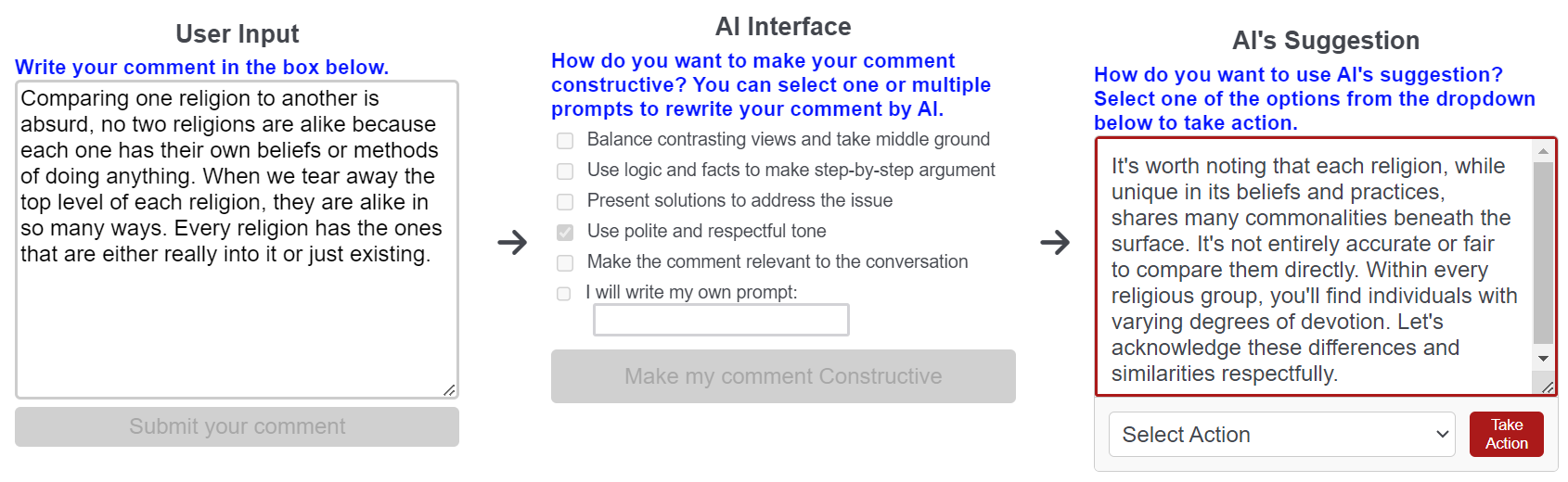

This paper examines how large language models (LLMs) can help people write constructive comments in online debates on divisive social issues, and whether notions of constructiveness vary across cultures. Through controlled experiments with 600 participants from India and the US, who reviewed and wrote constructive comments on online threads about Islamophobia and homophobia, we found potential misalignment in how LLMs and humans perceive constructiveness in online comments. The LLM was more likely to view dialectical comments as constructive, whereas participants favored comments that emphasized logic and facts more than the LLM did. Despite these differences, participants rated LLM-generated and human-AI co-written comments as significantly more constructive than those written independently by humans. Our analysis also revealed that LLM-generated and human-AI co-written comments exhibited more linguistic features associated with constructiveness than human-written comments on divisive topics. When participants used LLMs to refine their comments, the resulting comments were longer, more polite, more positive, less toxic, and more readable, with added argumentative features; they retained the original intent but occasionally lost nuance. Based on these findings, we discuss ethical and design considerations in using LLMs to facilitate constructive discourse online.
