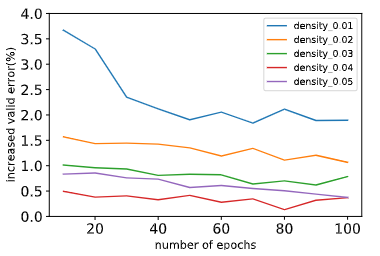

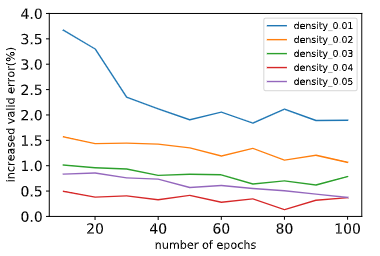

Modern deep neural networks (DNNs) are vulnerable to adversarial attacks, and adversarial training has been shown to be a promising method for improving their adversarial robustness. Pruning methods have been considered in the adversarial context to reduce model capacity and improve adversarial robustness simultaneously during training. Existing adversarial pruning methods generally mimic classical pruning methods for natural training, which follow a three-stage 'training-pruning-fine-tuning' pipeline. We observe that such pruning methods do not necessarily preserve the dynamics of dense networks, which can make the pruned network hard to fine-tune to compensate for the accuracy lost in pruning. Building on recent work on the *Neural Tangent Kernel* (NTK), we systematically study the dynamics of adversarial training and prove the existence of a trainable sparse sub-network at initialization that can be trained from scratch to be adversarially robust. This theoretically verifies the *lottery ticket hypothesis* in the adversarial context, and we refer to such a sub-network structure as an *Adversarial Winning Ticket* (AWT). We also show empirical evidence that AWT preserves the dynamics of adversarial training and achieves performance equal to that of dense adversarial training.
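To make the idea of training a sparse sub-network adversarially from scratch concrete, the following is a minimal sketch rather than the method described above. It assumes a linear classifier, labels in {-1, +1}, an L-infinity perturbation budget `eps`, and a mask fixed by hand at initialization; it does not attempt to select the mask by matching NTK dynamics. All function names (`fgsm_linear`, `adversarial_train_masked`, `robust_accuracy`, `make_toy_data`) are hypothetical and chosen only for illustration.

```python
import math
import random


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_linear(x, y, w, eps):
    """Worst-case L-infinity perturbation of x for a linear score w.x.

    For a linear model the margin y * (w . x) is minimized exactly by
    shifting each coordinate by eps against the label-weighted weight sign.
    Coordinates with zero weight (pruned) are left unchanged.
    """
    return [xi - eps * y * sign(wi) for xi, wi in zip(x, w)]


def adversarial_train_masked(data, mask, eps=0.1, lr=0.1, epochs=50, seed=0):
    """Adversarially train a masked linear classifier from scratch.

    The mask is applied at initialization and to every update, so pruned
    weights stay at zero throughout training. The mask here is an assumption
    supplied by the caller, not one derived from the NTK.
    """
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 0.1) * m for m in mask]
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm_linear(x, y, w, eps)
            margin = y * sum(wi * xi for wi, xi in zip(w, x_adv))
            # Clamp the margin so math.exp cannot overflow.
            margin = max(-50.0, min(50.0, margin))
            # Derivative of the logistic loss log(1 + exp(-margin)) with
            # respect to the score, holding x_adv fixed.
            g = -y / (1.0 + math.exp(margin))
            w = [wi - lr * g * xi * m for wi, xi, m in zip(w, x_adv, mask)]
    return w


def robust_accuracy(data, w, eps):
    """Fraction of points still classified correctly under the attack."""
    correct = 0
    for x, y in data:
        x_adv = fgsm_linear(x, y, w, eps)
        if y * sum(wi * xi for wi, xi in zip(w, x_adv)) > 0:
            correct += 1
    return correct / len(data)


def make_toy_data(n=200, dim=10, seed=1):
    """Toy data: only the first three coordinates carry label signal."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.choice([-1, 1])
        x = [(y if i < 3 else 0.0) + rng.gauss(0.0, 0.5) for i in range(dim)]
        data.append((x, y))
    return data


data = make_toy_data()
mask = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]  # hand-picked 50% sparsity
w_sparse = adversarial_train_masked(data, mask)
print("sparse robust accuracy:", robust_accuracy(data, w_sparse, 0.1))
```

Passing `mask = [1] * 10` trains the dense counterpart under the same settings, which gives a rough way to compare sparse and dense adversarial training on this toy problem. The comparison says nothing about deep networks, where the choice of mask is the substance of the result.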
