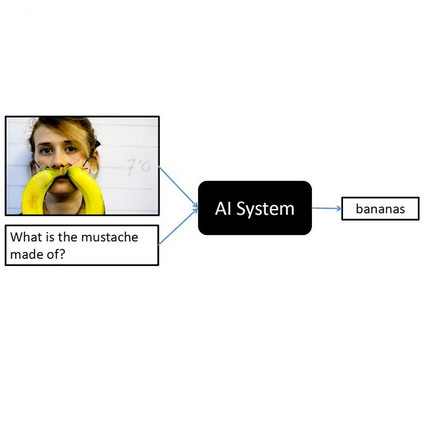

We consider the problem of Visual Question Answering (VQA). Given an image and a free-form, open-ended question expressed in natural language, the goal of a VQA system is to provide an accurate answer to the question with respect to the image. The task is challenging because it requires a simultaneous and intricate understanding of both visual and textual information. Attention, which captures intra- and inter-modal dependencies, has emerged as perhaps the most widely used mechanism for addressing these challenges. In this paper, we propose an improved attention-based architecture for VQA. We incorporate an Attention on Attention (AoA) module within an encoder-decoder framework; the module determines the relation between attention results and queries. A standard attention module generates a weighted average of the values for each query. The AoA module, in contrast, first generates an information vector and an attention gate from the attention result and the current context, and then applies a second attention step that multiplies the two to obtain the final attended information. We also propose a multimodal fusion module that combines visual and textual information by dynamically deciding how much information to take from each modality. Extensive experiments on the VQA-v2 benchmark dataset show that our method achieves state-of-the-art performance.
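The abstract describes the two modules only in words, so the following is a minimal pure-Python sketch of how they could work for a single query, not the authors' implementation. It assumes the common AoA formulation, where the information vector is `i = W_qi q + W_vi v_hat` and the gate is `g = sigmoid(W_qg q + W_vg v_hat)`, with `v_hat` the ordinary attention result and the output `g * i` taken element-wise (bias terms omitted). The fusion function is a hypothetical sigmoid-gated mix of the two modalities, assuming both have already been projected to the same dimension; the abstract does not specify the paper's actual fusion equations. All function and weight names are illustrative.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: each row produces one output element


def matvec(w: Matrix, x: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def add(*vs: Vector) -> Vector:
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vs)]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention: a weighted average of `values` for one query."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def attention_on_attention(query: Vector, keys: List[Vector], values: List[Vector],
                           w_qi: Matrix, w_vi: Matrix,
                           w_qg: Matrix, w_vg: Matrix) -> Vector:
    """AoA sketch: gate the attention result with a query-dependent sigmoid gate."""
    v_hat = attention(query, keys, values)                          # first attention
    info = add(matvec(w_qi, query), matvec(w_vi, v_hat))            # information vector i
    gate = sigmoid(add(matvec(w_qg, query), matvec(w_vg, v_hat)))   # attention gate g
    return [g * i for g, i in zip(gate, info)]                      # second attention: g * i


def gated_fusion(visual: Vector, textual: Vector, w_v: Matrix, w_t: Matrix) -> Vector:
    """Hypothetical fusion: a per-dimension gate decides how much each modality contributes."""
    alpha = sigmoid(add(matvec(w_v, visual), matvec(w_t, textual)))
    return [a * v + (1.0 - a) * t for a, v, t in zip(alpha, visual, textual)]
```

With all-zero gate weights the AoA gate is `sigmoid(0) = 0.5` in every dimension, so the output is half of the information vector; learned gate weights let the module suppress or pass through parts of the attention result depending on how well it matches the query. Likewise, zero fusion weights reduce `gated_fusion` to a plain average of the two modalities.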

翻译:我们考虑了视觉问题解答(VQA)的问题。鉴于图像和以自然语言表达的免费形式、开放的、开放的、问题,VQA系统的目标是就图像问题提供准确的答案。任务具有挑战性,因为它要求对视觉和文字信息需要同时和复杂的理解。关注,它捕捉了不同模式内部和不同模式之间的依赖性。关注或许是用来应对这些挑战的最广泛使用的机制。在本文件中,我们建议改进解决VQA的基于关注的架构。我们把关注(AoA)模块纳入编码器解密框架,从而能够确定关注结果和查询之间的关系。关注模块为每个查询生成加权平均值。另一方面,AoA模块首先利用关注结果和当前背景生成信息矢量和关注门;然后增加另一个关注点,通过将两种模式乘以生成最终的共享信息。我们还提出了将视觉和文本信息结合起来的多式联运模块。这个聚合模块的目标是动态地决定如何从每个模式上实现我们的基准数据。