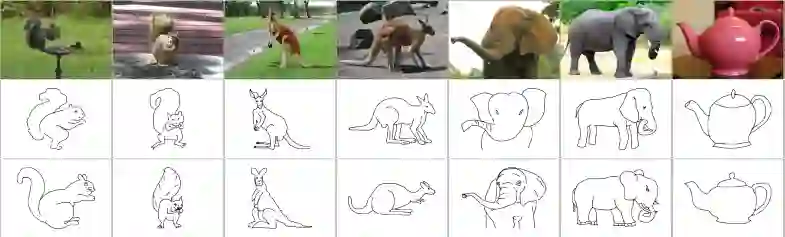

Sketch-based image retrieval (SBIR) is the task of retrieving natural images (photos) that match both the semantics and the spatial configuration of hand-drawn sketch queries. The universality of sketches broadens the range of possible applications and increases the demand for efficient SBIR solutions. In this paper, we study classic triplet-based SBIR solutions and show that a persistent invariance to horizontal flips (which remains even after model fine-tuning) harms performance. To overcome this limitation, we propose several approaches and evaluate each of them in depth to assess its effectiveness. Our main contributions are twofold. First, we propose and evaluate several intuitive modifications for building SBIR solutions with better flip equivariance. Second, we show that vision transformers are better suited to the SBIR task than CNNs and outperform them by a large margin. We carried out numerous experiments and introduce the first models to surpass human performance on a large-scale SBIR benchmark (Sketchy). Our best model achieves a recall of 62.25% at k = 1 on the Sketchy benchmark, compared with 46.2% for previous state-of-the-art methods.
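Why flip invariance hurts retrieval can be illustrated with a toy example. The sketch below is not the authors' code: it assumes 1-D lists stand in for images, Euclidean distance in embedding space, a hinge triplet loss with a hypothetical margin of 0.2, and two hypothetical encoders (one flip-invariant, one not). It computes recall@k (the fraction of sketch queries whose matching photo ranks in the top k) for a photo and its mirror image.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge triplet loss: pull the sketch (anchor) toward its matching photo
    and push it at least `margin` farther from a non-matching photo."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def recall_at_k(sketch_embs, photo_embs, gt_index, k=1):
    """Fraction of sketches whose ground-truth photo is among the k nearest photos.
    gt_index[i] is the index of the photo that matches sketch i."""
    hits = 0
    for i, s in enumerate(sketch_embs):
        # Rank photos by distance; Python's sort is stable, so ties keep index order.
        ranked = sorted(range(len(photo_embs)), key=lambda j: euclidean(s, photo_embs[j]))
        if gt_index[i] in ranked[:k]:
            hits += 1
    return hits / len(sketch_embs)


def flip(img):
    """Horizontal flip of a toy 1-D 'image'."""
    return img[::-1]


def invariant_encoder(img):
    """Hypothetical flip-invariant encoder: symmetrised features, so an image
    and its mirror image get exactly the same embedding."""
    return [a + b for a, b in zip(img, flip(img))]


def identity_encoder(img):
    """Hypothetical orientation-aware encoder: keeps left/right layout."""
    return list(img)


# Two photos that are mirror images of each other, and one sketch for each.
photos = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
sketches = [[0.9, 0.1, 0.0, 0.0], [0.0, 0.0, 0.1, 0.9]]
gt = [0, 1]

results = {}
for name, enc in [("invariant", invariant_encoder), ("identity", identity_encoder)]:
    s_emb = [enc(s) for s in sketches]
    p_emb = [enc(p) for p in photos]
    results[name] = {
        "recall@1": recall_at_k(s_emb, p_emb, gt, k=1),
        # Triplet: sketch 0 as anchor, its photo as positive, the mirror as negative.
        "loss": triplet_loss(s_emb[0], p_emb[0], p_emb[1]),
    }

for name, r in results.items():
    print(name, r)
```

With the flip-invariant encoder both photos collapse to one embedding, so one of the two sketches must miss at k = 1 and the triplet loss cannot fall below the margin; the orientation-aware encoder retrieves both correctly. Real encoders are only approximately invariant, but the toy case shows why mirrored photos become hard to separate.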
