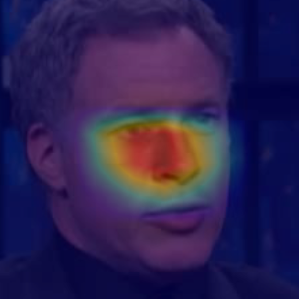

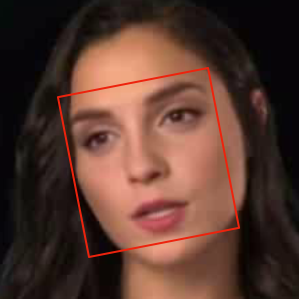

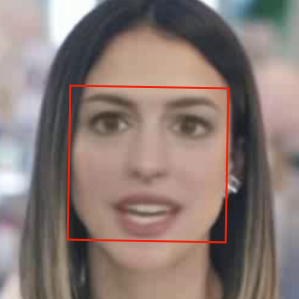

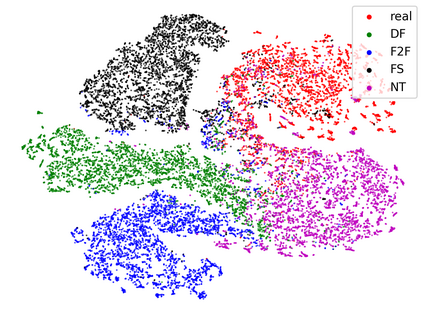

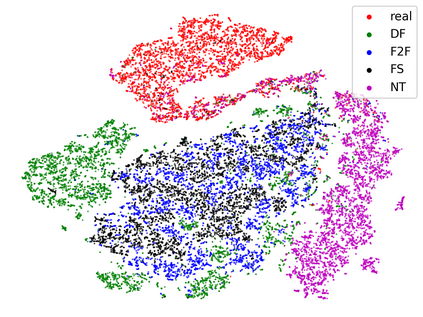

Face manipulation techniques are developing rapidly and have aroused widespread public concern. Although vanilla convolutional neural networks achieve acceptable performance, they suffer from overfitting. To relieve this issue, recent methods introduce erasing-based augmentations. We find that these methods implicitly attempt to induce more consistent representations across augmentations by assigning the same label to differently augmented versions of an image. However, without explicit regularization, the consistency between these representations remains unsatisfactory. We therefore constrain the consistency of different representations explicitly and propose a simple yet effective framework, COnsistent REpresentation Learning (CORE). Specifically, we first capture different representations of the same input under different augmentations, then regularize the cosine distance between these representations to enhance their consistency. Extensive in-dataset and cross-dataset experiments demonstrate that CORE performs favorably against state-of-the-art face forgery detection methods.
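The abstract describes two ingredients: an erasing-based augmentation that produces several views of one image, and an explicit cosine-distance term that pulls the views' representations together. Both can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The names `random_erase`, `cosine_distance`, `cross_entropy`, and `core_loss` are hypothetical, as are the single square erased region, the two-class cross-entropy averaged over views, and the weight `lam`. The paper's loss may normalize or weight the consistency term differently. Representations are plain lists of floats standing in for backbone features.

```python
import math
import random
from typing import List, Optional, Sequence


def random_erase(image: List[List[float]], area_frac: float = 0.25,
                 fill: float = 0.0, rng: Optional[random.Random] = None) -> List[List[float]]:
    """Hypothetical erasing augmentation: copy a 2-D image and fill one random square patch."""
    rng = rng or random.Random()
    h, w = len(image), len(image[0])
    # Side lengths chosen so the patch covers roughly `area_frac` of the image.
    eh = max(1, int(h * math.sqrt(area_frac)))
    ew = max(1, int(w * math.sqrt(area_frac)))
    top = rng.randint(0, h - eh)
    left = rng.randint(0, w - ew)
    out = [row[:] for row in image]  # leave the input image untouched
    for i in range(top, top + eh):
        for j in range(left, left + ew):
            out[i][j] = fill
    return out


def cosine_distance(a: Sequence[float], b: Sequence[float], eps: float = 1e-8) -> float:
    """1 - cosine similarity: 0 for parallel vectors, 1 for orthogonal, 2 for opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / max(na * nb, eps)


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one sample, computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def core_loss(view_logits: Sequence[Sequence[float]],
              view_reps: Sequence[Sequence[float]],
              label: int, lam: float = 1.0) -> float:
    """Hypothetical CORE-style objective for one image seen under several augmentations."""
    # Implicit consistency: every augmented view is trained toward the SAME label.
    ce = sum(cross_entropy(z, label) for z in view_logits) / len(view_logits)
    # Explicit consistency: mean pairwise cosine distance between the views' representations.
    n = len(view_reps)
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    cons = sum(cosine_distance(view_reps[i], view_reps[j]) for i, j in pairs) / max(len(pairs), 1)
    return ce + lam * cons
```

With identical representations across views the consistency term vanishes and only the classification loss remains. Diverging representations add up to `2 * lam` on top of it, which is the explicit pressure that plain label sharing lacks.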
