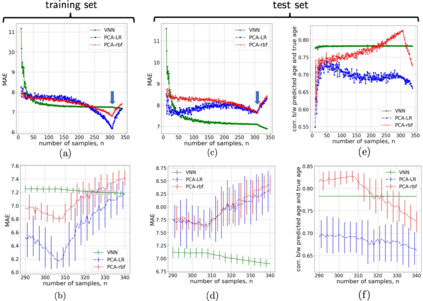

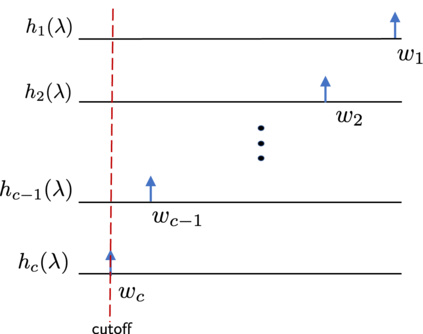

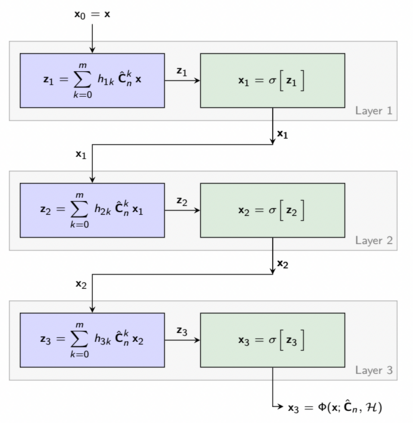

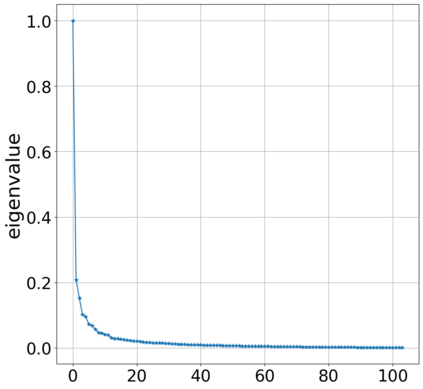

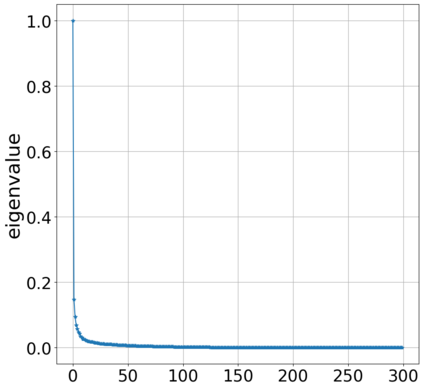

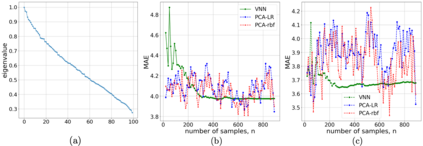

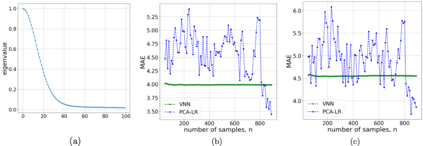

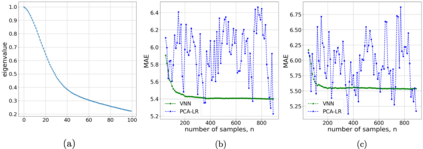

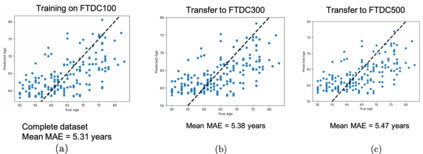

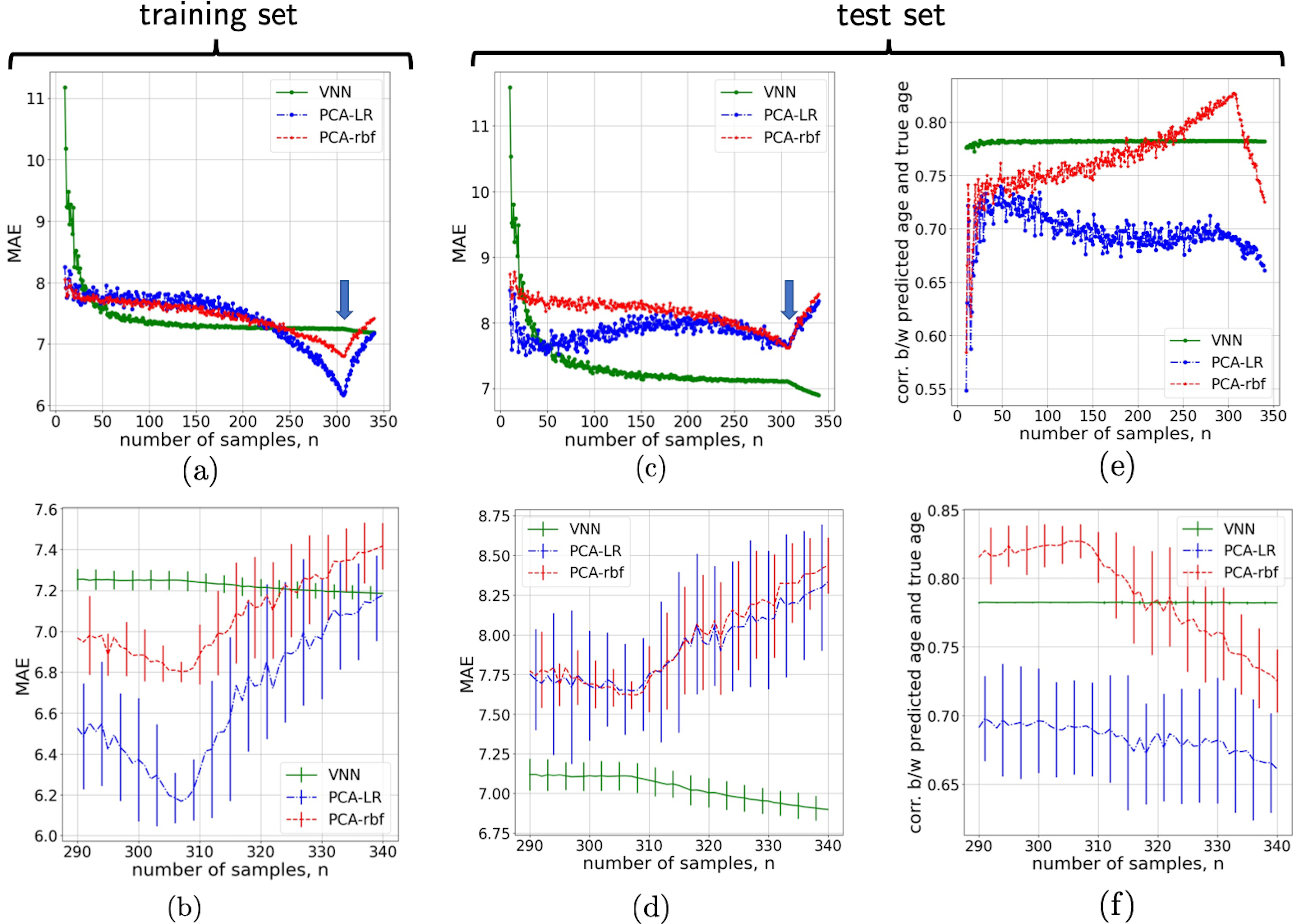

Graph neural networks (GNNs) are an effective framework that exploits inter-relationships within graph-structured data for learning. Principal component analysis (PCA) projects data onto the eigenspace of the covariance matrix, an operation that resembles the graph convolutional filters used in GNNs. Motivated by this observation, we propose a GNN architecture, called the coVariance neural network (VNN), that operates on sample covariance matrices as graphs. We theoretically establish the stability of VNNs to perturbations in the covariance matrix, which implies an advantage over standard PCA-based data analysis approaches that are prone to instability when principal components are associated with close eigenvalues. Our experiments on real-world datasets validate our theoretical results and show that VNN performance is indeed more stable than that of PCA-based statistical approaches. Moreover, our experiments on multi-resolution datasets demonstrate that VNN performance transfers across covariance matrices of different dimensions, a feature that is infeasible for PCA-based approaches.
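To make the link between PCA and graph convolutional filters concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes the filter takes the standard polynomial form used for graph convolutions, with the sample covariance matrix `C` playing the role of the graph, so that the output is `sum_k taps[k] * C^k @ x`. The function names and the filter coefficients `taps` are hypothetical and chosen only for illustration. The sketch checks that applying the filter directly gives the same result as projecting `x` onto the eigenvectors of `C` (the PCA step), scaling each component by a polynomial of its eigenvalue, and mapping back.

```python
import numpy as np


def sample_covariance(X):
    """Sample covariance of data X with shape (n_samples, n_features)."""
    Xc = X - X.mean(axis=0, keepdims=True)
    return Xc.T @ Xc / (X.shape[0] - 1)


def covariance_filter(C, x, taps):
    """Apply the polynomial filter sum_k taps[k] * C^k to the vector x.

    Assumption: this polynomial form is the usual graph convolutional
    filter with C used as the graph matrix; it is illustrative only.
    """
    out = np.zeros_like(x, dtype=float)
    Ckx = x.astype(float)  # holds C^k x, starting at k = 0
    for h in taps:
        out += h * Ckx
        Ckx = C @ Ckx  # advance to the next power of C
    return out


def filter_in_pca_basis(C, x, taps):
    """Same filter, computed through the eigendecomposition of C."""
    eigvals, V = np.linalg.eigh(C)  # C is symmetric, so eigh applies
    # Polynomial of each eigenvalue: sum_k taps[k] * lambda^k
    response = sum(h * eigvals**k for k, h in enumerate(taps))
    # V.T @ x is the PCA projection; scale it, then map back
    return V @ (response * (V.T @ x))


rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))  # synthetic data, 5 features
C = sample_covariance(X)
x = rng.standard_normal(5)
taps = [0.5, -1.0, 0.25]  # hypothetical filter coefficients

y_direct = covariance_filter(C, x, taps)
y_pca = filter_in_pca_basis(C, x, taps)
```

In this sketch the coefficients in `taps` do not depend on the size of `C`, so the same list can be applied to a covariance matrix of a different dimension. The direct form also never computes individual eigenvectors, which are the quantities that become ill-conditioned when eigenvalues are close.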
