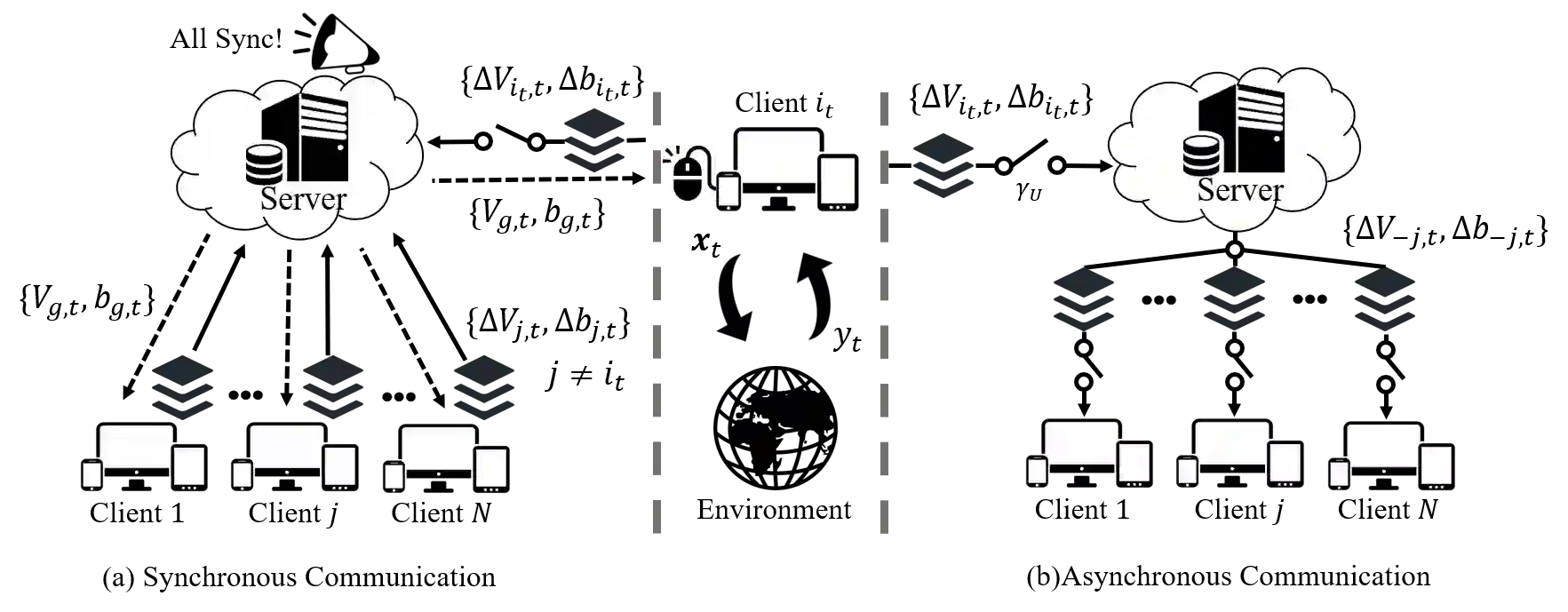

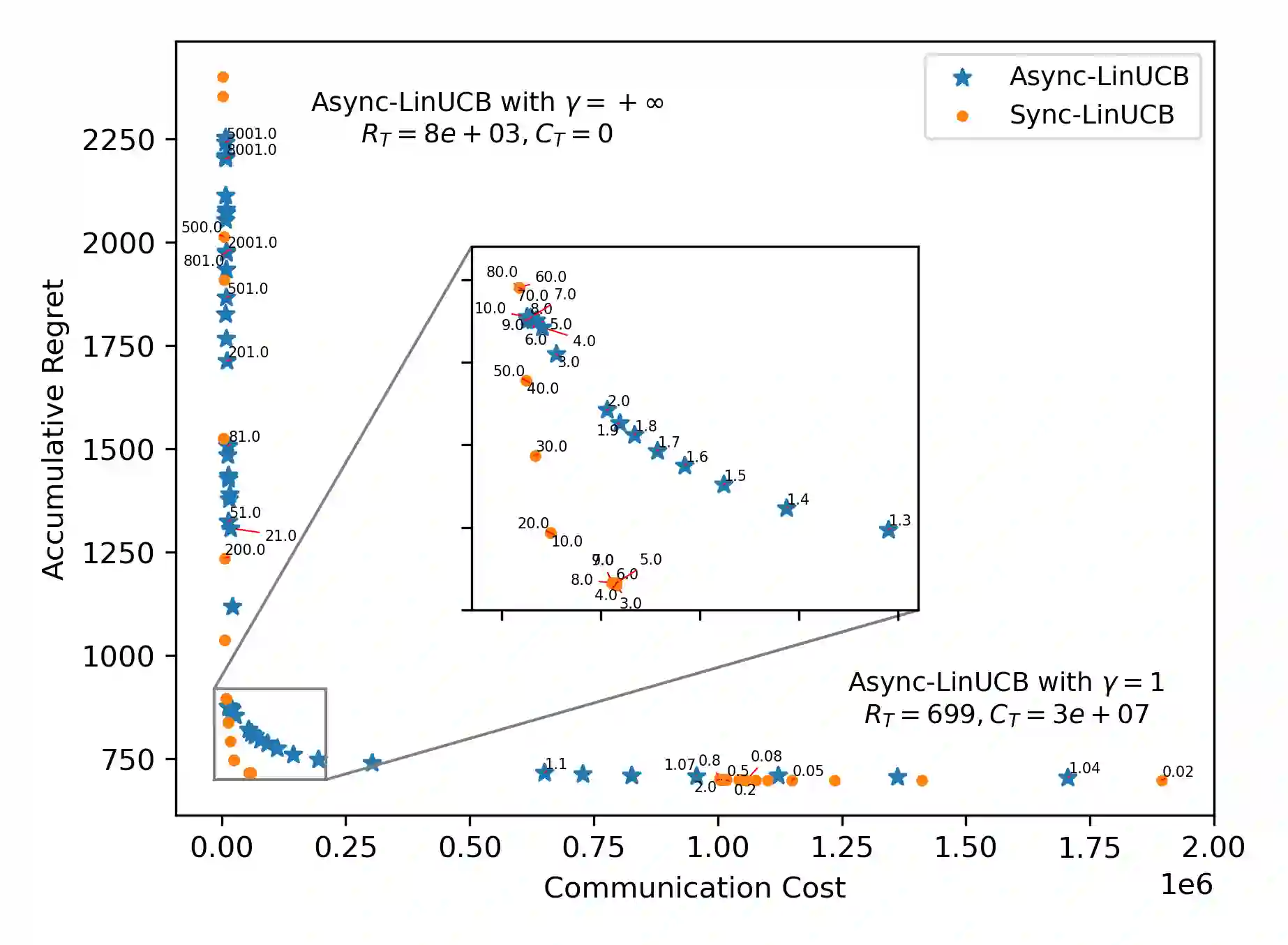

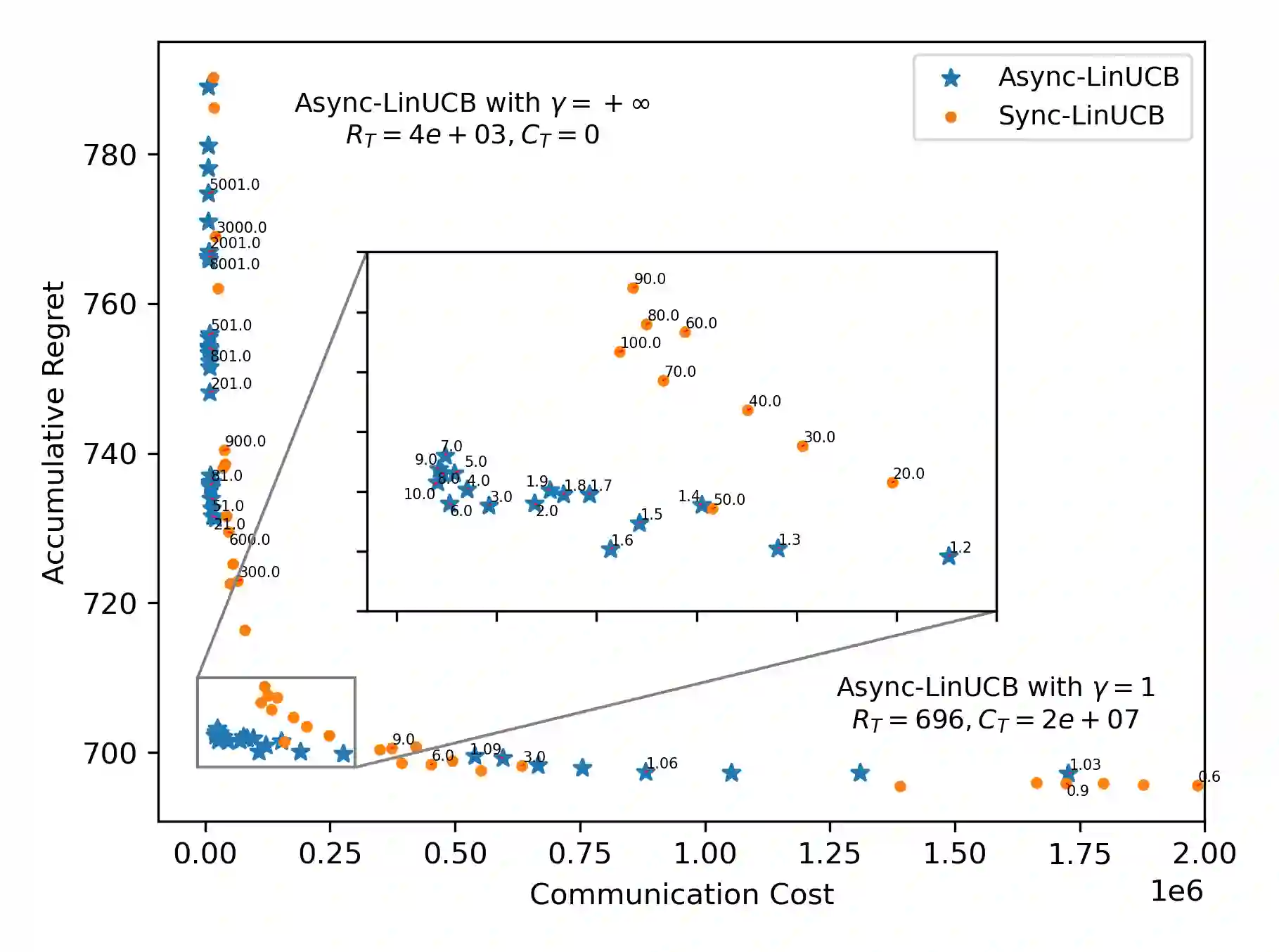

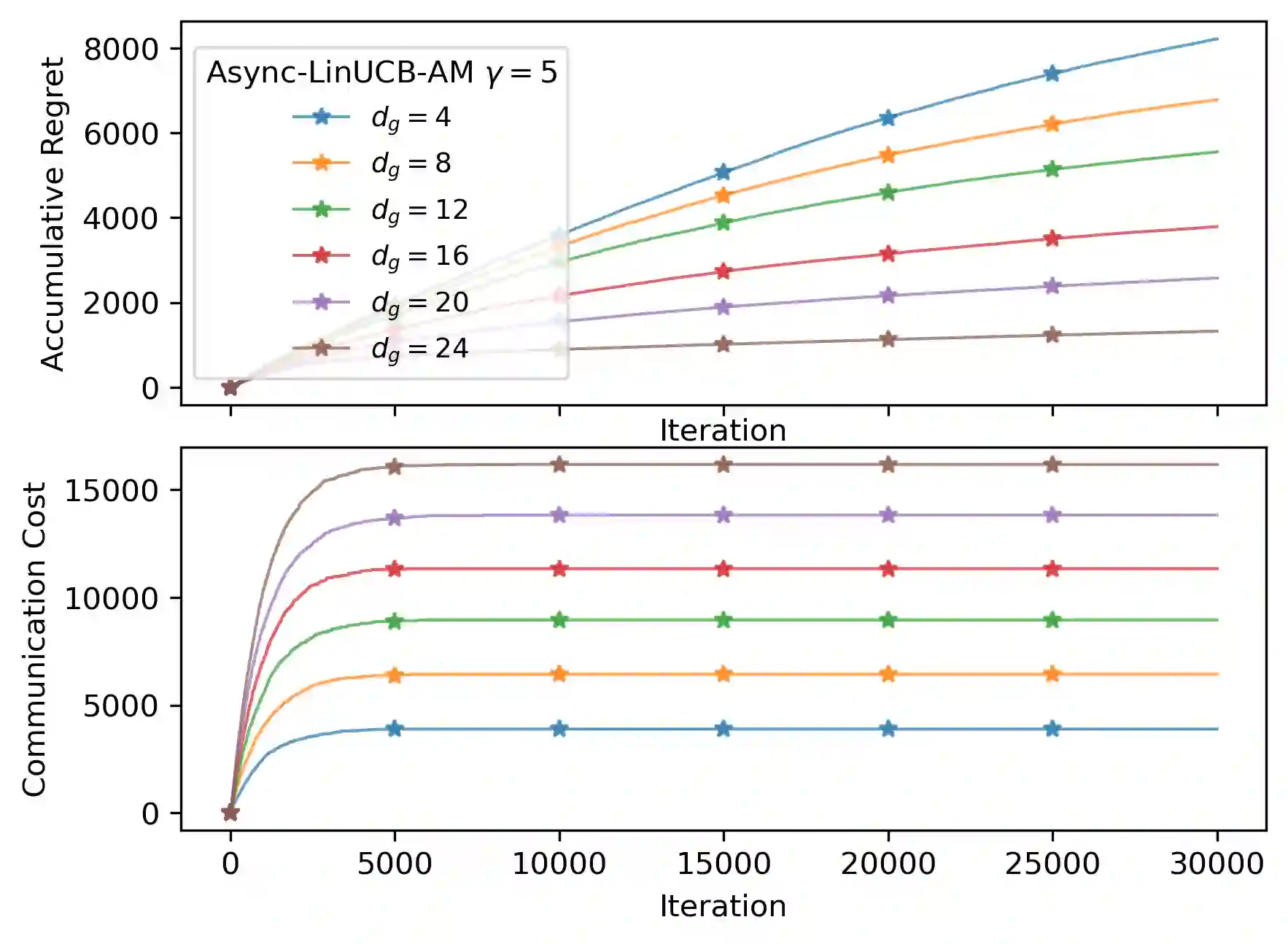

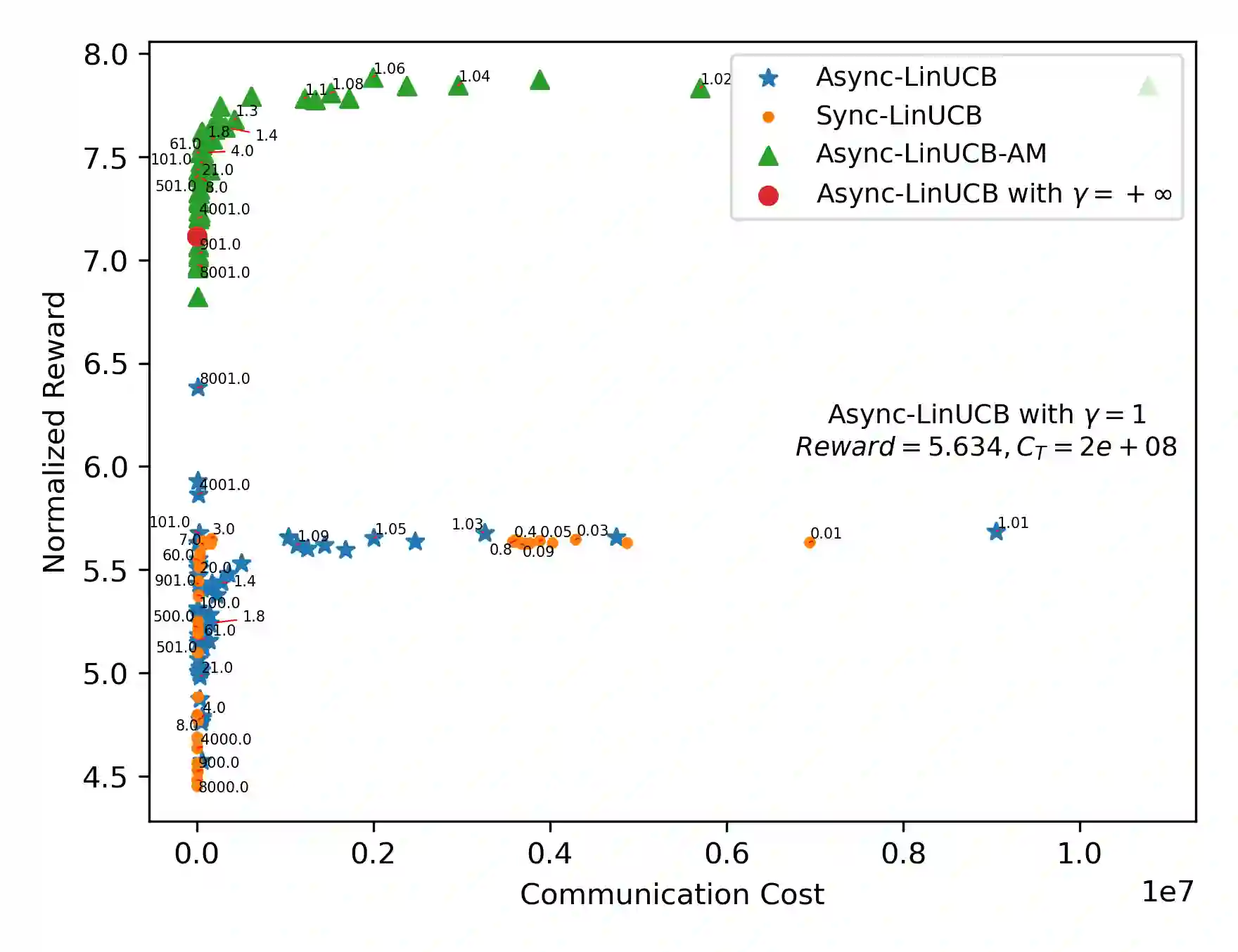

The linear contextual bandit is a popular online learning problem that has mostly been studied in centralized learning settings. With the surging demand for large-scale decentralized model learning, e.g., federated learning, how to retain regret minimization while reducing communication cost has become an open challenge. In this paper, we study the linear contextual bandit in a federated learning setting. We propose a general framework with asynchronous model update and communication, covering a collection of homogeneous clients and a collection of heterogeneous clients, respectively. We provide a rigorous theoretical analysis of the regret and communication cost under this distributed learning framework, and extensive empirical evaluations demonstrate the effectiveness of our solution.
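To make the setting concrete, the following is a minimal sketch of a federated linear bandit with asynchronous, event-triggered communication for homogeneous clients (all sharing one unknown parameter). It is not the algorithm of the paper: the determinant-ratio upload rule, the LinUCB-style arm selection, and every name and parameter here (`Client`, `Server`, `simulate`, `gamma`, `alpha`, `lam`) are assumptions chosen for illustration only.

```python
import numpy as np


class Client:
    """Hypothetical client holding LinUCB sufficient statistics."""

    def __init__(self, dim, lam=1.0):
        self.A = lam * np.eye(dim)       # local Gram matrix (synced + local data)
        self.b = np.zeros(dim)           # local reward-weighted context sum
        self.dA = np.zeros((dim, dim))   # increment not yet uploaded
        self.db = np.zeros(dim)

    def select(self, arms, alpha):
        # Pick the arm with the largest upper confidence bound.
        A_inv = np.linalg.inv(self.A)
        theta_hat = A_inv @ self.b
        bonus = np.sqrt(np.einsum("ki,ij,kj->k", arms, A_inv, arms))
        return int(np.argmax(arms @ theta_hat + alpha * bonus))

    def update(self, x, reward):
        outer = np.outer(x, x)
        self.A += outer
        self.b += reward * x
        self.dA += outer
        self.db += reward * x

    def should_upload(self, gamma):
        # Assumed trigger: upload once det(A) / det(A - dA) exceeds gamma.
        _, logdet_new = np.linalg.slogdet(self.A)
        _, logdet_old = np.linalg.slogdet(self.A - self.dA)
        return logdet_new - logdet_old > np.log(gamma)


class Server:
    """Hypothetical server aggregating uploaded increments."""

    def __init__(self, dim, lam=1.0):
        self.A = lam * np.eye(dim)
        self.b = np.zeros(dim)

    def sync(self, client):
        # Merge the client's increment, then send the global statistics
        # back to that client only; other clients are left untouched.
        self.A += client.dA
        self.b += client.db
        client.A = self.A.copy()
        client.b = self.b.copy()
        client.dA = np.zeros_like(client.dA)
        client.db = np.zeros_like(client.db)


def simulate(n_clients=5, dim=4, n_arms=10, horizon=2000,
             gamma=2.0, alpha=1.0, seed=0):
    rng = np.random.default_rng(seed)
    theta_star = rng.normal(size=dim)
    theta_star /= np.linalg.norm(theta_star)   # shared by all clients
    server = Server(dim)
    clients = [Client(dim) for _ in range(n_clients)]
    regret, uploads = 0.0, 0
    for _ in range(horizon):
        client = clients[rng.integers(n_clients)]   # one client acts per step
        arms = rng.normal(size=(n_arms, dim))
        arms /= np.linalg.norm(arms, axis=1, keepdims=True)
        k = client.select(arms, alpha)
        means = arms @ theta_star
        client.update(arms[k], means[k] + 0.1 * rng.normal())
        regret += means.max() - means[k]
        if client.should_upload(gamma):
            server.sync(client)
            uploads += 1
    return regret, uploads
```

Calling `simulate()` returns the cumulative pseudo-regret and the number of uploads. In this sketch a larger `gamma` means fewer uploads at the price of staler local statistics, which is the regret versus communication trade-off the abstract refers to.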
