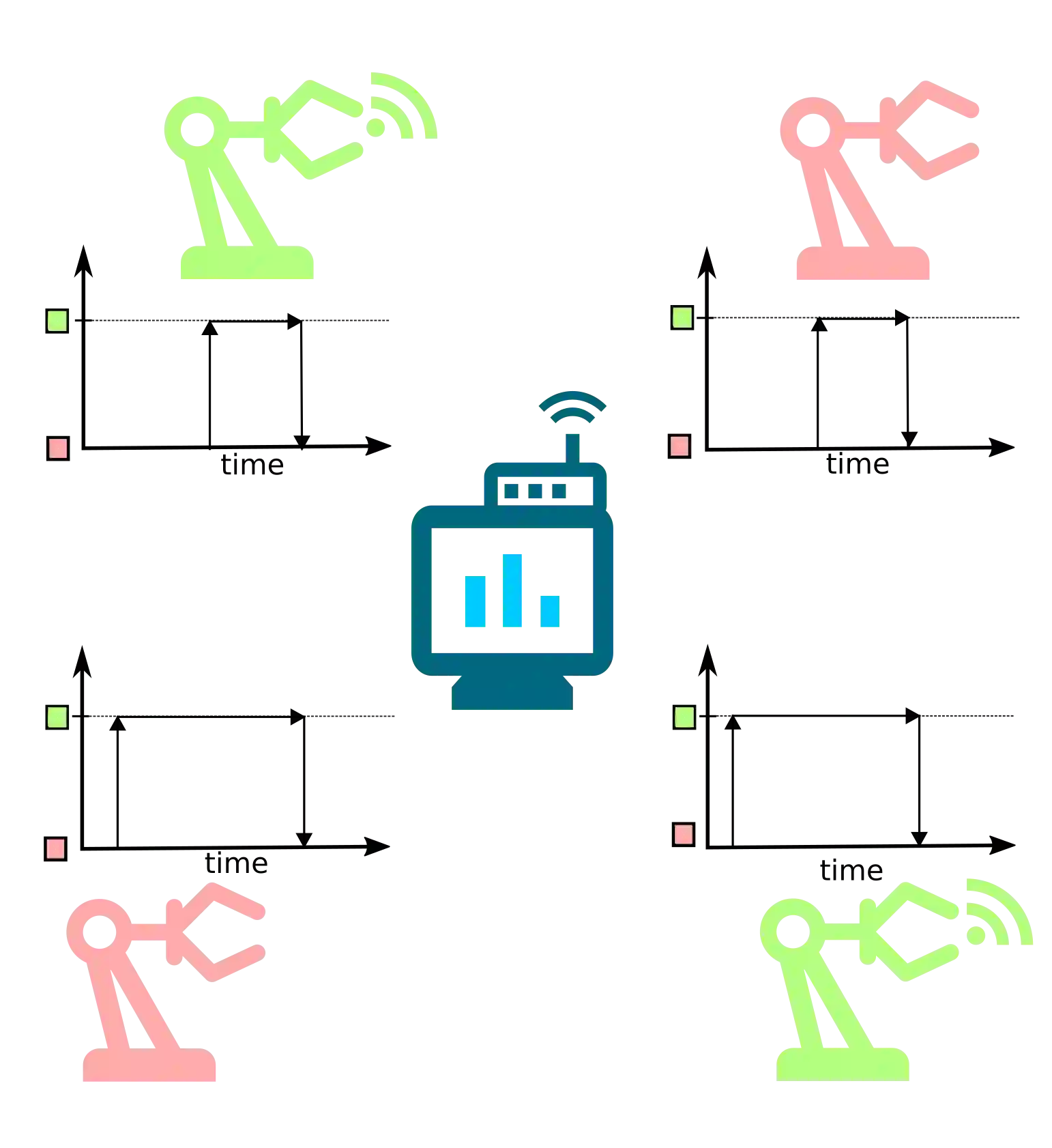

In this work, we consider a remote monitoring scenario in which multiple sensors share a wireless channel to deliver status updates to a process monitor via an access point (AP). We further assume that the sensors randomly arrive at and depart from the network as they become active and inactive. The goal of the sensors is to devise a medium access strategy that collectively minimizes the long-term mean network Age of Information (AoI) of their respective processes at the remote monitor. For this purpose, we propose specific modifications to ALOHA-QT, a distributed medium access algorithm that employs a policy tree (PT) and reinforcement learning (RL) to achieve high throughput. We provide an upper bound on the mean network AoI of the proposed algorithm, along with guidance for selecting its key parameter. The results reveal that the proposed algorithm reduces the mean network AoI by more than 50 percent compared with state-of-the-art stationary randomized policies, while successfully adapting to a changing number of active users in the network. It also requires less memory and computation than ALOHA-QT while achieving a lower AoI.
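
To make the comparison baseline more concrete, the sketch below computes the mean network AoI of one simple stationary randomized policy: slotted ALOHA in which each user transmits in every slot with a fixed probability `p`. This is not the proposed algorithm or ALOHA-QT, and it is not necessarily the exact baseline used in the results. It assumes generate-at-will sources (every transmission carries a fresh update), a fixed number `n_users` of always-active users, a collision whenever two or more users transmit in the same slot, and age measured in slots and reset to 1 after a successful delivery. The function names are hypothetical. Under these assumptions, each user succeeds with probability q = p(1 - p)^(n_users - 1) per slot, so its age is geometric with mean 1/q.

```python
import random


def analytic_mean_aoi(n_users: int, p: float) -> float:
    """Mean network AoI (in slots) of slotted ALOHA with transmit probability p.

    Assumption: generate-at-will sources and collision-only channel errors.
    A user's update gets through only if nobody else transmits, so the
    per-slot success probability is q = p * (1 - p) ** (n_users - 1).
    The age is then geometric with mean 1 / q, and by symmetry the
    network average equals the per-user average.
    """
    q = p * (1 - p) ** (n_users - 1)
    return 1.0 / q


def simulate_mean_aoi(n_users: int, p: float, n_slots: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity, averaged over users and slots."""
    rng = random.Random(seed)
    age = [1] * n_users
    total = 0
    for _ in range(n_slots):
        # Each user independently decides to transmit with probability p.
        transmitters = [i for i in range(n_users) if rng.random() < p]
        # Every user's age grows by one slot ...
        age = [a + 1 for a in age]
        # ... except a lone transmitter, whose fresh update resets its age to 1.
        if len(transmitters) == 1:
            age[transmitters[0]] = 1
        total += sum(age)
    return total / (n_slots * n_users)


if __name__ == "__main__":
    for n, p in [(5, 0.2), (10, 0.2), (10, 0.1)]:
        print(n, p, analytic_mean_aoi(n, p), simulate_mean_aoi(n, p, 50_000))
```

For example, `analytic_mean_aoi(5, 0.2)` gives about 12.21 slots. If the same `p = 0.2` is kept after the number of active users grows to 10, the mean AoI rises to about 37.25 slots, whereas retuning to `p = 0.1` gives about 25.81 slots. A fixed stationary policy must therefore know the number of active users in order to stay well tuned, which is the situation the abstract's adaptive algorithm is designed to handle.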
